Llamaindex Response Modes Explained: Let’s Evaluate Their Effectiveness

Alongside the rise of Large Language Models (LLM), has risen a swath of programming frameworks that aim to make it easier to build applications on top of them, such as LangChain and LlamaIndex.

LlamaIndex is a programming framework that aims to simplify the development of LLM-enabled applications that leverage Retrieval Augmented Generation (RAG).

A core conceptual component to understanding the mechanisms behind LlamaIndex is that of query Response Modes. LlamaIndex has 5 built-in Response Modes: compact, refine, tree_summarize, accumulation, and simple_summarize.

In this article, I will:

- outline what the 5 algorithms are,

- how they compare in function,

- evaluate their effectiveness in answering questions against a large body of text.

To evaluate each mode, I put them to the test in helping answer a question that has plagued the world since that fateful day in November 1963:

Who shot President John F. Kennedy?

I will demonstrate how each Response Mode works by asking LlamaIndex to summarize the conclusions of the ‘Warren Commission Report’, the 250,000-word Congressional report that investigated the assassination of President Kennedy.

This document far exceeds the token limit for GPT-4 and most other mainstream LLMs and requires the use of RAG techniques for an LLM to answer questions on it using only passed in contextual data (not training data).

What is Retrieval Augmented Generation (RAG)?

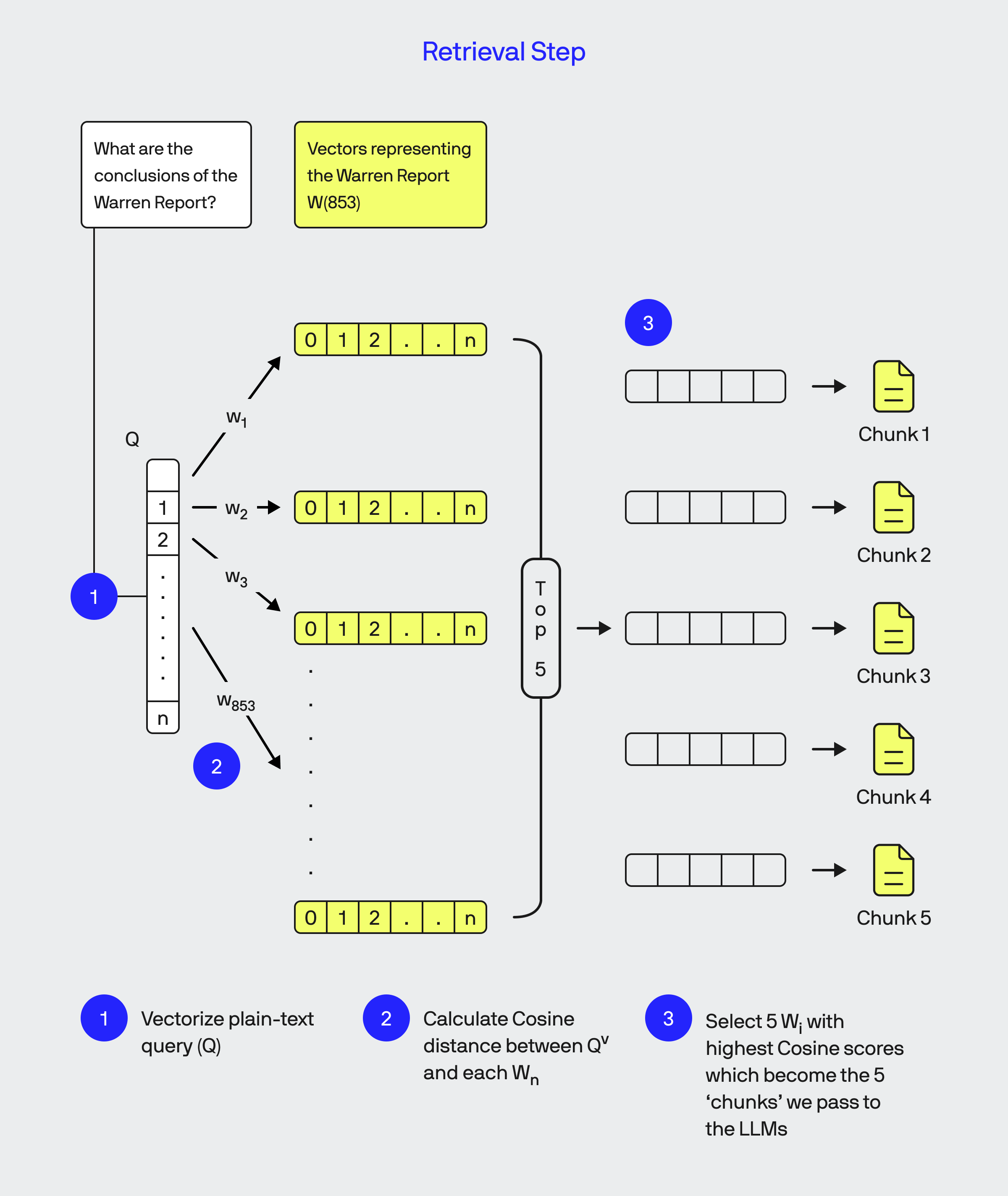

The concept of Retrieval Augmented Generation (RAG) describes an approach to allow an LLM to answer questions based on data that it wasn’t originally trained on. In order to do this, an LLM must be fed this data as part of the prompt, which is generally referred to as ‘context’. However, LLMs have limited input size restrictions (also called token input size), which make it impossible and impractical to pass large datasets, such as the Warren Report, in their entirety via the prompt. Instead, with the RAG-approach, a query to an LLM is broken into two parts: a ‘retrieval step’ and then a ‘generation step’. The ‘retrieval step’ attempts to identify the portions of the original dataset that are most relevant to a user supplied query and to only pass this subset of data to an LLM, alongside the original query, as part of the ‘generative step’. Essentially, RAG is a mechanism to work within the input size restrictions of LLMs by only including in the prompt the most relevant parts of the dataset needed to answer a query. Usually, the ‘retrieval’ portion of RAG utilizes tried-and-true semantic search algorithms such as Cosine Similarity, alongside a Vector Databases to perform this step.

For the purposes of this article and the testing of the Response Modes, the question I am seeking to get an answer to is:

“What are the conclusions of the Warren Report?”

For the purposes of this article, in the retrieval step I set my code to return the top 5 most relevant ‘chunks’ of data that relate to my original query from the Warren Report as part of the Cosine similarity algorithm. These 5 chunks of data are then passed forward to the LLM, which is where LlamaIndex Response Modes come into play.

| Chunk | Location in “Warren Report” |

|---|---|

| Chunk 1 | Pages 1-2 |

| Chunk 2 | Pages 551-553 |

| Chunk 3 | Pages 398-400 |

| Chunk 4 | Pages 7-8 |

| Chunk 5 | Pages 553-1039 |

What are LlamaIndex Response Modes?

When building an LLM enabled application that utilizes RAG techniques, it is still likely that the subset of data returned as part of the retrieval step, which are normally referred to as ‘chunks’, will still be too large to fit within the input token limit for a single LLM call. Most likely, multiple calls will need to be made to the LLM in order to derive a single answer that utilizes all of the retrieved chunks. LlamaIndex Response Modes govern the various approaches that can be used to break down, sequence and combine the results of multiple LLM calls with these chunks to return a single answer to the original query. As of writing, there are 5 basic Response Modes that can be used when querying with LlamaIndex.

Testing Methodology

In evaluating the 5 different Response Modes, I utilize the following frameworks and tools:

- OpenAI’s GPT-4 LLM.

- The BAAI/bge-small-env-v1.5 embedding model available via HuggingFace.

- The PromptLayer library for logging of all prompts sent to and responses received from the LLM.

- A copy of the Warren Commission Report made available via Project Gutenberg.

- A self-hosted Milvus vector database that contains 853 vectors representing the Warren Report.

For each test, I pose the same question: “What are the main conclusions of the Warren Report regarding the assassination of President Kennedy?”

The following is the Python code I used to evaluate each Response Mode, this one configured to use the compact Response Mode:

import os.path

from llama_index.core import (

VectorStoreIndex,

SimpleDirectoryReader,

StorageContext,

load_index_from_storage,

ServiceContext,

set_global_handler,

Settings

)

from llama_index.vector_stores.milvus import MilvusVectorStore

from llama_index.embeddings.huggingface import HuggingFaceEmbedding

from llama_index.llms.openai import OpenAI

# Set the global handler to print to stdout

set_global_handler("promptlayer",pl_tags=["warrenreport"])

Settings.llm = OpenAI(temperature=0.7,model="gpt-4")

Settings.embed_model = HuggingFaceEmbedding(model_name="BAAI/bge-small-en-v1.5")

#load index from existing vector store

vector_store = MilvusVectorStore(dim=1536,uri="http://x.x.x.x:19530", collection_name="warrenreport",token="xxxx:xxxxx")

storage_context = StorageContext.from_defaults(vector_store=vector_store)

index = VectorStoreIndex.from_vector_store(vector_store,storage_context=storage_context)

query_engine = index.as_query_engine(response_mode="compact",similarity_top_k=5)

response = query_engine.query("query_engine = index.as_query_engine(response_mode="compact",similarity_top_k=5)

response = query_engine.query("What are the main conclusions of the Warren Report regarding the assassination of President Kennedy?")")

Note also that in my call to initialize the query_engine in LlamaIndex, I set the similarity_top_k parameter to 5, which tells LlamaIndex to return the top 5 chunks of data that are semantically similar to the query as part of the retrieval step.

For all Response Modes, the same 5 chunks of text are returned from the Warren Commission Report, which are available for you to view in the table below:

‘Compact’

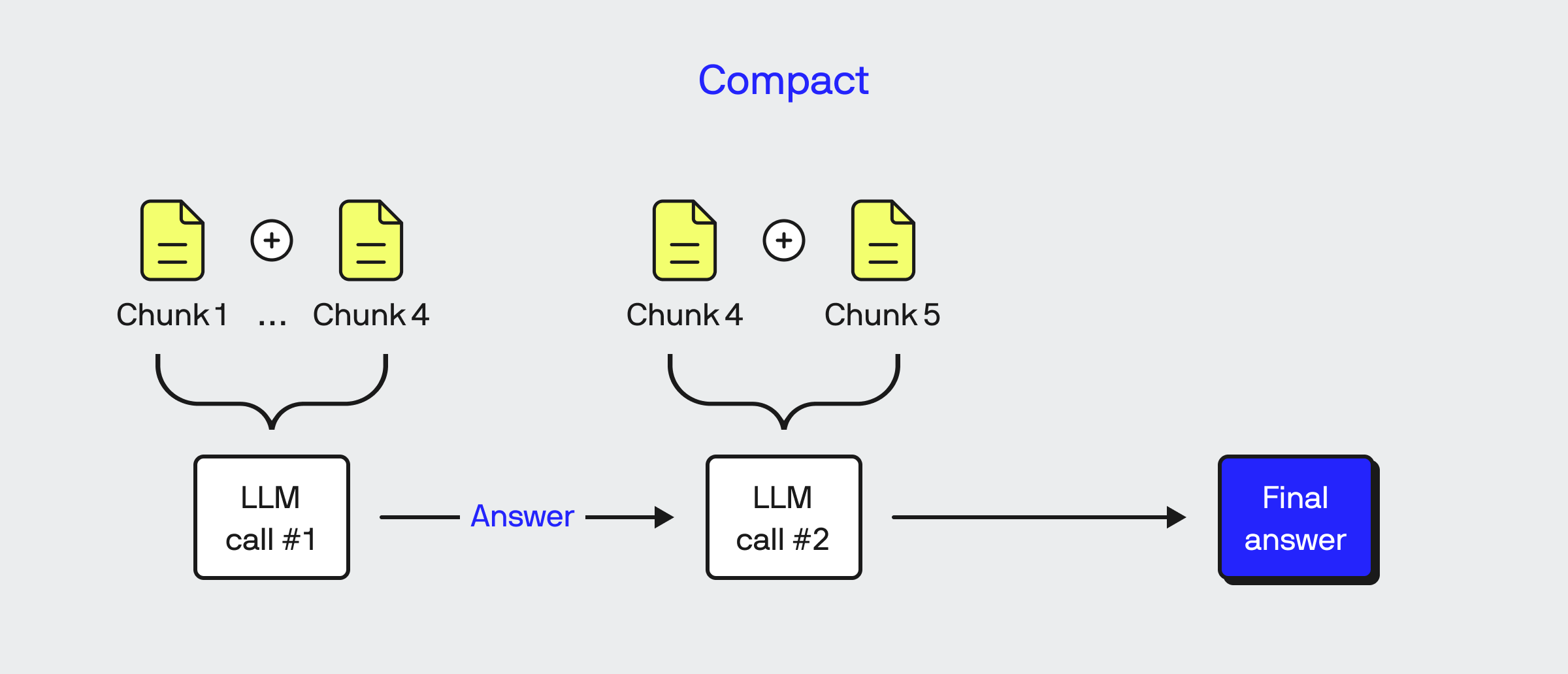

The Response Mode called compact is the default mode used by LlamaIndex if none is specified. The way the compact mode works is that for each chunk that is returned from the retrieval step, LlamaIndex concatenates as many of those chunks together into the largest possible string that fits into a single prompt to the GPT-4 LLM. In our example, the first 4 chunks of matched text fit into the context window for a single GPT-4 call, which means that it requires 2 LLM calls to answer our query on the conclusion of the Warren Report. The 1st call made using the compact Response Mode always uses the text_qa_template prompt:

Context information is below.

---------------------

file_path: blog-data/warren-report.txt

<Concatenation of Chunk 1...4>

--------------------

Given the context information and not prior knowledge, answer the query.

Query: What are the main conclusions of the Warren Report regarding the assassination of President Kennedy?

Answer:LlamaIndex then takes the answer returned to this prompt, the next concatenated set of chunks (in our case simply Chunk 5) and passes it to the LLM along with the last few sentences of Chunk 4 using the refine_template prompt:

You are an expert Q&A system that strictly operates in two modes when refining existing answers:

1. Rewrite an original answer using the new context.

2. Repeat the original answer if the new context isn't useful.

Never reference the original answer or context directly in your answer.

When in doubt, just repeat the original answer.

New Context:

<Last X Characters of Chunk 4>

file_path: blog-data/warren-report.txt

<Chunk 5>

Query: What are the main conclusions of the Warren Report regarding the assassination of President Kennedy?

Original Answer: The main conclusions of the Warren Report regarding the assassination of President Kennedy are not explicitly listed in the provided context. The Warren Report was created to provide full and truthful knowledge concerning the events surrounding the assassination, and to appraise this tragedy by the light of reason and the standard of fairness. The context mentions that the report was prepared with a deep awareness of the Commission’s responsibility to present an objective report of the facts to the American people. However, the specific conclusions of the report are not provided in the context.

New Answer:

‘Compact’ Answer:

The main conclusions of the Warren Report regarding the assassination of President Kennedy are not explicitly listed in the provided context. The Warren Report was created to provide full and truthful knowledge concerning the events surrounding the assassination, and to appraise this tragedy by the light of reason and the standard of fairness. The context mentions that the report was prepared with a deep awareness of the Commission’s responsibility to present an objective report of the facts to the American people. However, the specific conclusions of the report are not provided in the context.As you can see, the compact Response Mode doesn’t answer the question at anything resembling a coherent answer. In fact, the LLM ends up throwing up its hands and doing a spot on rendition of a high school student fumbling their way trying to answer a question they simply have no clue about. The likely reason why the compact Response Mode was unable to answer the question is that the first 4 chunks of text from the Warren Report don’t actually contain the conclusions of the report, which only appears in Chunk 5 (which oddly has a lower Cosine similarity score than the proceeding chunks). Thus, the structure of the refine_template is such that if the first set of calls has gone down the wrong path, it’s difficult for the final prompt to steer the LLM back onto the right track.

You can see the full-text log for both of the LLM calls made with the compact Response Mode below:

‘Refine’

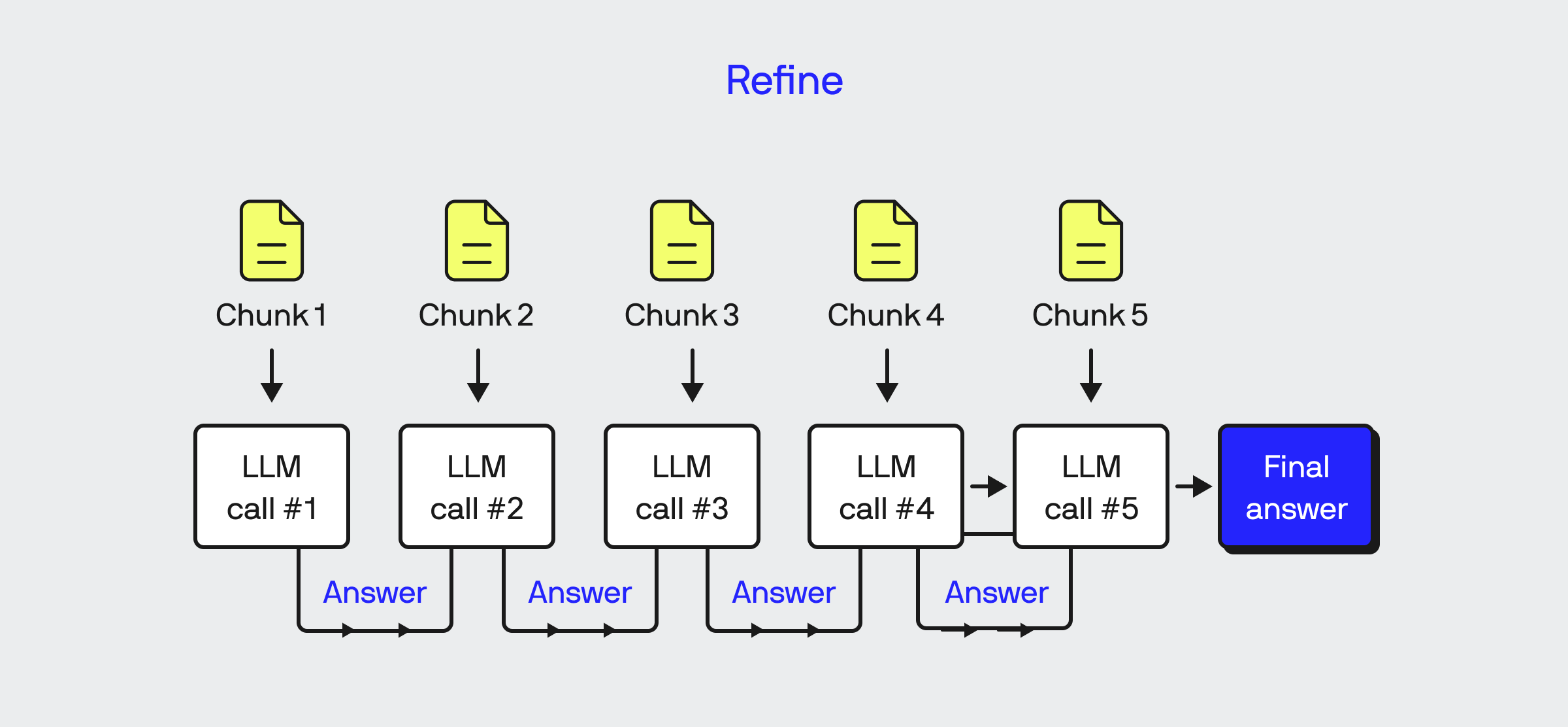

The second Response Mode that LlamaIndex provides is refine. The refine mode is very similar to the compact Response Mode, except that instead of attempting to concatenate as many chunks as it can to maximize the use of the LLM token limit, LlamaIndex only include 1 chunk of retrieved data for each LLM call. Starting with the text_qa_template, LlamaIndex passes in Chunk 1 to the LLM. After that, LlamaIndex then progresses sequentially through each of the remaining chunks one at a time; with each subsequent LLM call using the refine_template to build upon the answer returned from the answer returned from the previous chunk.

‘Refine’ Answer:

The provided context does not include the main conclusions of the Warren Report regarding the assassination of President Kennedy.While the compact Response Mode tried to obfuscate it’s inability to answer the question behind a wall of hand-waving text, the refine Response Mode yields a much more succinct, yet equally useless answer. Much like the compact mode, the refine is much more likely to not be able to properly answer the question when the initial chunks passed to it are less relevant. In our case, the first chunk of data from the Warren Report is the introduction and forward parts of the report which do not contain any conclusions whatsoever, which is likely why it was never able to return a proper answer to our query.

You can see the full-text log for all of the LLM calls made with the refine Response Mode below:

‘Tree Summarize’

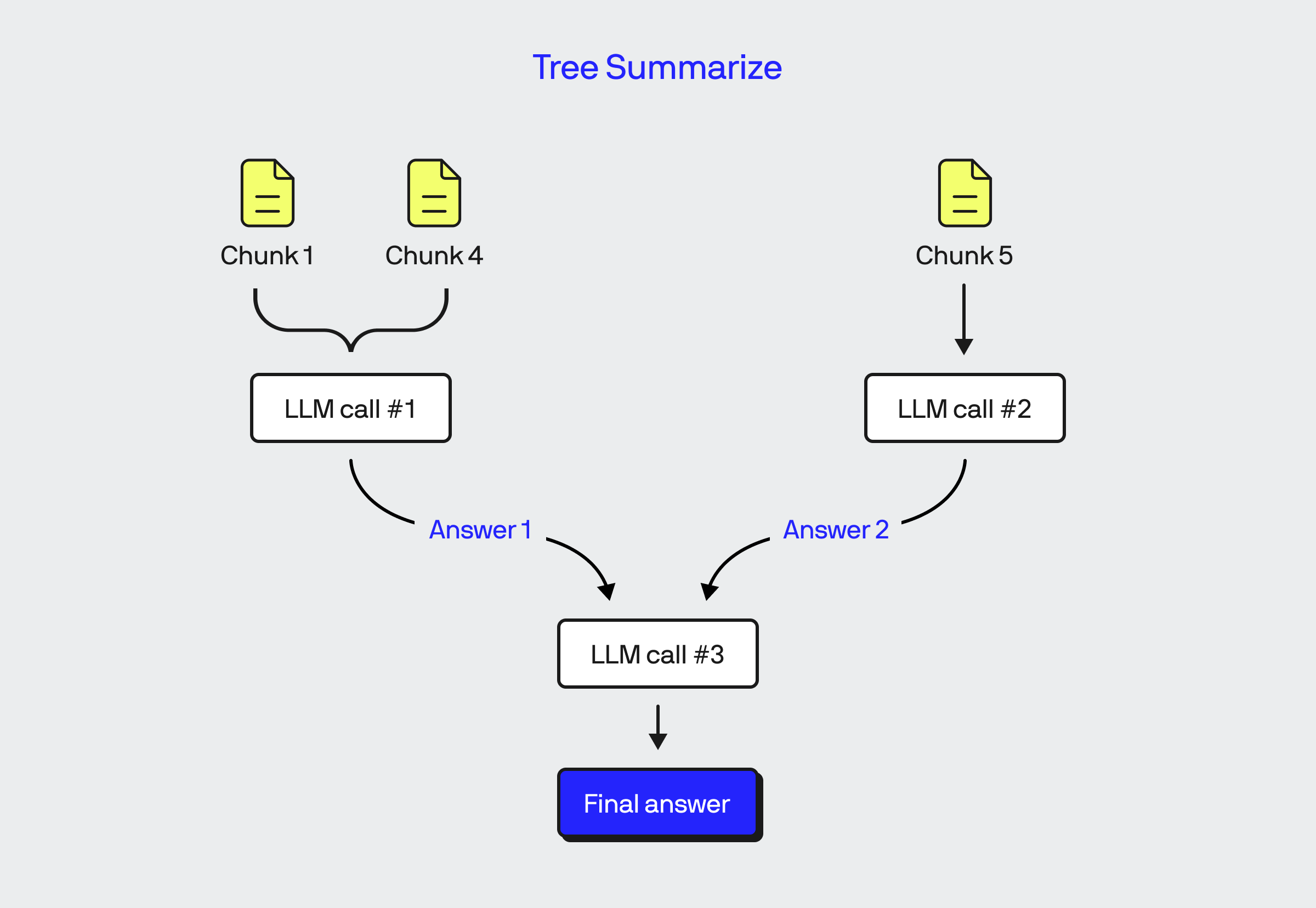

The third, and by far most effective, Response Mode is tree_summarize. The astute reader might surmise from the use of the word ‘tree’ that there is a recursive property to this response mode, and they would be correct. The tree_summarize mode in its base case makes a series of LLM calls that concatenate chunks of retrieved data so that it maximizes the input token limit for the LLM. It then takes the outputs of each of these base case responses, and then passes them together to the LLM and instructs it to derive an answer using those initial answers as context. For anyone familiar working with LangChain, the tree_summarize Response Mode is essentially the same as LangChain’s MapReduceDocumentChain. In our analysis of the Warren Report, the tree_summarize mode required 3 calls to the LLM, 2 for processing the 5 chunks of data, and then 1 for combining the answers from the first 2.

The prompt template used by the tree_summarize Response Mode is quite simple and the same each time, regardless if we are working at the ‘leaf’ level or higher up in the processing tree:

Context information from multiple sources is below.

---------------------

file_path: blog-data/warren-report.txt

<Chunk 1>

file_path: blog-data/warren-report.txt

<Chunk 2>

file_path: blog-data/warren-report.txt

<Chunk 3>

file_path: blog-data/warren-report.txt

<Chunk 4>

---------------------

Given the information from multiple sources and not prior knowledge, answer the query.

Query: What are the main conclusions of the Warren Report regarding the assassination of President Kennedy?

Answer:After making a similar call that includes only Chunk 5, tree_summarize then uses the following prompt template to combine the answers:

Context information from multiple sources is below.

---------------------

<Answer from LLM call for Chunks 1...4>

<Answer from LLM call for Chunk 5>

Given the information from multiple sources and not prior knowledge, answer the query.

Query: What are the main conclusions of the Warren Report regarding the assassination of President Kennedy?

Answer:‘Tree Summarize’ Answer:

The Warren Report concluded that Lee Harvey Oswald acted alone in assassinating President Kennedy, and Jack Ruby also acted alone when he killed Oswald. The shots that killed the president and wounded Governor Connally came from behind and above, specifically from the Texas School Book Depository Building. There was no evidence of any shots being fired from any other location, such as the guarded railroad overpass. No cartridges were found on the overpass, and no witnesses have come forward claiming to have found one. Despite some witness reports, like that of Mrs. Jean L. Hill who claimed to have seen a man running away from the Depository Building, there was no evidence of a conspiracy, domestic or foreign, to assassinate the President. The Commission did recommend improvements to the procedures for presidential protection, but also acknowledged that no procedures can guarantee absolute security.The tree_summarize response mode nails the answer and delivers a thoughtful and complete summary of the findings of the Warren Report that correctly identify Lee Harvey Oswald as the assassin, while also in the same breath, disabusing any notion of a conspiracy or second shooter.

Whereas the refine and compact modes were led astray by the irrelevance of the first set of chunks they analyzed, tree_summarize mode overcomes this as it is uses a map-reduce pattern to have the LLM independently analyze each concatenated chunk of data and then in a separate prompt combine the outputs of those into a single answer.

You can see the full-text log for all of the LLM calls made using the tree_summarize Response Mode below:

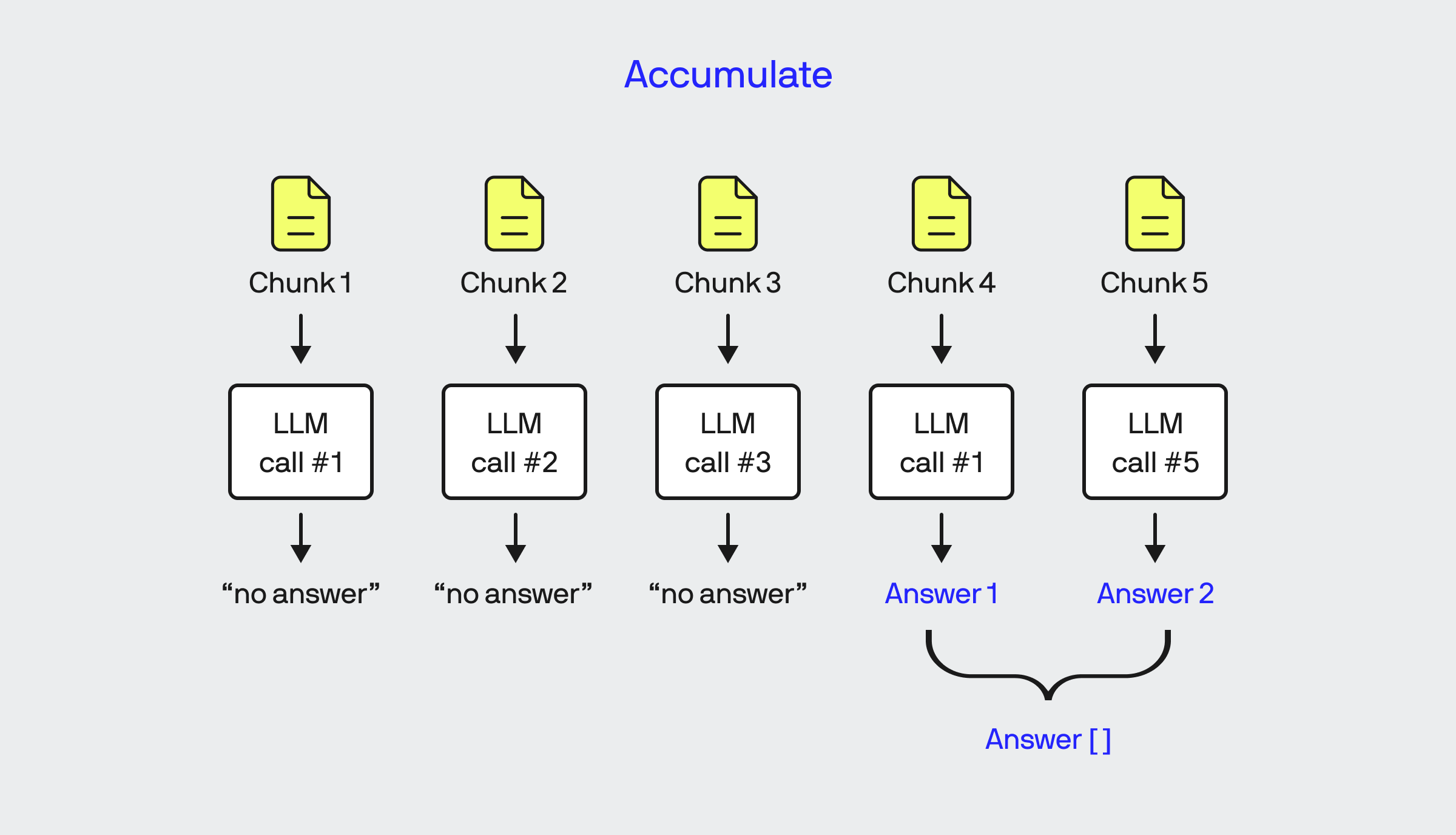

‘Accumulate’

The accumulate Response Mode is quite simple, LlamaIndex makes 1 call per retrieved chunk and then returns every ‘non-null’ answer together as an array of answers. For each of the calls that LlamaIndex makes in accumulate mode, it uses a similar template to tree_summarize:

Context information is below.

---------------------

file_path: blog-data/warren-report.txt

<Chunk n>

---------------------

Given the context information and not prior knowledge, answer the query.

Query: What are the main conclusions of the Warren Report regarding the assassination of President Kennedy?

Answer:‘Accumulate’ Answer:

---------------------

Response 4: The Warren Report concluded that the assassination of President John F. Kennedy was a devastating act of violence against an individual, his family, the nation, and all of humanity. The report aimed to provide a full and truthful understanding of these events, based on reason and fairness, to the American public and the world. It also acknowledged the Commission's responsibility to present an objective account of the facts relating to the assassination.

---------------------

Response 5: The main conclusions of the Warren Report regarding the assassination of President Kennedy include the following points: The shots that entered the neck and head of the President and wounded Governor Connally came from behind and above, with no evidence that any shots were fired at the President from anywhere other than the Texas School Book Depository Building. The railroad overpass was guarded on November 22 by two Dallas policemen, Patrolmen J. W. Foster and J. C. White, who testified that only railroad personnel was permitted on the overpass. The Commission did not find any witnesses who saw shots fired from the overpass. No cartridge of any kind was found on the overpass. Lastly, Mrs. Jean L. Hill stated that after the firing stopped she saw a man running west away from the Depository Building in the direction of the railroad tracks, but no other witnesses claimed to have seen a man running towards the railroad tracks.The returned result from LlamaIndex is an array of strings of length 2, containing the responses returned from the LLM for chunks 4 and 5. The answers for chunks 1,2,3 are not included in this result, because for each of those calls, the LLM returned the logical equivalent of a ‘null’ response in the form of 'The context does not provide information on the main conclusions of the Warren Report regarding the assassination of President Kennedy.’

The accumulate mode doesn’t so much answer the question, but instead returns n answers to the question with each of the answers being scoped simply to the context chunk passed to the LLM in that call. It is the responsibility then of the calling application to take the list of answers to produce an actual final answer to the query. ‘Accumulate’ doesn’t work well for something like the Warren Report where each chunk doesn’t necessarily contain the complete information to answer the question.

You can see the full-text log for all of the LLM calls made using the accumulate Response Mode below:

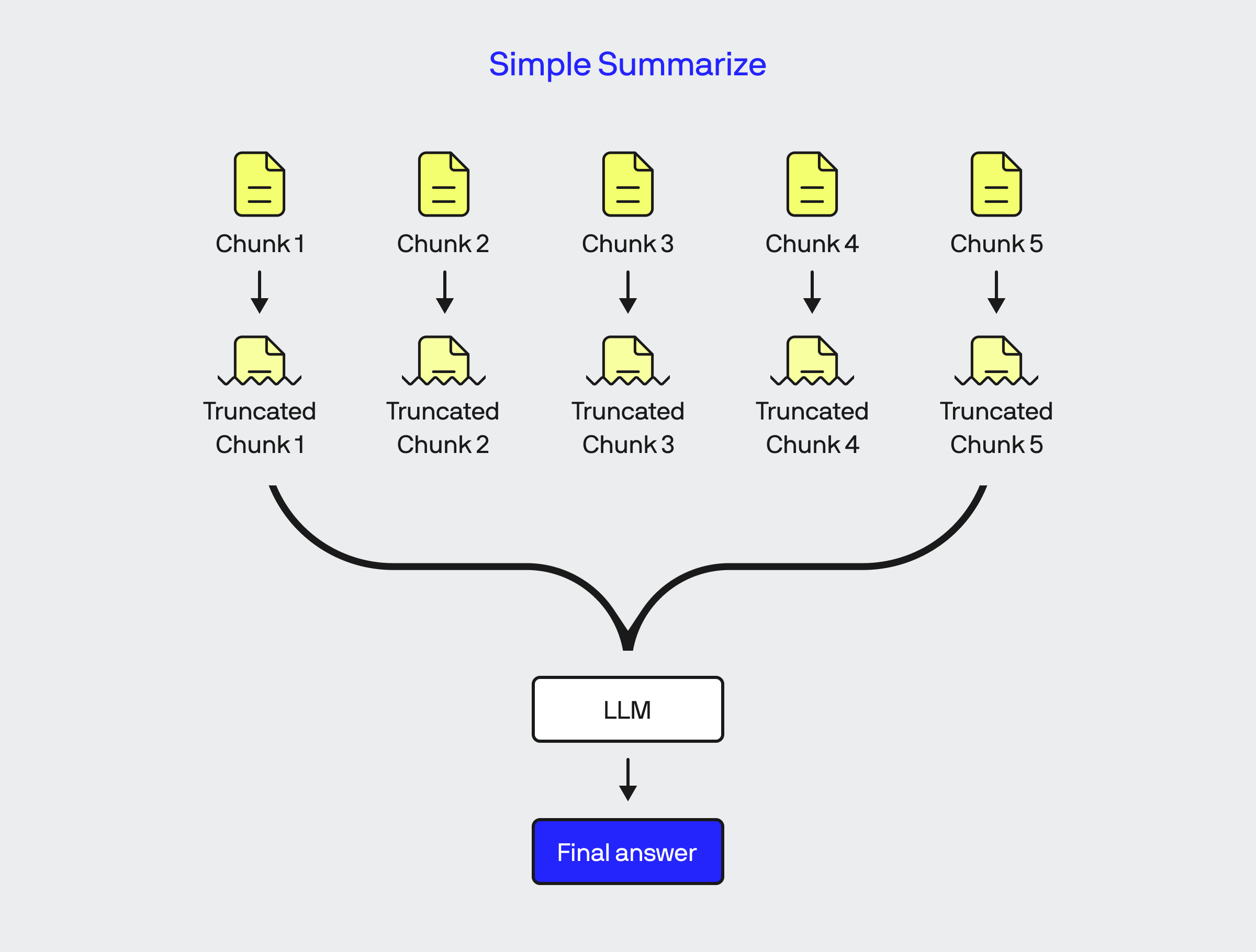

‘Simple Summarize’

The final LlamaIndex Response Mode is simple_summarize. This perhaps the most basic and straightforward of the response modes. In this mode, LlamaIndex truncates all text chunks so that all chunks can be concatenated and passed into the LLM in a single call. No matter how many chunks are retrieved, there will only ever be a single call made to the LLM. In our example, the prompt template that is used in simple_summarize mode looks like:

Context information is below.

---------------------

file_path: blog-data/warren-report.txt

<Truncated Chunk 1>

file_path: blog-data/warren-report.txt

<Truncated Chunk 2>

file_path: blog-data/warren-report.txt

<Truncated Chunk 3>

file_path: blog-data/warren-report.txt

<Truncated Chunk 4>

file_path: blog-data/warren-report.txt

<Truncated Chunk 5>‘Simple Summarize’ Answer:

The Warren Report concluded that the shots which killed President John F. Kennedy were fired from behind and above, specifically from the Texas School Book Depository Building. It also determined that Lee Harvey Oswald was the assassin of the President. Furthermore, the report found no evidence that any shots were fired from the railroad overpass, as speculated by some, and asserted that the overpass was guarded by two Dallas policemen. The report aimed to dispel various speculations and rumors surrounding the assassination, providing a factual account based on their investigation.Surprisingly, the answer provided by simple_summarize is not bad and almost as complete as the one provided by tree_summarize However, I would attribute this more to luck then a structural advantage of the mechanism. With the simple_summarize mode, as the number of chunks returned from the retrieval step goes up, its likely that the ultimate answer returned will decrease in quality due to the knowledge being lost in the truncated segments of each chunk.

You can view the full-text log of the prompt made in the simple_summarize test here.

Conclusion:

Looking at the results of my tests, the tree_summarize Response Mode returned the most comprehensive and complete answer to the question “What are the conclusions of the Warren Report?”. The recursive, map-reduce like algorithm it employs allows it to build up the correct answer looking at all matched chunks of text from the Warren Commission Report and the responses from GPT-4 to each of them. This is not to say that tree_summarize is the only Response Mode you need when building a RAG-style application, however for the purposes of summarizing content from across a large body of text, it clearly has structural advantages that lend itself more effective than the other modes. However, it’s important to understand the different motivations behind each of the other 4 Response Modes and to know which circumstances each might be the best tool for the job.

With the rapidly increasing sizes of input token limits for newer LLM models, it can be argued that the need for RAG will slowly diminish. However, even in a world where LLMs are able to accept million token inputs, it will always behoove a consumer of an LLM to maximize the efficiency of the each token used in the context passed in each LLM call. As such, the LlamaIndex framework provides a very neat and consistent programming model to build RAG-style applications, certainly more so than LangChain. For those of you interested in learning more about LlamaIndex and building with it, I encourage you to visit the LlamaIndex documentation, as the team has done an excellent job of building a set of , clear and easy to work through tutorials that outline the many capabilities of LlamaIndex.

Bobby Gill