AutoTweeter: How I Used LangChain, GPT-4 and AWS Lambda to Put AI in Control of My Twitter Feed

A few weeks ago, I set out on a quest to demonstrate the potential of generative AI to autonomously create content for a well-established, long-running, formerly human-authored Twitter feed.

Simply put, I wanted an AI to tweet like me, on the topics I generally tweet about and in the same tone. This project, called AutoTweeter, is a prototype for fully automating social media content creation with an AI that matches the user’s voice closely enough that no one notices the switch.

Built using LangChain and GPT-4 packaged within an AWS Lambda function, plus a souped-up Google Sheet, AutoTweeter has been in control of my Twitter feed for the past three weeks, and I think the results have been devastatingly positive. Perhaps I have never been great at tweeting, or perhaps generative AI is that good, but looking at my recent Twitter history, you’d be hard-pressed to tell the Tweets produced by AI from those written by me.

In this post, I will outline the AutoTweeter solution at a high level, then drill down into the mechanics of how I used LangChain with OpenAI’s GPT-4 model to, in essence, put an AI in the driver’s seat of my Twitter account.

The complete source code for the AutoTweeter solution and instructions to use it can be found on GitHub.

Goals for an AI-Powered Tweeter

When I set out on this fabled quest, there were specific requirements any solution I developed had to fulfill:

- It needed to run entirely autonomously in the cloud, researching topics and generating Tweets on a scheduled basis.

- It needed to tweet in a manner that accurately reflected the tone and structure of how I normally tweet. As in a Turing test, the AI in control of my Twitter had to make the people reading it believe that I was the one tweeting.

- It had to have tact. That is, I didn’t want the AI to generate something insensitive or inappropriate about a tragedy while attempting to be humorous.

- It needed to allow for manual intervention on my part, meaning I needed to be able to add or edit Tweets from my mobile phone at any time.

Solution: AutoTweeter

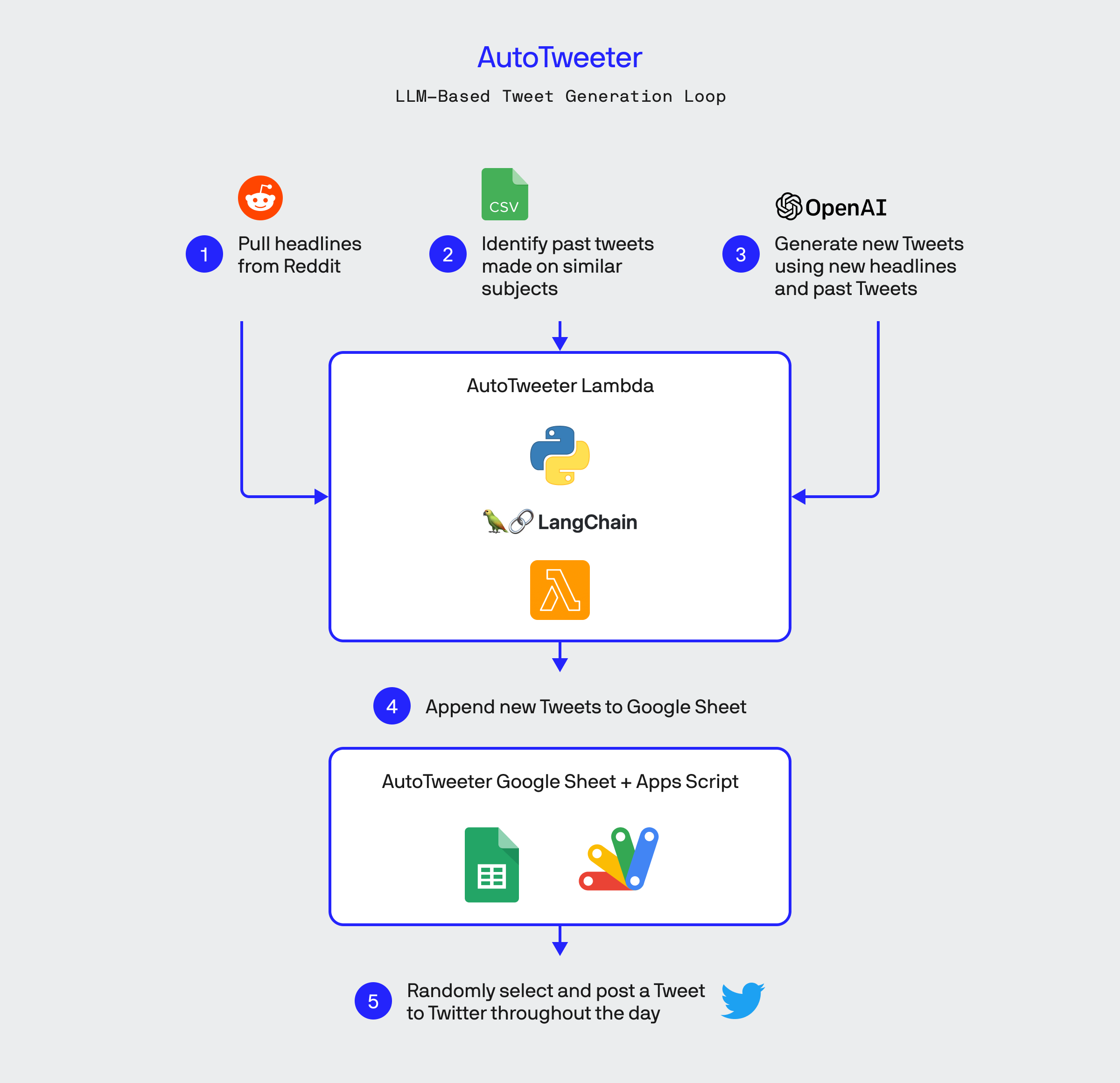

The ultimate solution, which I’ve so aptly named “AutoTweeter,” comprises two parts, with the first being a Python-based Lambda function that contains all the LLM magic to generate new Tweets.

The AutoTweeter Lambda function makes heavy use of LangChain’s capabilities for vectorization, document search, and LLM chaining to leverage GPT-4 to generate new Tweets, then dumps these newly created Tweets into a Google Sheet. The second part of AutoTweeter is contained within the Google Sheet: a Google Apps Script that runs on a scheduled basis, randomly selecting one of the Tweets in the Sheet and posting it to my Twitter feed.

Steps in the LLM-Based Tweet Generation Loop

Let’s dive into each step of the AutoTweeter AI solution for generating new Tweets shown in the above diagram.

1.) Pull Headlines for the AI to Tweet About from Reddit

Since much of the tweeting I’ve done in the past has been about current events and happenings in the world, part of the challenge of this solution was finding a source of relevant and timely current events that could be used to seed the generation of new content.

Like most problems in life, the solution was found in Reddit, specifically the r/worldnews subreddit. What better source than this subreddit to find new headlines for my AI to generate pithy, sarcastic Tweets about? In the first step of the content generation loop, AutoTweeter calls out to the Reddit API to retrieve headlines from r/worldnews, which the AI will then write Twitter commentary on.
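Here is a minimal sketch of what that first step could look like. It is not lifted from the AutoTweeter source: the public JSON listing URL, the User-Agent string, and the function names are all assumptions for illustration, and the real code may well go through an authenticated Reddit client instead. The parsing is kept separate from the HTTP call so it can be tried offline.

```python
import json
import urllib.request

# Assumed endpoint: Reddit's public JSON listing for r/worldnews (top posts of the day).
REDDIT_URL = "https://www.reddit.com/r/worldnews/top.json?limit=10&t=day"


def extract_headlines(listing: dict) -> list[str]:
    """Pull post titles out of a Reddit listing payload."""
    children = listing.get("data", {}).get("children", [])
    return [child["data"]["title"].strip() for child in children]


def headlines_as_text(headlines: list[str]) -> str:
    """Join headlines into one newline-delimited string for the later steps."""
    return "\n".join(headlines)


def fetch_worldnews_headlines(url: str = REDDIT_URL) -> str:
    """Fetch the listing over HTTP. Needs network access, so it is not called here."""
    # A descriptive User-Agent is sent because generic ones tend to get throttled.
    request = urllib.request.Request(url, headers={"User-Agent": "autotweeter-sketch/0.1"})
    with urllib.request.urlopen(request, timeout=10) as response:
        listing = json.load(response)
    return headlines_as_text(extract_headlines(listing))


# Offline demonstration with a hand-written payload in the listing shape.
sample_listing = {
    "data": {
        "children": [
            {"data": {"title": "Example headline one "}},
            {"data": {"title": "Example headline two"}},
        ]
    }
}
sample_text = headlines_as_text(extract_headlines(sample_listing))
print(sample_text)
```

The resulting string plays the role of the headline text that gets handed to the retrieval step.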

2.) Train the AI Using My Own Tweets Made on Similar Topics

I’ve been tweeting since 2009 and have written a corpus of about 10,000 Tweets since then. I used the data export facility of Twitter to get a CSV dump of those Tweets. One important goal of this whole exercise was to ensure the AI could accurately match my tone when Tweeting, which charitably could be described as observational humor that I’d like to think is sometimes on-point and, at times, shockingly low-brow.

I used Code Interpreter to scrub vanilla RTs and replies out of these 10,000 Tweets. What remains touches on a variety of current events and topics, and serves to guide the LLM through a sort of few-shot learning exercise when it attempts to generate new Tweets on related issues.

After AutoTweeter queries Reddit for the latest posts in the r/worldnews subreddit, it searches through my entire Twitter history to identify past Tweets I’ve written about similar subjects, which are passed in as context to the LLM when it generates a new set of Tweets. This is done through an in-memory vector database, LangChain’s DocArrayInMemorySearch store, with a similarity search run on top of it.

The following function implements this step. It takes as input a string containing the list of headlines pulled from Reddit.

    # Import paths as they were in the LangChain releases current at the time of writing.
    from langchain.document_loaders import CSVLoader
    from langchain.embeddings import OpenAIEmbeddings
    from langchain.text_splitter import CharacterTextSplitter
    from langchain.vectorstores import DocArrayInMemorySearch

    # SAMPLE_TWEETS_FILENAME and TWEET_CSV_QA_TEMPLATE are constants defined elsewhere in the project.

    def getSampleTweetsUsingRetrievalQA(selected_reddit_posts_as_text) -> str:
        # Load all of the past Tweet data from a CSV file.
        file = SAMPLE_TWEETS_FILENAME
        loader = CSVLoader(
            file_path=file,
            encoding="utf8",
            csv_args={'delimiter': ',', 'quotechar': '"', 'fieldnames': ['Tweet']},
        )
        tweets = loader.load()

        # Vectorize the loaded Tweet database into an in-memory vector DB.
        text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=0)
        split_tweets = text_splitter.split_documents(tweets)
        embeddings = OpenAIEmbeddings()
        db = DocArrayInMemorySearch.from_documents(split_tweets, embeddings)

        # Query the vector DB for the subset of past Tweets that are
        # semantically related to the Reddit headlines passed as input.
        query = TWEET_CSV_QA_TEMPLATE + selected_reddit_posts_as_text
        docs = db.similarity_search(query)

        # Compose all retrieved past Tweets into a single string and return it.
        reference_tweets = "\n".join(doc.page_content for doc in docs)
        return reference_tweets
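For a feel of what that similarity search is doing under the hood, here is a toy sketch. It is not the code AutoTweeter runs: the word-count "embedding" is a crude stand-in I am assuming in place of OpenAI's embedding model, and the sample Tweets are made up. The ranking idea is the same, though: turn the query and every past Tweet into vectors, score each pair by cosine similarity, and keep the top k (4 here, which as far as I know is also the default for LangChain's similarity_search).

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def most_similar(query: str, documents: list[str], k: int = 4) -> list[str]:
    """Return the k documents most similar to the query, best match first."""
    query_vec = embed(query)
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vec, embed(doc)),
        reverse=True,
    )
    return ranked[:k]


# Made-up past Tweets, for illustration only.
past_tweets = [
    "the price of oil is up again so I am walking to work",
    "my cat has strong opinions about breakfast",
    "oil prices fall and my car celebrates",
]
matches = most_similar("oil prices rise after summit", past_tweets, k=2)
print(matches)
```

A real embedding model captures meaning rather than exact word overlap, which is why it can match a headline to an old Tweet that shares no vocabulary with it.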

3.) Generate New Tweets Using Reddit Headlines

In the next step, the newly queried Reddit headlines and the reference Tweets identified in the vector store of past Tweet history are passed to a method that calls the LLM and generates a new set of Tweets.

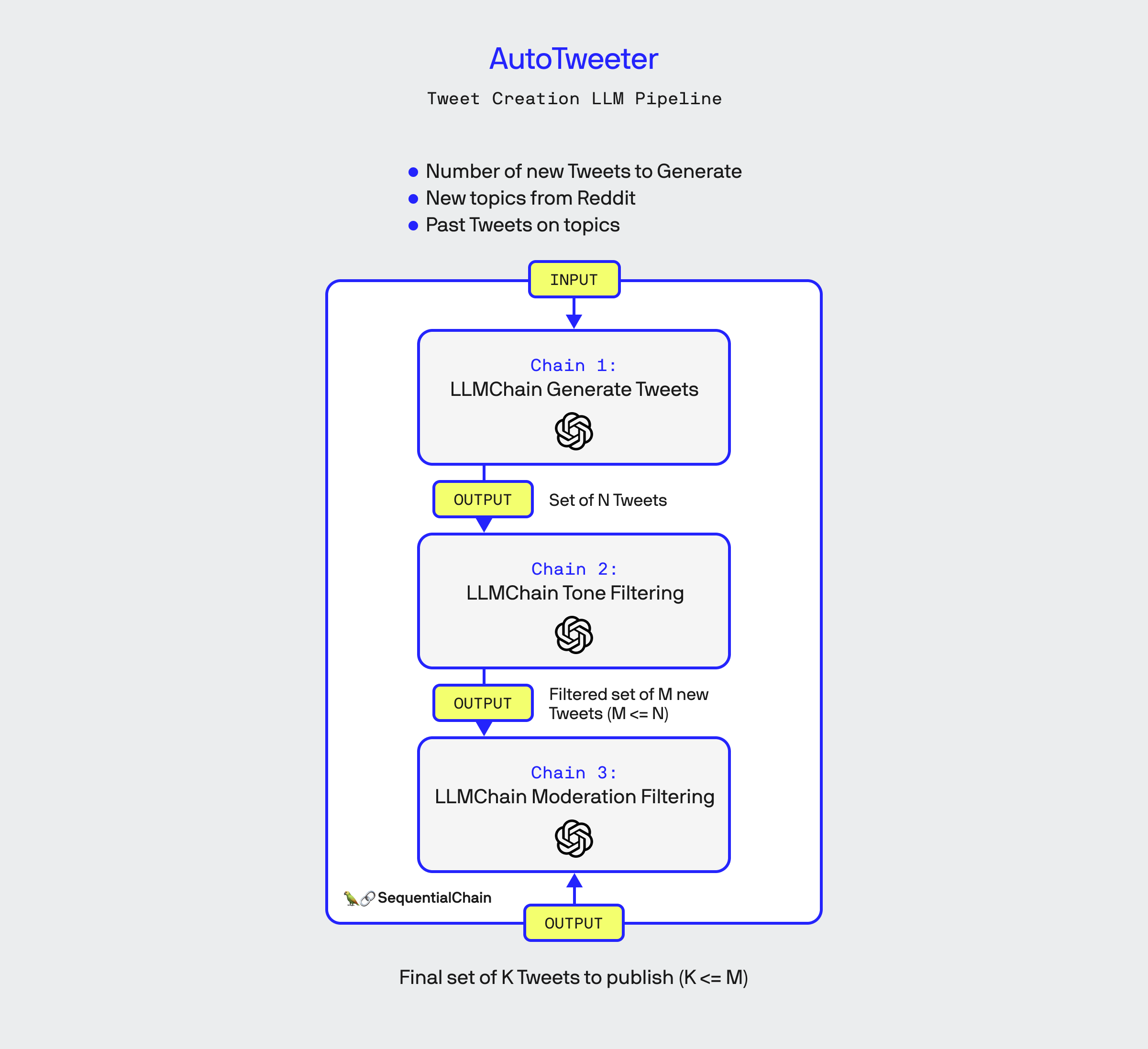

This diagram outlines the sequence of LLMChain objects used to generate a set of new Tweets.

Rather than using a single prompt to create the Tweets, AutoTweeter sequences three individual LLMChain objects together in LangChain’s SequentialChain construct. The first chain generates the Tweets, the second filters them for tone, and the third moderates against inappropriate or insensitive remarks.

Chain 1: Tweet Generation

Purpose: Generate a raw set of new Tweets using a prompt that references an inputted set of headlines from Reddit and provides as context a set of past Tweets made by me on similar topics.

Input:

- The number of new Tweets to generate.

- Set of reference Tweets that were identified in the previous step.

- Set of new topics returned from Reddit to use for generating new Tweets.

Output:

- A set of proposed Tweets.

Code:

    from langchain.chains import LLMChain
    from langchain.prompts import PromptTemplate

    GENERATE_TWEETS_TEMPLATE = """Please generate {number_of_tweets_to_generate} tweets that relate to the topics
    delimited by <> and are similar in tone to the examples provided below.
    Tweets must be funny.
    Tweets must not be serious in tone.
    Tweets must NOT use hashtags.
    Tweets must not end in Emoji characters.
    Format your answer as a list of Tweets, with each individual Tweet on its own line.
    Each line must end with a newline character.
    % START OF EXAMPLE TWEETS TO MIMIC
    {example_tweets}
    % END OF EXAMPLE TWEETS TO MIMIC
    <{topics}>"""

    # Set up the first chain: Tweet generation.
    # `llm` is the GPT-4 model object created elsewhere in the function.
    prompt_template = PromptTemplate(
        input_variables=["number_of_tweets_to_generate", "example_tweets", "topics"],
        template=GENERATE_TWEETS_TEMPLATE,
    )
    generator_chain = LLMChain(llm=llm, prompt=prompt_template, output_key="proposed_tweets")
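To see what actually gets sent to the model, it can help to fill the template in by hand. The sketch below uses plain str.format on a shortened copy of the prompt, which is roughly what I understand a PromptTemplate to do with its default formatting. The render_prompt helper and the sample inputs are hypothetical.

```python
# Shortened copy of the generation prompt, for illustration only.
TEMPLATE = """Please generate {number_of_tweets_to_generate} tweets that relate to the topics
delimited by <> and are similar in tone to the examples provided below.
% START OF EXAMPLE TWEETS TO MIMIC
{example_tweets}
% END OF EXAMPLE TWEETS TO MIMIC
<{topics}>"""


def render_prompt(number_of_tweets: int, example_tweets: list[str], topics: list[str]) -> str:
    """Substitute the three input variables into the template (hypothetical helper)."""
    return TEMPLATE.format(
        number_of_tweets_to_generate=number_of_tweets,
        example_tweets="\n".join(example_tweets),
        topics="\n".join(topics),
    )


prompt = render_prompt(
    5,
    ["Hypothetical past tweet about the weather."],
    ["Hypothetical headline about a summit"],
)
print(prompt)
```

Printing the rendered prompt like this is a cheap way to check that the example Tweets and the topics land between the right delimiters before spending tokens on a real call.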

Chain 2: Tone Filtering

Purpose: Ensure that the set of Tweets generated matches the desired tone and structure of my Tweets, and filter out any that do not.

Input:

- The proposed set of Tweets that were outputted from Chain 1.

Output:

- Set of filtered Tweets confirmed by the LLM to be “funny.”

Code:

    MAKE_IT_FUNNY_TEMPLATE = """
    Listed below are a set of Tweets.
    Pick and return the 3 Tweets from that group that you feel are both the funniest and wittiest.
    Format your answer as a list of Tweets, with each individual Tweet on its own line.
    Each line must end with a newline character.
    {proposed_tweets}
    """

    # Set up the 'make sure it's funny' chain.
    funny_chain_prompt_template = PromptTemplate(
        input_variables=["proposed_tweets"],
        template=MAKE_IT_FUNNY_TEMPLATE,
    )
    funny_chain = LLMChain(llm=llm, prompt=funny_chain_prompt_template, output_key="final_tweets")

Chain 3: Moderation Filtering

Purpose: This final chain filters out inappropriate or insensitive Tweets and removes any duplicative sentence structure.

Input:

- Tweets returned from the output of Chain 2.

Output:

- A final set of moderated Tweets, confirmed to be funny.

Code:

    MODERATE_TWEETS_TEMPLATE = """
    Delimited in <> are a set of proposed Tweets,
    please filter out any tweets which have duplicate structure
    or any Tweets which are offensive or inappropriate.
    Format your answer as a list of Tweets,
    with each individual Tweet on its own line.
    Each line must end with a newline character.
    <{final_tweets}>
    """

    # Set up the moderation chain.
    prompt_template2 = PromptTemplate(
        input_variables=["final_tweets"],
        template=MODERATE_TWEETS_TEMPLATE,
    )
    moderation_chain = LLMChain(llm=llm, prompt=prompt_template2, output_key="moderated_tweets")
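The prompts ask the model to follow a few purely mechanical rules (no hashtags, no trailing emoji, one Tweet per line), and an LLM will not always comply. One option, sketched here as an assumption rather than something AutoTweeter is known to do, is a small deterministic check on the model's output. The helper names, the emoji ranges, and the plain character count are simplifications of my own.

```python
import re

MAX_TWEET_LENGTH = 280  # Twitter's standard limit; a plain character count is an approximation

# Rough emoji detection using common emoji code point ranges (not exhaustive).
EMOJI_AT_END = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]\\s*$")


def parse_tweets(raw_output: str) -> list[str]:
    """Split a one-Tweet-per-line answer into clean strings."""
    tweets = []
    for line in raw_output.splitlines():
        # Drop list markers such as "1. ", "- " or "* " that models often add.
        cleaned = re.sub(r"^\s*(?:\d+[.)]|[-*])\s+", "", line).strip()
        if cleaned:
            tweets.append(cleaned)
    return tweets


def passes_rules(tweet: str) -> bool:
    """Check the mechanical rules stated in the generation prompt."""
    if len(tweet) > MAX_TWEET_LENGTH:
        return False
    if "#" in tweet:  # crude: treats any '#' as a hashtag
        return False
    if EMOJI_AT_END.search(tweet):
        return False
    return True


# Made-up model output, for illustration only.
raw = "1. I blame the moon for this.\n- Great news #blessed\n\nBudget cuts again \U0001F643\n"
kept = [tweet for tweet in parse_tweets(raw) if passes_rules(tweet)]
print(kept)
```

A check like this only covers format. Judging tone and tact is still left to the funny and moderation chains.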

Sequencing and Running all 3 Chains

Once all three chains have been set up, the AutoTweeter logic then uses the LangChain SequentialChain to execute them in order:

    from langchain.chains import SequentialChain

    overall_chain = SequentialChain(
        chains=[generator_chain, funny_chain, moderation_chain],
        input_variables=["number_of_tweets_to_generate", "example_tweets", "topics"],
        output_variables=["moderated_tweets"],
    )

    new_tweets = overall_chain.run({
        "number_of_tweets_to_generate": NUM_TWEETS_TO_GENERATE,
        "example_tweets": sample_tweets,
        "topics": reddit_posts,
    })

The final output of this SequentialChain is a set of Tweets which are then added to the Google Sheet used by the publishing component of AutoTweeter.
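If the chaining feels like magic, it boils down to passing named values from one step to the next. The sketch below imitates that idea in plain Python with stub functions in place of GPT-4 calls. It is a mental model, not LangChain's implementation: run_sequence and the fake_* functions are hypothetical, and only the variable names are borrowed from the chains above.

```python
from typing import Callable

# A stage is (input variable names, output variable name, function to call).
Stage = tuple[list[str], str, Callable[..., str]]


def run_sequence(stages: list[Stage], inputs: dict[str, str]) -> dict[str, str]:
    """Run stages in order, adding each stage's output to the shared variables."""
    variables = dict(inputs)
    for input_keys, output_key, func in stages:
        kwargs = {key: variables[key] for key in input_keys}
        variables[output_key] = func(**kwargs)
    return variables


# Stub "LLM calls" so the sketch runs offline.
def fake_generate(number_of_tweets_to_generate: str, example_tweets: str, topics: str) -> str:
    count = int(number_of_tweets_to_generate)
    return "\n".join(f"joke {i + 1} about {topics}" for i in range(count))


def fake_pick_funniest(proposed_tweets: str) -> str:
    return "\n".join(proposed_tweets.splitlines()[:3])


def fake_moderate(final_tweets: str) -> str:
    return "\n".join(line for line in final_tweets.splitlines() if "joke 2" not in line)


stages = [
    (["number_of_tweets_to_generate", "example_tweets", "topics"], "proposed_tweets", fake_generate),
    (["proposed_tweets"], "final_tweets", fake_pick_funniest),
    (["final_tweets"], "moderated_tweets", fake_moderate),
]
result = run_sequence(
    stages,
    {"number_of_tweets_to_generate": "5", "example_tweets": "an old tweet", "topics": "oil"},
)
print(result["moderated_tweets"])
```

Each stage's output key matches the next stage's input key, which is exactly the contract the three LLMChain objects rely on through their output_key settings.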

4.) Append new Tweets to Google Sheet

A specially crafted Google Sheet stores the Tweets created by AutoTweeter and acts as a simplified CMS. Using Google Sheets means I can easily monitor and edit the content coming out of AutoTweeter before it is actually pushed out to my Twitter feed. Further, the Google Sheets mobile app makes it super easy to edit and add my own good ole’ fashioned human-crafted Tweets to be scheduled alongside those of my new robotic content-creating overlord.
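The post does not show how the Lambda writes to the Sheet, so treat the following as one plausible shape rather than the real thing. It assumes a three-column layout (Tweet text, created timestamp, published flag) and a worksheet object that exposes an append_rows method, as the gspread client's worksheets do. An in-memory fake stands in for the Sheet so the sketch runs without Google credentials.

```python
from datetime import datetime, timezone


def build_rows(tweets: list[str], created_at: datetime) -> list[list[str]]:
    """One row per Tweet: text, creation timestamp, and an empty 'published' cell."""
    stamp = created_at.strftime("%Y-%m-%d %H:%M:%S")
    return [[tweet, stamp, ""] for tweet in tweets]


def append_tweets(worksheet, tweets: list[str]) -> int:
    """Append rows through any object exposing append_rows(list_of_rows)."""
    rows = build_rows(tweets, datetime.now(timezone.utc))
    if rows:
        worksheet.append_rows(rows)
    return len(rows)


class FakeWorksheet:
    """In-memory stand-in for a real worksheet (hypothetical, for the demo only)."""

    def __init__(self) -> None:
        self.rows: list[list[str]] = []

    def append_rows(self, values: list[list[str]]) -> None:
        self.rows.extend(values)


sheet = FakeWorksheet()
added = append_tweets(sheet, ["First generated Tweet", "Second generated Tweet"])
print(added, sheet.rows)
```

Leaving the published cell empty on insert gives the publishing script something simple to filter on, and gives a human editor an obvious place to hold a Tweet back.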

5.) Google Apps Script Randomly Selects and Publishes Tweet to Twitter

The final step of the AutoTweeter process is executed via a Google Apps Script contained within the aforementioned Google Sheet. This script, which executes as a sort of cron job throughout the day, randomly selects any Tweet within the Google Sheet, posts it to Twitter, and then marks it in the Sheet as having been published.
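The real publishing logic lives in Google Apps Script, but the selection step is easy to picture in Python, which I am using here only to stay consistent with the rest of the code in this post. This sketch assumes the script skips rows already marked as published. The row layout and the post callback are hypothetical stand-ins for the Sheet and the Twitter API call.

```python
import random
from typing import Callable, Optional


def pick_unpublished(rows: list[dict], rng: random.Random) -> Optional[int]:
    """Return the index of a randomly chosen unpublished row, or None if none remain."""
    candidates = [i for i, row in enumerate(rows) if not row["published"]]
    if not candidates:
        return None
    return rng.choice(candidates)


def publish_next(
    rows: list[dict],
    post: Callable[[str], None],
    rng: Optional[random.Random] = None,
) -> Optional[str]:
    """Post one random unpublished Tweet and mark its row as published."""
    rng = rng or random.Random()
    index = pick_unpublished(rows, rng)
    if index is None:
        return None
    post(rows[index]["text"])
    rows[index]["published"] = True
    return rows[index]["text"]


# Made-up Sheet contents; `posted.append` stands in for the call to Twitter.
rows = [
    {"text": "Tweet A", "published": False},
    {"text": "Tweet B", "published": True},
    {"text": "Tweet C", "published": False},
]
posted: list[str] = []
while publish_next(rows, posted.append) is not None:
    pass
print(posted)
```

Run on a timer, a loop body like this drains the queue one Tweet at a time and never posts the same row twice.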

Enjoy the Fruits of Generative AI Labor!

The AutoTweeter solution is deployed via the Serverless framework to an AWS Lambda function which executes periodically throughout the day. The full AutoTweeter solution runs independently and has been generating pretty convincing content for my Twitter feed for three weeks. I can safely say the results are outstanding and yet equally depressing.

With only a few hours of programming, I’ve created an autonomous agent that can craft Tweets just like I would, except it’s funnier, wittier, and just plain better at it than I have ever been.

The next goal in the AutoTweeter journey? Commenting, liking, and replying to Twitter posts. Stay tuned.

Once again, the complete source code for AutoTweeter can be found on GitHub.

Bobby Gill