How AI and ML Are Helping Mental Healthcare Apps

In a recent blog post, we discussed how deep learning networks are beginning to change how we approach healthcare, specifically with medical imaging and radiology. Machine learning (ML) and artificial intelligence (AI) are changing how many types of applications function. The capabilities of these technologies are vast, and healthcare is one of the most notable fields where they are used.

While certain health services, such as those mentioned above, benefit from imaging applications (i.e. AIs that have “studied” a multitude of different images), mental health providers are attempting to do the same to increase the effectiveness of treatment methods. We’re going to take a cursory look at ML and AI, then discuss how such tech can benefit mental health as well.

Let’s talk about ML and AI

Many providers are still figuring out how to apply ML and AI to mental health software. There are “known knowns,” and there are things we “don’t know that we don’t know.”

Perhaps you’ve seen the clip of the Joe Rogan Experience with Elon Musk. If not, follow the clip and watch the segment of the show where the two delve into artificial intelligence. They start with a short dive into time management, which is incredibly useful for some of us!

Right after the four-minute mark, Elon speaks about his reservations about the technology. He sees it as ripe for abuse, since humans have a history of misusing powerful tools.

We can use nuclear technology to power communities. But if you think back to the Manhattan Project, it didn’t take long for us to turn our understanding of the atomic nucleus into devastating weapons that demolish whatever they touch rather than uplift a community.

In a parallel sense, he’s right. We can use this technology for the great benefit of humanity or we can build something equivalent to nuclear bombs from said technology. Take a look at the image below:

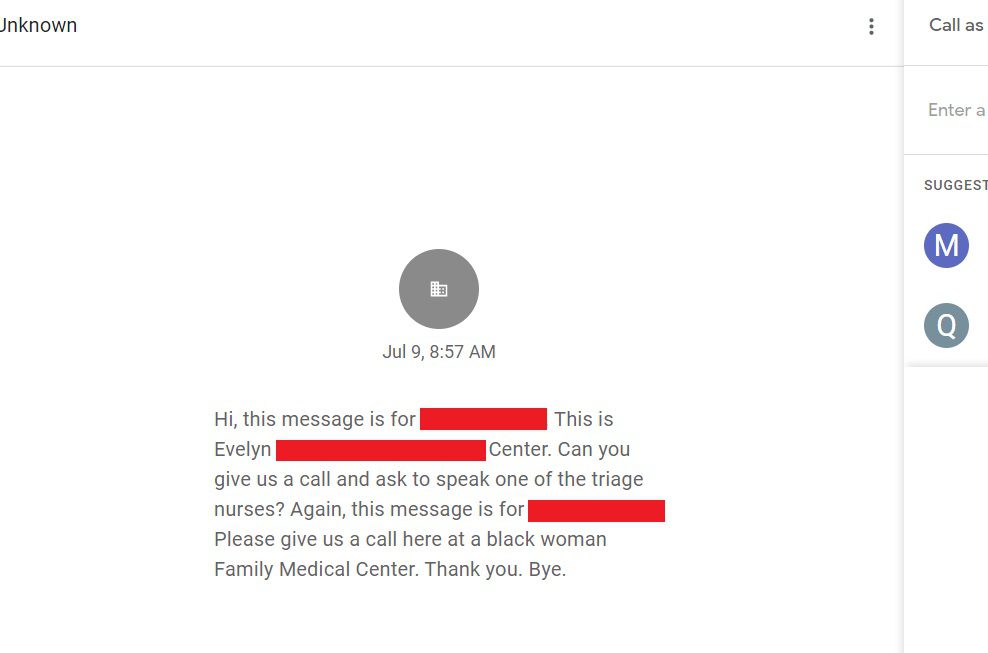

One of our contractors uses Google Voice to field certain calls because it provides free voicemail transcription, which makes reviewing these kinds of communications easy, especially for those who don’t like to answer their phone. As you can see, some of the “meta-information” the system uses to understand dialects and accents made it into the transcription.

It isn’t inherently malicious; however, it’s easy to speculate about any given application’s potential for abuse. Also, the “wrong data” can be learned, just as a human can encode the wrong data when learning a certain fact. In the simplest example, let’s say that as a child you were told a giraffe was a hippo, a hippo was a rhino, and so on. If no one tells you otherwise and you suddenly have to discuss herbivorous mammals in Africa, you’re going to miss your mark.

This is why it’s critical that ML and AI systems have good teachers: what separates a good application of AI from a bad one is the quality and diversity of the data used to train these algorithms.

At Blue Label Labs, we approach the application of AI techniques as a data science problem rather than through a software engineering lens. When we develop a machine learning model for one of our products, we aren’t so much focusing our energy on the nuts and bolts of what makes the algorithm work, but rather on gathering, cleaning and using the right data to train that specific model. Ultimately, the difference between a useful ML-based algorithm and a race-baiting model like the one reflected in the screenshot above comes down to minimizing the error in the output it generates. One can only minimize errors by training ML models correctly.
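To make the point about data diversity concrete, here is a minimal sketch of the kind of check one might run before training. It is not a description of our actual pipeline: the `audit_balance` helper, the `accent` tag, the sample clips and the 10% threshold are all hypothetical and chosen only for illustration.

```python
from collections import Counter


def audit_balance(samples, key, min_share=0.10):
    """Return each group's share of the data and the groups below min_share.

    `samples` is a list of dicts; `key` names the field to group by.
    The threshold is an arbitrary illustration, not a recommended value.
    """
    counts = Counter(sample[key] for sample in samples)
    total = sum(counts.values())
    shares = {group: n / total for group, n in counts.items()}
    underrepresented = sorted(g for g, s in shares.items() if s < min_share)
    return shares, underrepresented


# Hypothetical voicemail training clips, each tagged with a speaker accent.
clips = (
    [{"accent": "us_general"}] * 17
    + [{"accent": "us_southern"}] * 2
    + [{"accent": "indian_english"}] * 1
)

shares, flagged = audit_balance(clips, key="accent")
print(shares)   # share of the dataset per accent
print(flagged)  # accents that fall below the minimum share
```

A report like this does not fix a skewed dataset on its own, but it flags which groups need more examples before a model is trained on them.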

Applications of ML and AI in mental healthcare tech

Mental and physiological care overlap in many respects. Let’s look at a few of the ways AI is helping (or can help) with mental healthcare.

Meditation and relaxation. The word meditation can produce a weird vibe for some people, but remember that it’s not about going full Shaolin monk; it’s simply about clearing your head. Of the mental healthcare apps on the market, Headspace is one of the most popular, and it is beginning to implement AI as part of its backend.

Currently, the app offers services that help you chill. Essentially, they are designed to help you relax during times of anxiety and panic episodes, and when these aspects of mental illness affect sleep, which in turn exacerbates the conditions in a cyclical way. While Headspace hasn’t managed to implement an actual AI backend, machine learning can enhance these applications by retaining and examining data from wearable tech, specifically devices that monitor conditions such as heart rate, blood pressure, oxygen levels and many others. When one is on the verge of a stressful episode of whatever flavor, an app that reports real-time information to the patient’s provider can help reduce its severity by alerting these professionals to the physiological (i.e. somatic) responses that coincide with many mental health episodes.

Schizophrenic, schizoaffective and bipolar episodes. The latter two share very similar manic episodes that define the mood disorder component of the illness, with bipolar disorder technically being broken into three categories based on the severity of an individual’s mania. Schizophrenia is somewhat of an umbrella term for a mental illness that can manifest in multiple ways, from (at the most severe) crossing boundaries with dissociative identity disorder (DID) down to extreme paranoia, what’s known as a “persecution complex,” and delusions of self.

During these episodes, people’s bodies tend to behave differently. For example, periods of mania usually coincide with increased movement speed, heart rate and blood pressure, along with maladaptive behaviors, the most commonly discussed being risky activities such as drug use, unprotected sex and gambling.

By using a system to track an individual’s movement and other physiological signs, an app can alert a provider to such behavioral patterns and allow them to intervene. While this has yet to be done, there are providers and entrepreneurs in healthcare who are working with data compliance legislation (namely HIPAA) to figure out a baseline for such healthcare software, which would allow them to securely collect data and even reach out to individuals who are in, or about to experience, a mental healthcare crisis.
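As a rough illustration of how such an alert might work, the sketch below compares recent wearable readings against a person’s own baseline and flags a sustained deviation. Everything here is an assumption for illustration: the `should_alert` function, the z-score threshold, the three-reading rule and the sample heart rates are hypothetical, no real wearable API is used, and nothing in it is clinically validated.

```python
from statistics import mean, stdev


def should_alert(baseline, recent, z_threshold=3.0, min_consecutive=3):
    """Return True if the latest readings all sit far from the personal baseline.

    A reading counts as "far" when its z-score against the baseline exceeds
    z_threshold in either direction. Requiring several consecutive readings
    avoids alerting on a single noisy sample. Both values are illustrative.
    """
    if len(baseline) < 2 or len(recent) < min_consecutive:
        return False  # not enough data to judge
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        return False  # a flat baseline gives no usable scale
    tail = recent[-min_consecutive:]
    return all(abs(x - mu) / sigma > z_threshold for x in tail)


# Hypothetical resting heart rates (beats per minute) from a wearable.
resting_hr = [62, 64, 61, 63, 65, 62, 64, 63]

print(should_alert(resting_hr, [63, 66, 64]))  # readings close to baseline
print(should_alert(resting_hr, [88, 92, 95]))  # sustained elevation
```

Comparing each person against their own history, rather than a population average, is one simple design choice here; a production system would need far more than this, including secure data handling and clinician-approved thresholds.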

Depression and suicide prevention. In much the same way as these apps can help with schizophrenia and mood disorders, they can be used to detect periods of depression, which is particularly useful for those who have attempted or might attempt suicide. Episodes in major depressive disorder (MDD) can often be detected by changes in a person’s physical status.

Also, medications like SSRIs, SNRIs, tricyclics, MAOIs and others work differently for every individual, depending on how damaged or non-functional their neuroreceptors might be as well as on comorbid conditions. Healthcare providers can use these apps to monitor changes and interact with patients between visits to help get them back on track more quickly, rather than simply assessing an individual based on data collected during an office visit.

Blue Label Labs can build your mental healthcare app

We understand the growing application of software for assisting in diagnosing and treating mental healthcare issues. Our process involves thoroughly assessing your needs during a design sprint, then building software that adheres to every data compliance agency’s conditions. We’ve used this process to design various healthcare apps, and we’ll gladly use the same process to build the framework for your vision.

Get in touch with us to learn about our process and how we can help infuse the power of AI into your app for mental healthcare.

Natasha Singh

Senior iOS Developer at Blue Label Labs