How To Set Up Automatic Audit Logging in Postgres Using Triggers and Trigger Functions

An important, but often overlooked, concept when building an app or new software service is that of auditing.

Auditing in this context refers to maintaining a reliable and complete log of all data changes that happen in the system, so that at any point in the future you can reliably answer why a certain piece of data changed, who changed it, and when.

While your uncle’s social media network for left-handed ventriloquists might not have a pressing need for an audit log, something like an on-demand mobile marketplace that deals with financial transactions or a medication information system managing sensitive patient information quite often needs an audit trail for both compliance and normal operational reporting.

In these situations, it is essential to track at the row-level each change that is made in the database along with the requisite answers to the who/what/when of those modifications.

At first glance, implementing such an audit log might sound like a whole bunch of extra work that your already overstretched development team cannot handle. In this post I will show you why that notion is wrong, using Postgres triggers and trigger functions.

Approach: Audit Tables & Trigger Functions

At a high level, what we are going to do is define a new schema and within it a table (logged_actions) that will be used to collect and log all row-level operations in the system.

From there, we will create a trigger on every table in our database that fires after every insert, update, or delete made to that table.

This trigger will collect information about the change that was made along with the identity of the database user making the change and then write that data directly into the logged_actions table.

Once the audit table and trigger function are set up, the only thing that needs to be done on an ongoing basis is to add a trigger to each table newly added to the system, which invokes the trigger function after every insert, update, or delete.

Besides being super simple to set up, the great benefit of implementing auditing through the use of Postgres triggers is that it operates completely independently, without any requirement for the application developers to modify their queries and logic in order for the audit trail to be kept.

Thus, every row-level change made to an audited table is logged, and application code cannot skip the audit trail by accident. Keep in mind that a table owner or superuser can still disable a trigger, so trigger management and access to the audit schema should be restricted.

Audit Table Schema

At the heart of our auditing setup is the audit table which will serve as our log of all data operations done in our database. For each row-level change made in the database, there will be an entry added to the audit table. Some notable fields in our audit table include:

- event_id: A unique identifier for each audit entry.

- schema_name: The schema of the audited change.

- table_name: The name of the table in which a row was changed

- session_user_name: The database username which performed the audited operation.

- action_tstamp_tx: The timestamp of the transaction for the operation.

- transaction_id: The transaction id that performed the change.

- application_name: The application name that was set at the time of the operation.

- client_addr: The IP address of the client which performed the operation.

- client_port: The remote port through which the operation was performed.

- client_query: The raw top-level query which was executed to cause the operation.

- action: The operation performed on the row: I = insert, D = delete, U = update, T = truncate.

- row_data: The row contents, stored as an hstore. For an insert, this is the new row. For an update or delete, this is the row as it existed prior to the operation.

- changed_fields: For an update, the columns whose values changed, together with their new values. NULL for inserts and deletes.
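To make the field list more concrete, here is a small sketch of the kind of question these columns can answer. It assumes the audit.logged_actions table defined later in this post already holds data, and that an audited table named public."user" with an email column exists; the table and column are only illustrative.

```sql
-- Who changed the email column on public."user", and when?
-- row_data and changed_fields are hstore values, so the hstore
-- operators -> (get value) and ? (contains key) are available.
SELECT event_id,
       action_tstamp_tx,
       session_user_name,
       row_data -> 'email'       AS old_email,  -- value before the update
       changed_fields -> 'email' AS new_email   -- value after the update
FROM audit.logged_actions
WHERE schema_name = 'public'
  AND table_name = 'user'
  AND action = 'U'
  AND changed_fields ? 'email'
ORDER BY event_id;
```

Because changed_fields only holds the columns that actually changed, filtering on its keys is a cheap way to narrow the log down to one attribute.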

Steps to Setting Up an Automatic Audit Log

- In your existing database, create a new schema and name it “audit”. This way we will keep all the data related to the audit in a separate namespace from the operational data of your app.
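A minimal sketch of this step follows. The hstore extension is not mentioned in the steps themselves, but the table and trigger function below use the hstore type, so I am assuming it has to be installed first. Installing it into the public schema matches the search_path that the trigger function sets.

```sql
-- Separate namespace for everything audit-related.
CREATE SCHEMA IF NOT EXISTS audit;

-- Assumption: hstore is not yet installed in this database.
-- The row_data and changed_fields columns are hstore, and the trigger
-- function resolves the type through "pg_catalog, public".
CREATE EXTENSION IF NOT EXISTS hstore WITH SCHEMA public;
```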

- Within the new audit schema, create a new table named “logged_actions”.

    CREATE SEQUENCE audit.logged_actions_event_id_seq
        INCREMENT 1
        START 1
        MINVALUE 1
        MAXVALUE 9223372036854775807
        CACHE 1;

    CREATE TABLE IF NOT EXISTS audit.logged_actions
    (
        event_id bigint NOT NULL DEFAULT nextval('audit.logged_actions_event_id_seq'::regclass),
        schema_name text COLLATE pg_catalog."default" NOT NULL,
        table_name text COLLATE pg_catalog."default" NOT NULL,
        relid oid NOT NULL,
        session_user_name text COLLATE pg_catalog."default",
        action_tstamp_tx timestamp with time zone NOT NULL,
        action_tstamp_stm timestamp with time zone NOT NULL,
        action_tstamp_clk timestamp with time zone NOT NULL,
        transaction_id bigint,
        application_name text COLLATE pg_catalog."default",
        client_addr inet,
        client_port integer,
        client_query text COLLATE pg_catalog."default",
        action text COLLATE pg_catalog."default" NOT NULL,
        row_data hstore,
        changed_fields hstore,
        statement_only boolean NOT NULL,
        CONSTRAINT logged_actions_pkey PRIMARY KEY (event_id),
        CONSTRAINT logged_actions_action_check CHECK (action = ANY (ARRAY['I'::text, 'D'::text, 'U'::text, 'T'::text]))
    );

    CREATE INDEX logged_actions_action_idx
        ON audit.logged_actions USING btree
        (action COLLATE pg_catalog."default" ASC NULLS LAST)
        TABLESPACE pg_default;

    CREATE INDEX logged_actions_action_tstamp_tx_stm_idx
        ON audit.logged_actions USING btree
        (action_tstamp_stm ASC NULLS LAST)
        TABLESPACE pg_default;

    CREATE INDEX logged_actions_relid_idx
        ON audit.logged_actions USING btree
        (relid ASC NULLS LAST)
        TABLESPACE pg_default;

- Within the audit schema, now create a new trigger function called “create_log_on_modify”. This is the heart of our auditing setup, as it will fire after every row-level operation and pull out the necessary parameters to populate a row in the logged_actions table.

    CREATE FUNCTION audit.create_log_on_modify()
        RETURNS trigger
        LANGUAGE 'plpgsql'
        COST 100
        VOLATILE NOT LEAKPROOF SECURITY DEFINER
        SET search_path=pg_catalog, public
    AS $BODY$
    DECLARE
        audit_row audit.logged_actions;
        include_values boolean;
        log_diffs boolean;
        h_old hstore;
        h_new hstore;
        excluded_cols text[] = ARRAY[]::text[];
    BEGIN
        IF TG_WHEN <> 'AFTER' THEN
            RAISE EXCEPTION 'audit.create_log_on_modify() may only run as an AFTER trigger';
        END IF;

        audit_row = ROW(
            nextval('audit.logged_actions_event_id_seq'), -- event_id
            TG_TABLE_SCHEMA::text,                        -- schema_name
            TG_TABLE_NAME::text,                          -- table_name
            TG_RELID,                                     -- relation OID for much quicker searches
            session_user::text,                           -- session_user_name
            current_timestamp,                            -- action_tstamp_tx
            statement_timestamp(),                        -- action_tstamp_stm
            clock_timestamp(),                            -- action_tstamp_clk
            txid_current(),                               -- transaction ID
            current_setting('application_name'),          -- client application
            inet_client_addr(),                           -- client_addr
            inet_client_port(),                           -- client_port
            current_query(),                              -- top-level query or queries (if multistatement) from client
            substring(TG_OP,1,1),                         -- action
            NULL, NULL,                                   -- row_data, changed_fields
            'f'                                           -- statement_only
        );

        -- Trigger argument 0: pass 'false' to leave the client query text out of the log.
        IF NOT TG_ARGV[0]::boolean IS DISTINCT FROM 'f'::boolean THEN
            audit_row.client_query = NULL;
        END IF;

        -- Trigger argument 1 (optional): array of column names to exclude from the log.
        IF TG_ARGV[1] IS NOT NULL THEN
            excluded_cols = TG_ARGV[1]::text[];
        END IF;

        IF (TG_OP = 'UPDATE' AND TG_LEVEL = 'ROW') THEN
            audit_row.row_data = hstore(OLD.*) - excluded_cols;
            audit_row.changed_fields = (hstore(NEW.*) - audit_row.row_data) - excluded_cols;
            IF audit_row.changed_fields = hstore('') THEN
                -- All changed fields are ignored. Skip this update.
                RETURN NULL;
            END IF;
        ELSIF (TG_OP = 'DELETE' AND TG_LEVEL = 'ROW') THEN
            audit_row.row_data = hstore(OLD.*) - excluded_cols;
        ELSIF (TG_OP = 'INSERT' AND TG_LEVEL = 'ROW') THEN
            audit_row.row_data = hstore(NEW.*) - excluded_cols;
        ELSIF (TG_LEVEL = 'STATEMENT' AND TG_OP IN ('INSERT','UPDATE','DELETE','TRUNCATE')) THEN
            audit_row.statement_only = 't';
        ELSE
            RAISE EXCEPTION '[audit.create_log_on_modify] - Trigger func added as trigger for unhandled case: %, %',TG_OP, TG_LEVEL;
            RETURN NULL;
        END IF;

        INSERT INTO audit.logged_actions VALUES (audit_row.*);
        RETURN NULL;
    END;
    $BODY$;

- Now it’s time to link the trigger function created in the previous step to triggers defined on the main tables within your database. In our example, we have a user table with 3 fields (ID, Name, Email). To create a trigger that fires the “create_log_on_modify” trigger function from step 3, we use the following trigger definition (EXECUTE FUNCTION requires PostgreSQL 11 or later; on older versions, write EXECUTE PROCEDURE instead):

    CREATE TRIGGER audit_trigger_row
        AFTER INSERT OR DELETE OR UPDATE
        ON public."user"
        FOR EACH ROW
        EXECUTE FUNCTION audit.create_log_on_modify('true');
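The trigger function also reads an optional second argument and handles statement-level triggers, neither of which the definition above uses. As a hedged sketch based on reading the function body: the first argument controls whether the client query text is stored, the second is an array of column names to leave out of row_data and changed_fields, and a FOR EACH STATEMENT trigger records only that a statement ran. The trigger names and the choice of the email column below are illustrative.

```sql
-- Variant of the row trigger that keeps the email column out of the log.
-- Use it instead of (not in addition to) audit_trigger_row;
-- the name audit_trigger_row_no_email is hypothetical.
CREATE TRIGGER audit_trigger_row_no_email
    AFTER INSERT OR DELETE OR UPDATE
    ON public."user"
    FOR EACH ROW
    EXECUTE FUNCTION audit.create_log_on_modify('true', '{email}');

-- Row-level triggers do not fire on TRUNCATE, so a statement-level
-- trigger is needed to record it. The function logs it with
-- action = 'T' and statement_only = true, without any row data.
CREATE TRIGGER audit_trigger_stm
    AFTER TRUNCATE
    ON public."user"
    FOR EACH STATEMENT
    EXECUTE FUNCTION audit.create_log_on_modify('true');
```

With an exclusion list in place, an update that touches only excluded columns produces no audit row at all, because the function returns early when changed_fields ends up empty.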

- You will need to create the trigger on each table that you’d like to include within the scope of the audit log, so rinse and repeat for all tables within your database.
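Rather than writing the trigger by hand for each table, the repetition can be scripted. The following is a sketch, assuming every ordinary table in the public schema should be audited and that the trigger name audit_trigger_row is free to be dropped and recreated; adjust the schema filter to your own layout.

```sql
DO $$
DECLARE
    t record;
BEGIN
    FOR t IN
        SELECT schemaname, tablename
        FROM pg_catalog.pg_tables
        WHERE schemaname = 'public'
    LOOP
        -- Drop first so the block can be re-run safely.
        EXECUTE format(
            'DROP TRIGGER IF EXISTS audit_trigger_row ON %I.%I',
            t.schemaname, t.tablename);

        -- %I quotes identifiers, which matters for names like "user".
        EXECUTE format(
            'CREATE TRIGGER audit_trigger_row
                 AFTER INSERT OR DELETE OR UPDATE ON %I.%I
                 FOR EACH ROW
                 EXECUTE FUNCTION audit.create_log_on_modify(''true'')',
            t.schemaname, t.tablename);
    END LOOP;
END;
$$;
```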

Testing the Audit Log

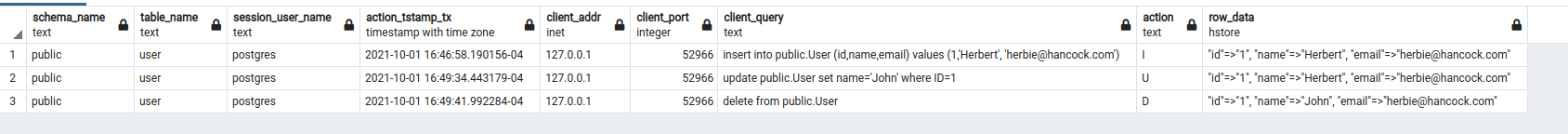

Now, to test that the audit log is working, we will use a very basic application table named “user” with an ID, Name and Email field. Starting from an empty table, we perform the following steps:

1.) Insert a row of data into the user table (ID: 1, Name: Herbert, Email: herbie@hancock.com):

insert into public."user" (id, name, email) values (1, 'Herbert', 'herbie@hancock.com');

2.) Now, I modify the name to “John”:

update public."user" set name = 'John' where id = 1;

3.) Finally, I delete the record from the user table:

delete from public."user" where id = 1;

4.) Now, for the big reveal, I query the audit.logged_actions table and here is our fully formed audit log:

In the screenshot above, you see 3 rows inserted into the logged_actions table: the first representing the creation of the record, the second the modification of the name, and the final one the deletion.
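A query along these lines should produce that listing. It is a sketch that selects only a subset of the columns, and the filter assumes the test table is public."user".

```sql
SELECT event_id,
       action,              -- 'I', then 'U', then 'D' for the three steps
       session_user_name,
       action_tstamp_tx,
       row_data,            -- new row for the insert, old row otherwise
       changed_fields       -- only populated for the update
FROM audit.logged_actions
WHERE schema_name = 'public'
  AND table_name = 'user'
ORDER BY event_id;
```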

Limitations

While the above auditing solution is very easy to implement, maintain and report on, there are some downsides of this approach to be aware of:

- For systems at scale with a high number of write operations, this single table will not scale. You will quickly find yourself with a massive table that is almost unworkable through traditional RDBMS queries. While you can still use the same basic approach, at scale you will need to create a data pipeline to remove and transfer data from this log to a data lake or data warehouse.

- This scheme requires that every new table added to the system have a trigger defined on it in order for it to work. So to properly audit all tables in the system, you will need to make sure that your database migration scripts always include the trigger definition when new tables are added.
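Both limitations can be softened with a little SQL. The sketch below is only an illustration: the first query lists tables in the public schema that have no trigger calling the audit function, and the second moves old entries into an archive table. The archive table name and the 90-day cutoff are assumptions, not part of the original setup.

```sql
-- 1. Find ordinary tables in public that are not yet audited.
SELECT c.oid::regclass AS unaudited_table
FROM pg_catalog.pg_class c
JOIN pg_catalog.pg_namespace n ON n.oid = c.relnamespace
WHERE c.relkind = 'r'
  AND n.nspname = 'public'
  AND NOT EXISTS (
      SELECT 1
      FROM pg_catalog.pg_trigger t
      WHERE t.tgrelid = c.oid
        AND NOT t.tgisinternal
        AND t.tgfoid = 'audit.create_log_on_modify()'::regprocedure
  );

-- 2. Move entries older than 90 days into a hypothetical archive table.
CREATE TABLE IF NOT EXISTS audit.logged_actions_archive
    (LIKE audit.logged_actions);

WITH moved AS (
    DELETE FROM audit.logged_actions
    WHERE action_tstamp_tx < now() - interval '90 days'
    RETURNING *
)
INSERT INTO audit.logged_actions_archive
SELECT * FROM moved;
```

The first query could run as a check in a migration pipeline, and the second as a scheduled job, with the archive table then exported to whatever warehouse you use.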

Acknowledgments

Special thanks to the PostgreSQL Wiki and our friends at Eager.io for inspiration on this approach.

Bobby Gill