5 Technical & Ethical Challenges of Using AI for Business

Using AI and ML demands responsibility – these robust, complex systems can easily cause as much harm as good when misused.

Building with AI means navigating both technical and ethical challenges that sometimes (but not always) go hand-in-hand. Here, we’re going to discuss a handful of the most pressing constraints of using AI at the moment.

An overview of technical & ethical constraints in AI

While “the sky is the limit,” using AI and ML is a lot like launching a rocket into space: under the wrong conditions, things can quickly end in catastrophe.

It’s complex, costly, and requires a lot of oversight. Hallucinations, for example, are just one of several problems inherent to powerful AI.

Ethical concerns can also arise, which shouldn’t be surprising given headlines like “Scientists Use GPT AI to Passively Read People’s Thoughts in Breakthrough” currently circulating on the web.

In 2019, teams in both Russia and Japan successfully recreated images captured from brain waves during an MRI. | Source: Grigory Rashkov/SciTechDaily

Ethics and technical issues often intersect, but problems may fall into one or the other category.

Technical limitations of AI in business

Technical problems vary, but most arise from the complexity of these systems and the challenge of performing at scale. Systematically working with layers of logic that can perform advanced functions means a vast number of moving parts.

Data quality and quantity create a degree of unpredictability

One of the most glaring limitations of AI in business comes from the amount and variety of data required. Training a language model like ChatGPT or Bard requires an ocean of data and ongoing training to produce correct output for the topics it covers, plus the copious syntax configurations needed for conversational output in any given language.

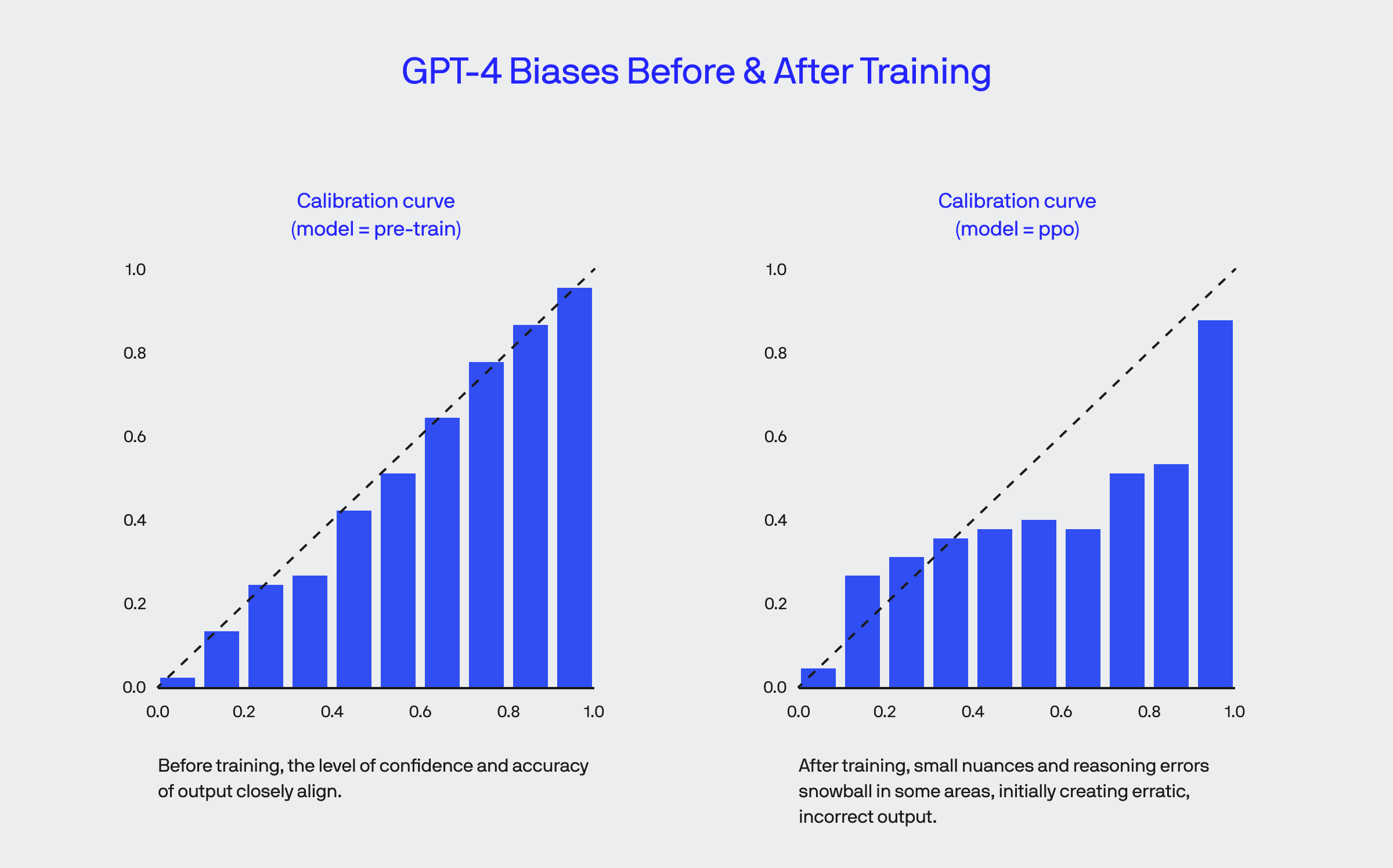

In our blog on the GPT LLM, we repurposed one of their charts, which shows that even after the system is trained with new data, small nuances introduced to its existing knowledge can throw it off to the point where it becomes more confident (and wrong) in most areas during this time.

It isn’t easy to explain because of the complexity

Often, multiple layers of fuzzy logic (i.e., a range of acceptable conclusions) are responsible for creating the output you see from any given system. When you dig into the computer science side, the mathematical and programming functions involved are extensive and complicated.

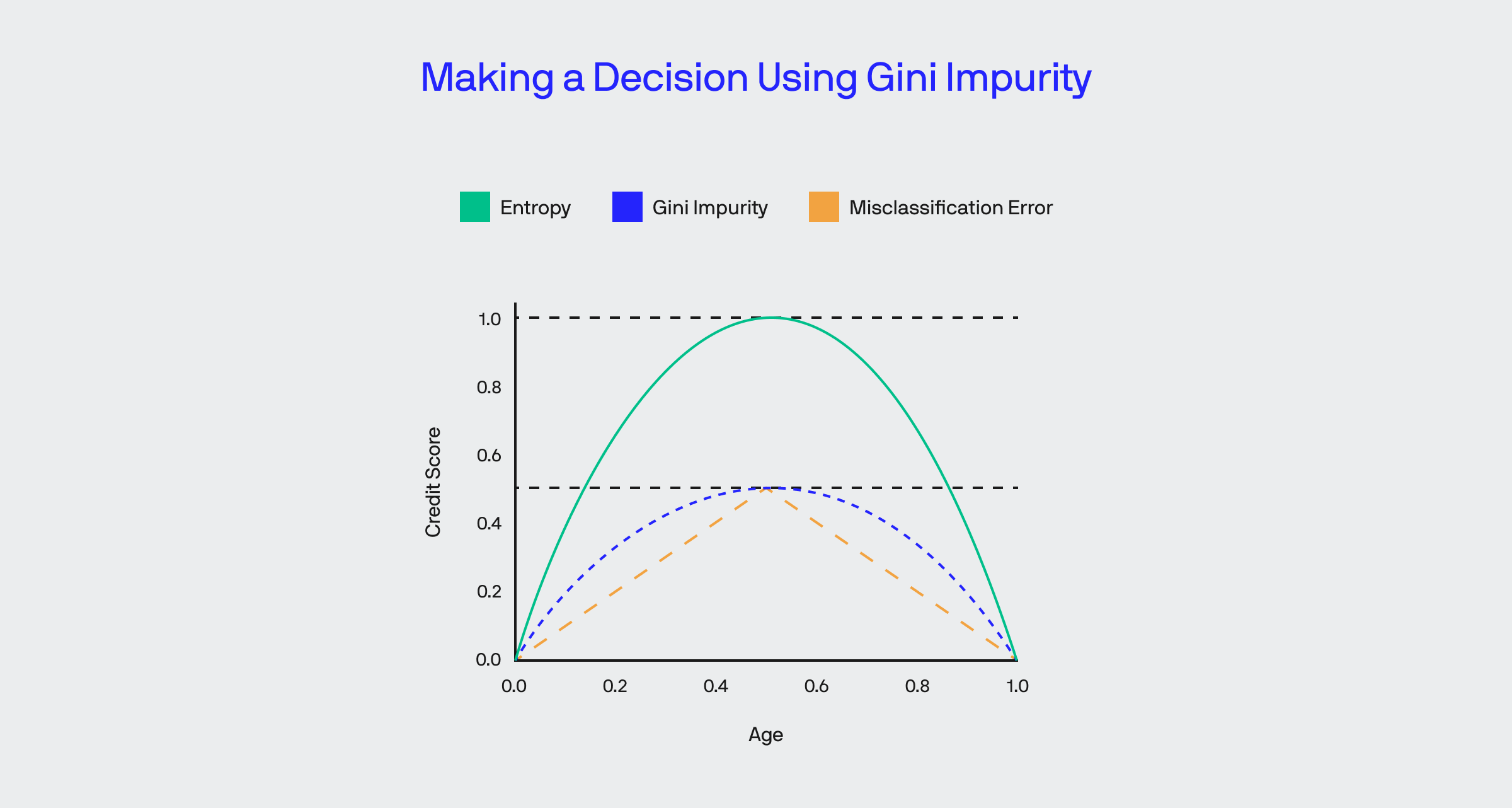

Measures like Gini impurity score how mixed the outcomes are within a group of data. A decision tree uses that score to judge how well a feature, for example age or credit score, separates the records, which is one of several decision-making functions used when determining someone’s likelihood to default on a loan.
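To make the Gini impurity idea more concrete, here is a minimal sketch in Python. The applicant records, the `defaulted` field, and the thresholds are all made up for illustration, and this is only one way a decision tree might score a candidate split, not how any particular lender or library does it.

```python
from collections import Counter


def gini_impurity(labels):
    """Return 1 - sum(p_k^2), where p_k is the share of each class in labels."""
    total = len(labels)
    if total == 0:
        return 0.0
    counts = Counter(labels)
    return 1.0 - sum((n / total) ** 2 for n in counts.values())


def split_impurity(rows, feature, threshold):
    """Weighted Gini impurity of the two groups made by feature <= threshold."""
    left = [r["defaulted"] for r in rows if r[feature] <= threshold]
    right = [r["defaulted"] for r in rows if r[feature] > threshold]
    total = len(rows)
    return (len(left) / total) * gini_impurity(left) + (
        len(right) / total
    ) * gini_impurity(right)


# Hypothetical applicants (invented data, for illustration only).
applicants = [
    {"age": 22, "credit_score": 580, "defaulted": True},
    {"age": 25, "credit_score": 720, "defaulted": False},
    {"age": 41, "credit_score": 600, "defaulted": True},
    {"age": 47, "credit_score": 750, "defaulted": False},
    {"age": 33, "credit_score": 690, "defaulted": False},
    {"age": 58, "credit_score": 610, "defaulted": True},
]

# Lower is better: 0.0 means each side of the split holds a single outcome.
age_split = split_impurity(applicants, "age", 35)
credit_split = split_impurity(applicants, "credit_score", 650)
print(f"age <= 35: {age_split:.3f}")
print(f"credit_score <= 650: {credit_split:.3f}")
```

In this toy data set, splitting on credit score separates defaulters from non-defaulters cleanly, while splitting on age leaves both groups mixed. A tree would therefore prefer the credit score split at this step.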

A constant stream of advanced computations coalesces to produce the output you see, whether a page of written content in ChatGPT or an image made by DALL-E. When output results from layers of data ranges aligning at any given moment, describing exactly what happens and pinpointing the individual elements is time-consuming and complex.

As such, new problems regularly arise simply because these systems have so many moving parts.

While AI is also used to double-check and review other AI, you can probably see why this is an issue. Human intervention is required to tweak these systems regularly – quite simply, the bigger the task, the more time-consuming this becomes.

Change and scaling require consistency, which is in short supply

Developer perspectives inherently become instilled in some processes, as do viewpoints from training data.

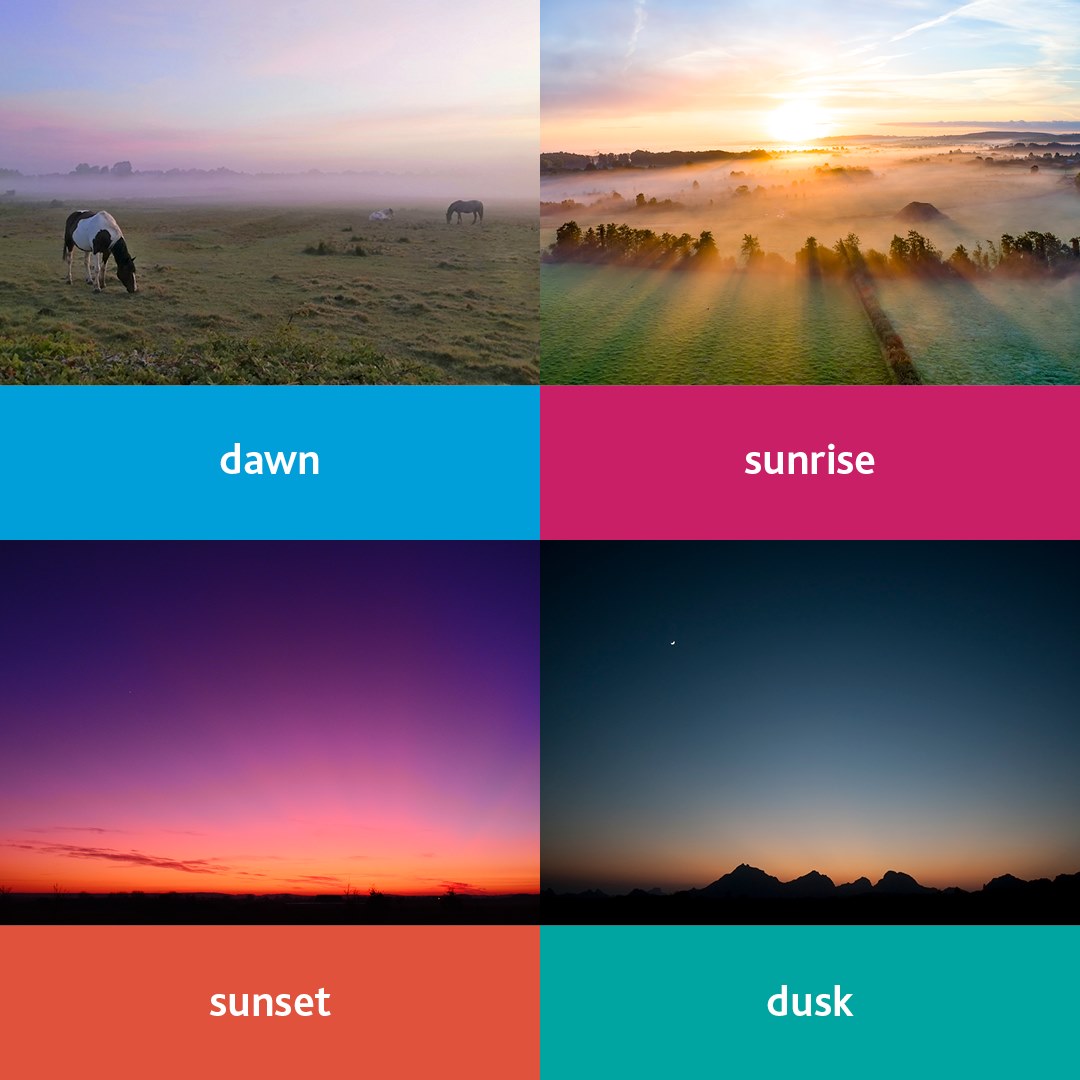

Hard, constant facts are usually simple to cement into the system, but little nuances introduced over time change behavior. For example, let’s say you want to build a computer-vision-based system that identifies and logs recognizable shapes of the clouds in the sky.

If set to find clouds against a blue sky, you’d miss clouds at different times of the day and in different weather conditions. | Source: Learning English with Cambridge on Facebook

Early in training an AI, it’s much like teaching a child the basics of how things work. Training data might start off with a basic knowledge that the sky is:

- Blue

- Above you

Over time, the system will learn that the sky is:

- Different colors at different times

- Only above you while on the ground

- Composed of various gases

- Occupies the same general shape and volume around the planet

- Etc.

Sometimes, new knowledge “fits” perfectly with existing knowledge, but this isn’t always true. Remember, when we as humans learn something, we typically learn relative to what we already know, whereas an AI relies on how it was taught to learn.

Learning some processes incorrectly with both people and AI will typically derail anything contingent on said knowledge. But with people, we can (sometimes) get a hunch when we’re doing something wrong and use our cognitive functions to help us figure out a problem.

As time progresses, people supporting such projects change, influencing how new information is integrated, the quality of the data, training speed, and much more.

Ethical challenges in AI development

Complete, reliable automation commands a particular appeal for businesses, but routine human intervention is currently required in every layer of AI development. Without people working to adjust and direct learning, the following ethical problems can surface.

A skill gap can cause problems to spiral out of control

Supporting AI requires a comprehensive technical understanding of these products and a consciousness of appropriate use.

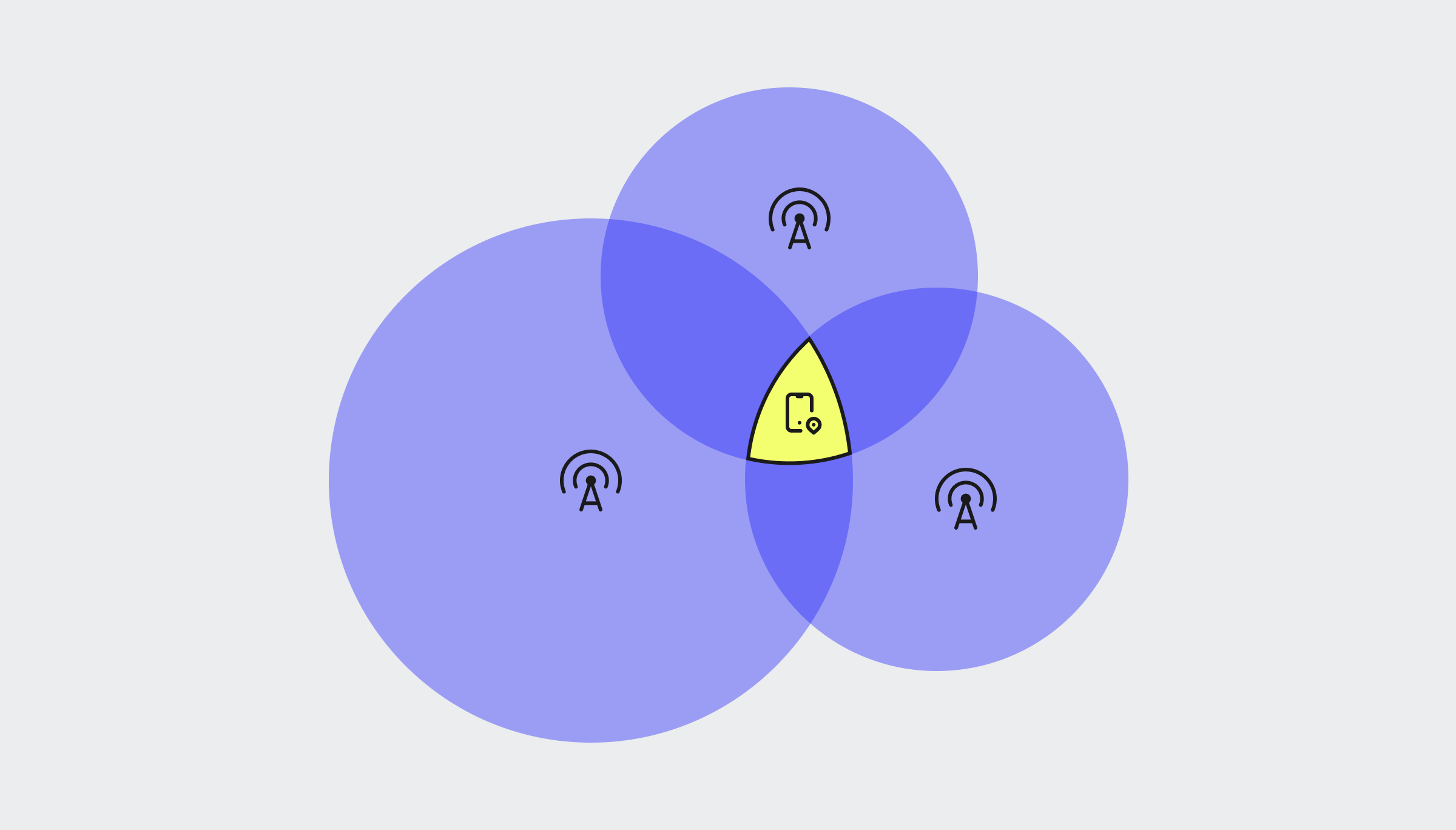

You don’t send a firefighter into a burning building without training and equipment, and AI is much the same. One reason is privacy: similar to how a position is calculated from a cellular signal, multiple points of data on any one person or entity can be revealing.

Calculations using the distance from each tower allow a network to track (i.e., locate) a cellular radio.
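The distance-based calculation can be sketched in a few lines of Python. This is a simplified, idealized example: the tower coordinates and the `trilaterate` helper are hypothetical, it works in a flat 2D plane, and it assumes exact distances, whereas real networks work from noisy timing and signal-strength estimates.

```python
import math


def trilaterate(towers, distances):
    """Locate a point in 2D from three known positions and exact distances.

    Subtracting the first circle equation from the other two leaves a pair
    of linear equations in x and y, solved here with Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = towers
    r1, r2, r3 = distances

    a1, b1 = 2 * (x2 - x1), 2 * (y2 - y1)
    c1 = r1**2 - r2**2 - x1**2 + x2**2 - y1**2 + y2**2
    a2, b2 = 2 * (x3 - x1), 2 * (y3 - y1)
    c2 = r1**2 - r3**2 - x1**2 + x3**2 - y1**2 + y3**2

    det = a1 * b2 - a2 * b1
    if det == 0:
        raise ValueError("towers are collinear, so the position is ambiguous")

    x = (c1 * b2 - c2 * b1) / det
    y = (a1 * c2 - a2 * c1) / det
    return x, y


# Hypothetical tower positions (in km) and a phone at (3, 4).
towers = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
phone = (3.0, 4.0)
distances = [math.dist(phone, tower) for tower in towers]

estimate = trilaterate(towers, distances)
print(estimate)
```

No single distance reveals where the phone is, but three of them together pin it down. The same pattern applies to data about a person: each data point may look harmless until it is combined with others.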

AI technology providers and the developers who use these systems (and in some cases, the end user as well) can potentially use certain associations to identify individuals in addition to known, well-defined personally identifiable information (PII).

Like browser fingerprinting, multiple parameters can easily create associations that identify a person or device. Unchecked methods pose a risk to the privacy of users and bystanders if used maliciously or when unwittingly revealing data.
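As a rough illustration of how individually common attributes can combine into something identifying, consider the sketch below. The visitor records, field names, and helper functions are invented for this example, and real fingerprinting techniques draw on many more signals than shown here.

```python
import hashlib
import json
from collections import Counter


def fingerprint(record, fields):
    """Hash the selected fields of a record into a short identifier."""
    subset = {field: record[field] for field in fields}
    canonical = json.dumps(subset, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def unique_share(records, fields):
    """Fraction of records whose fingerprint is shared with no other record."""
    prints = [fingerprint(r, fields) for r in records]
    counts = Counter(prints)
    return sum(1 for p in prints if counts[p] == 1) / len(records)


# Hypothetical visitors (invented data, for illustration only).
visitors = [
    {"timezone": "UTC-5", "language": "en-US", "screen": "1920x1080", "fonts": 212},
    {"timezone": "UTC-5", "language": "en-US", "screen": "2560x1440", "fonts": 212},
    {"timezone": "UTC-5", "language": "es-MX", "screen": "1920x1080", "fonts": 198},
    {"timezone": "UTC+1", "language": "en-US", "screen": "1920x1080", "fonts": 198},
]

for fields in (
    ["timezone"],
    ["timezone", "language"],
    ["timezone", "language", "screen", "fonts"],
):
    print(fields, unique_share(visitors, fields))
```

In this toy data, the share of uniquely identifiable visitors grows as fields are added, even though no field is personally identifiable on its own. Checking how unique a combination of fields is before storing or sharing it is one simple way to spot this kind of risk early.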

Over-reliance on AI is a slippery slope

It’s easy to seek what’s easy. Almost all systems follow the path of least resistance, like electricity through a circuit or a plane or boat gliding through its respective medium.

As humans, we normally become more efficient over time. Some argue it’s laziness, but it’s not hard to look back at human history and see that everything around us is a result of layer upon layer of efficiency improvements.

Though AI can’t intrinsically develop a habit itself, unless designed to, AI can become a habit for us.

- “Is this right? Let’s just do what the AI thinks is best.”

- “Communicating sucks. I’ll just have ChatGPT talk to my mom for me by collating all my calendar events and redacted text messages.”

- “Fighting crime is hard. Let’s just tell our AI police what’s good and bad. It should be fine.”

Surgery at this scale with human hands is impossible, but some solutions like this can do tasks we could never hope to do otherwise.

Eventually, AI will likely be used in conjunction with some immutable programming methodology to create a swarm of sentient nanobots that self-replicate using raw carbon to wipe out life as we know it.

Until then, we must take things one step at a time. As we know from the highly automated process of manufacturing most physical products, one small oversight can ruin an entire production run.

As solutions on the market continue to evolve, keeping human hands and eyes involved for the foreseeable future will be necessary to keep things from spiraling out of control.

We help navigate technical & ethical challenges with AI

Working with ML is an evolving challenge that we don’t take lightly.

We stay on top of best practices and keep strategy front-and-center to ensure that we approach AI development with a practical mindset that also minds the ethical Ps and Qs of good software development.