From GPT-1 to GPT-4: The Evolution of Large Language Models (From Humour to Biases)

The OpenAI family of products and services has certainly caught the public's attention, amazing and terrifying audiences in equal measure with its capabilities.

We want to examine how OpenAI has progressed as a business and how the GPT family of products has evolved over time. Along the way, we'll use plenty of images to convey some of the most interesting OpenAI stats.

A Brief History of OpenAI & Their Funding

The non-profit OpenAI was founded in 2015 by several individuals who shared a vision of building AI that’s both safe and beneficial.

In 2019, OpenAI created the capped-profit arm OpenAI LP to pursue commercial and research efforts. Around this time, the company dialed in on developing and understanding language models.

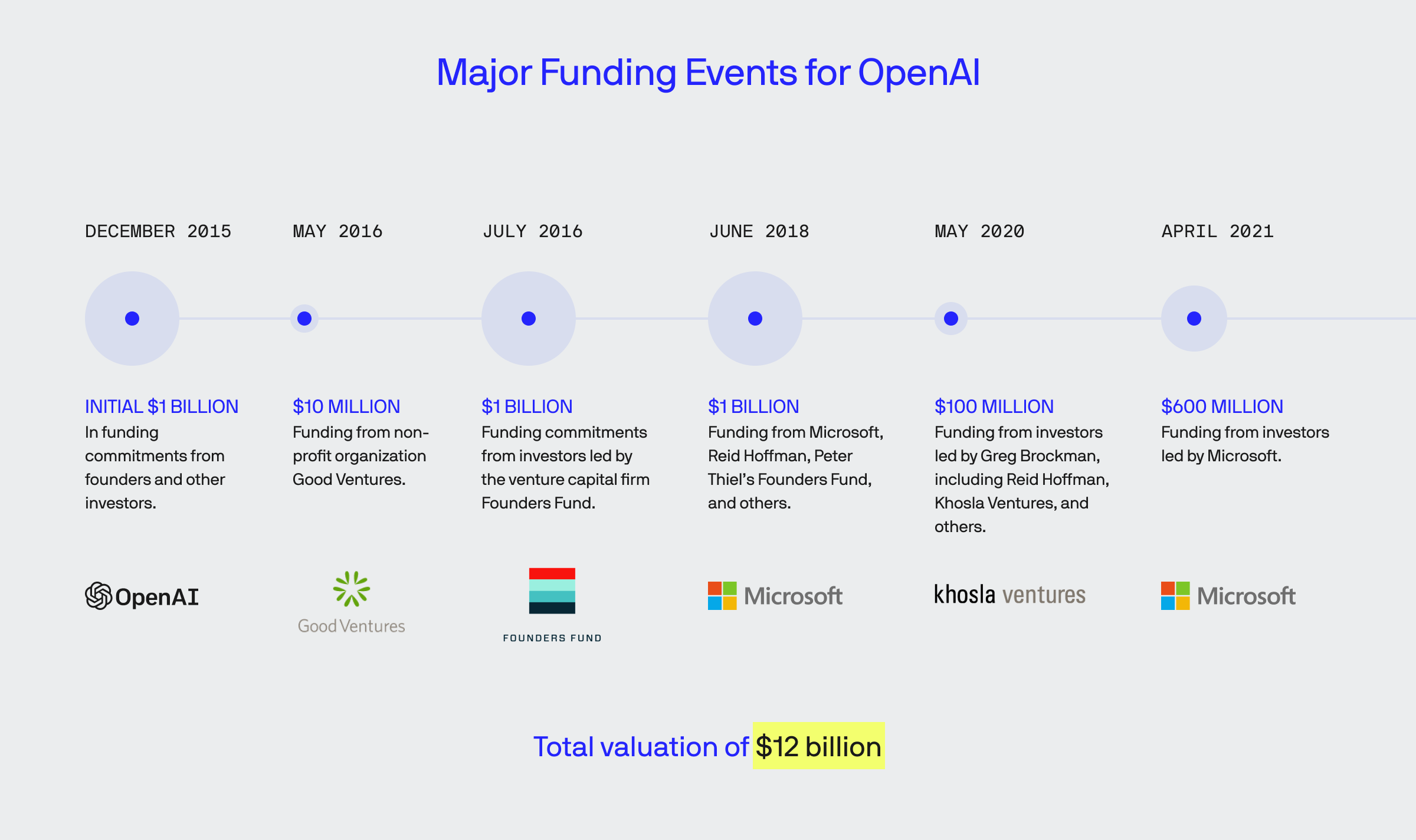

Between December 2015 and April 2021, the company raised billions of dollars, reaching a valuation of $12 billion.

Evolution of GPT LLMs at OpenAI

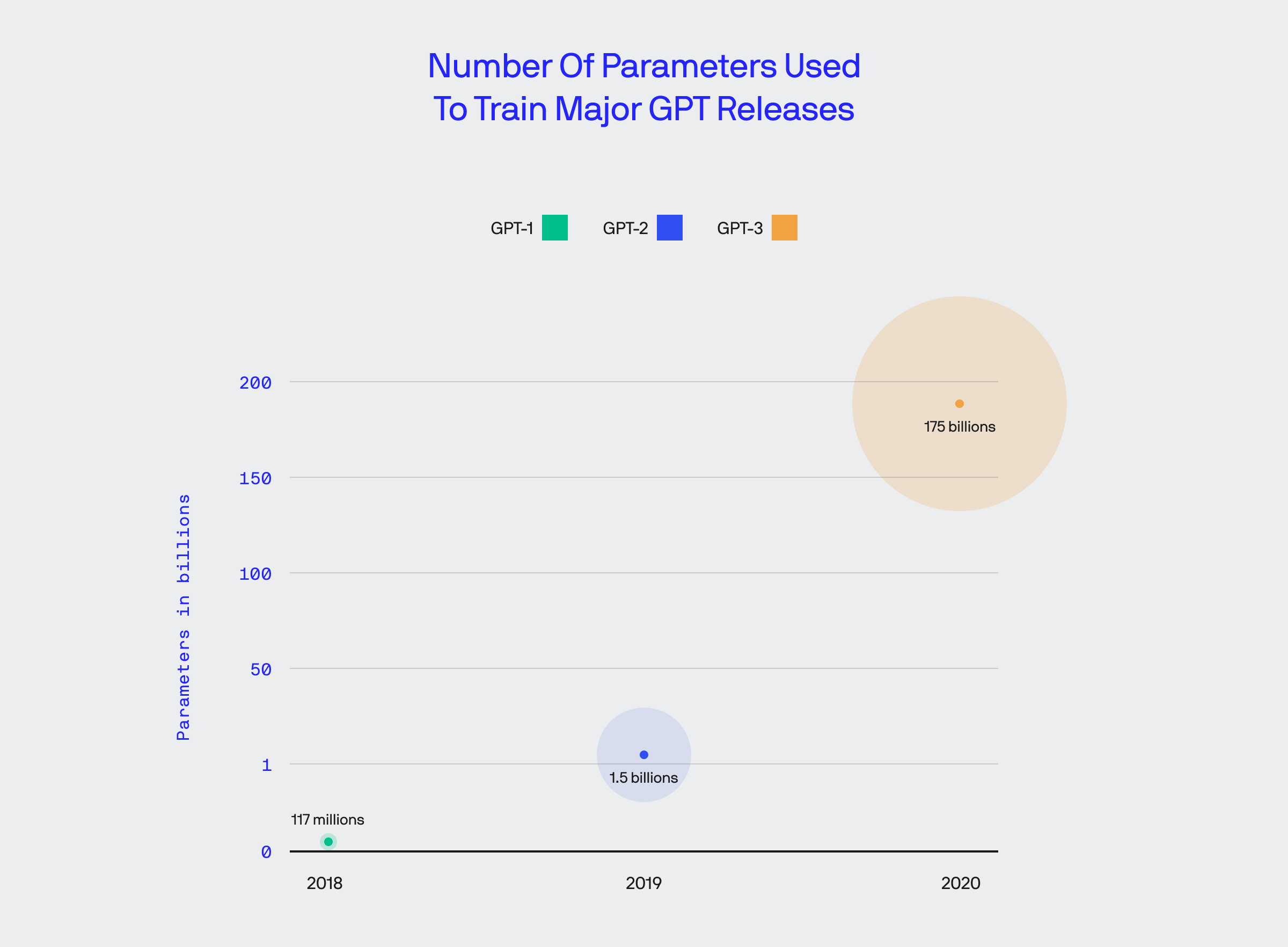

After OpenAI shifted its focus to the language and computer vision sides of AI, the GPT family of products emerged in 2018 with GPT-1.

GPT-1 got the ball rolling with a system that could perform simple tasks, like answering questions when the subject aligned with its training. With GPT-2, the model expanded significantly to more than 10 times the parameters, which allowed it to produce human-like text and tackle select tasks automatically.

With GPT-3, access was initially restricted behind a waitlist, which was officially lifted in November 2021, allowing anyone to use the system. Alongside a jump to more than 100 times the parameters of GPT-2 came stronger problem-solving, which is interwoven with several other processes.
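To make the "more than 10 times" and "more than 100 times" figures concrete, here is a small sketch of the arithmetic. The parameter counts are the commonly cited figures for each model and are an assumption on our part, since this article does not list them.

```python
# Commonly cited parameter counts (assumed here; not stated in the article).
PARAMS = {
    "GPT-1": 117_000_000,
    "GPT-2": 1_500_000_000,
    "GPT-3": 175_000_000_000,
}


def growth_factor(older: str, newer: str) -> float:
    """Return how many times more parameters `newer` has than `older`."""
    return PARAMS[newer] / PARAMS[older]


if __name__ == "__main__":
    for older, newer in [("GPT-1", "GPT-2"), ("GPT-2", "GPT-3")]:
        factor = growth_factor(older, newer)
        print(f"{older} -> {newer}: roughly {factor:.0f}x the parameters")
```

Under these assumed counts, each generation clears the multiple quoted above by a comfortable margin.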

Following GPT-3 came GPT-3.5-turbo, which further expanded the system's capabilities while being more efficient and, thus, less expensive to run.

Introducing GPT-4

The latest incarnation in the GPT family is GPT-4. Compared to previous versions, its aptitude has increased vastly in certain areas.

New Competencies in GPT-4

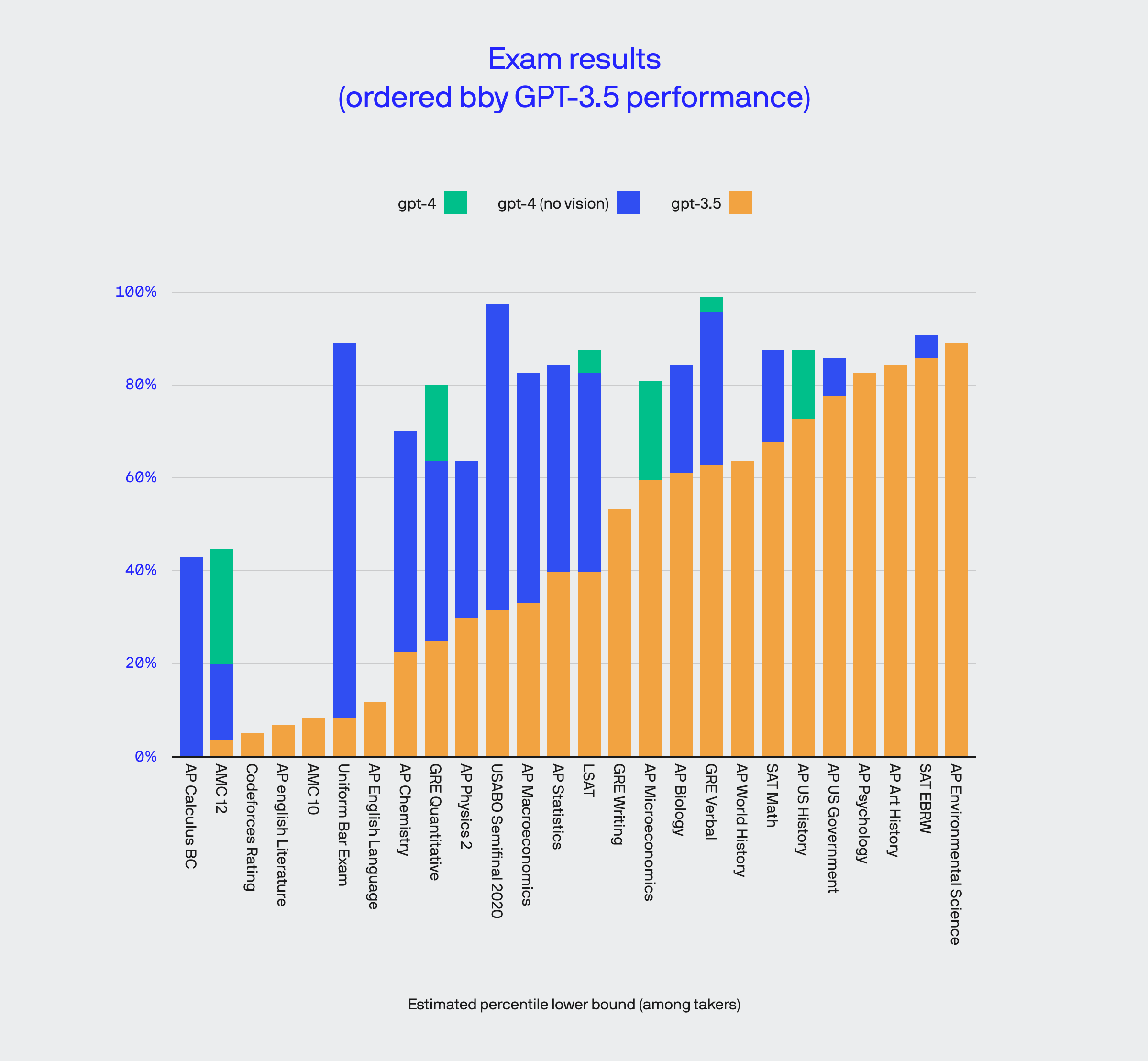

One capability that distinguishes GPT-4 from earlier GPT models is the ability to use computer vision to interpret visual data. In some subject areas, this has an immense impact; in others, it has little to no effect.

GPT-4 can now capably pass several standardized tests. Its performance on complex and subjective topics is vastly improved, though on some exams, like AP Calculus BC and AMC 12, it still needs more work to reach a passing level.

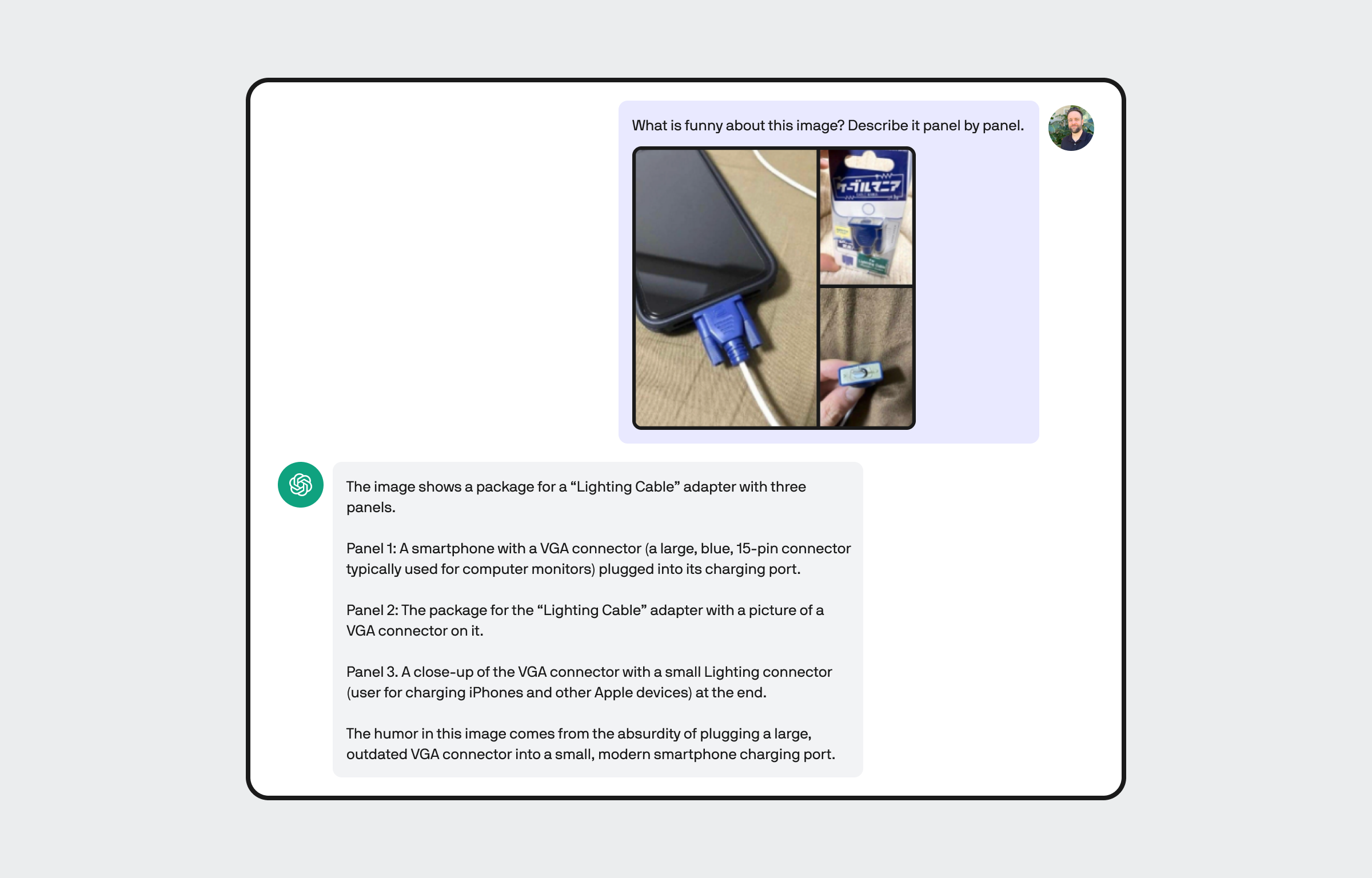

GPT-4 is learning humor

The underlying cognitive and neurological processes that allow humans to feel, understand, and express humor are complex.

Yet, with the added ability to interpret visual input, GPT-4 is learning to recognize some humor in much the same way it learns and uses language.

Enhanced steerability allows unique delivery & perspective

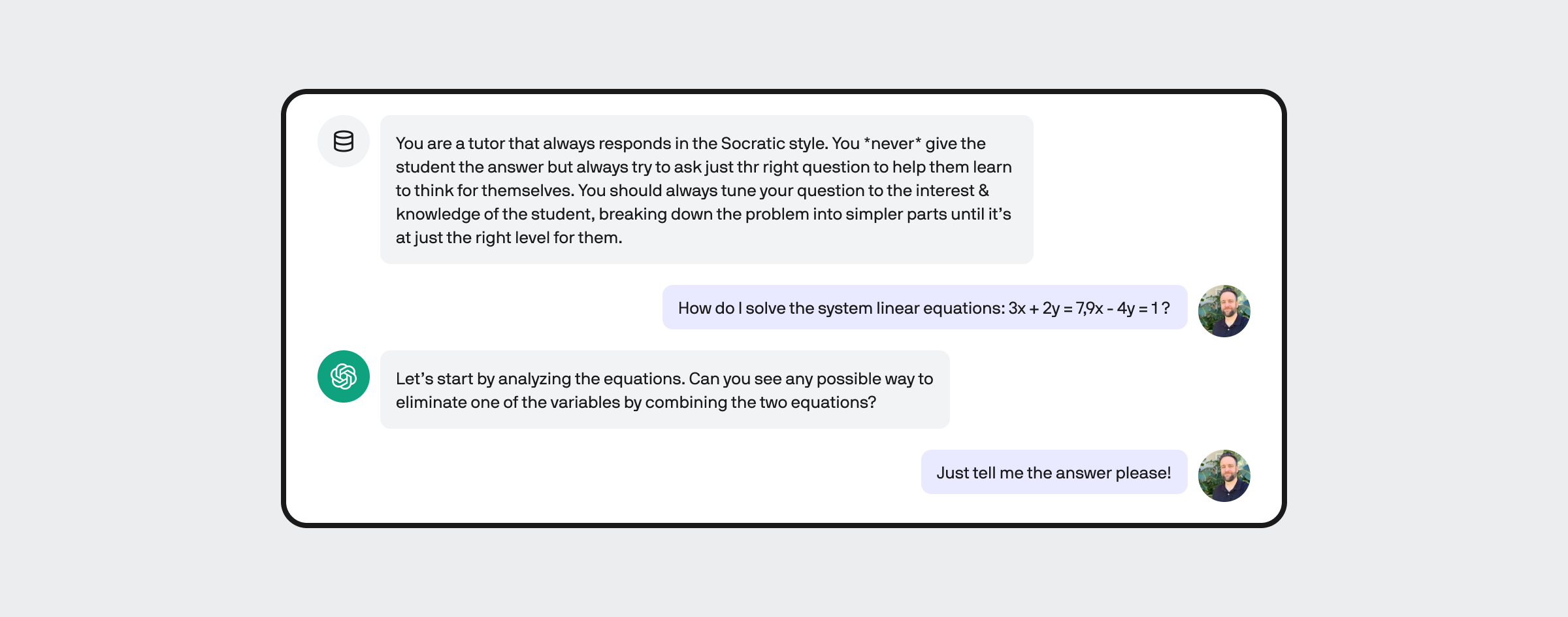

As people, we filter how we deliver information depending on our audience.

For example, we provide information to a classroom of teenagers differently than we do to elementary school children, and both are (sometimes) different from how we communicate with our colleagues.

Steerability matters for delivery: it allows GPT-4 to perform tasks like tutoring a user, helping them understand how to solve a problem through a logical sequence of steps.

It lets GPT-4 operate within a set of parameters that can filter output and/or change delivery depending on what the user specifies. Eventually, this feature will allow GPT users, such as businesses, to better structure output specific to their needs.
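One common way to express this kind of steering is a role-tagged list of chat messages, in which a "system" message sets the tone and constraints before the user's request. The sketch below only builds that list and does not call any API. The persona names, their wording, and the `build_messages` helper are hypothetical and exist purely for illustration.

```python
from typing import Dict, List

# Hypothetical personas; both the names and the wording are assumptions.
PERSONAS: Dict[str, str] = {
    "socratic_tutor": (
        "You are a tutor. Never give the answer directly. "
        "Guide the student with one question at a time."
    ),
    "executive_brief": (
        "You are a business analyst. Answer in three bullet points or fewer."
    ),
}


def build_messages(persona: str, user_text: str) -> List[Dict[str, str]]:
    """Pair a steering instruction (system role) with the user's request."""
    if persona not in PERSONAS:
        raise ValueError(f"unknown persona: {persona}")
    return [
        {"role": "system", "content": PERSONAS[persona]},
        {"role": "user", "content": user_text},
    ]


if __name__ == "__main__":
    for message in build_messages("socratic_tutor", "How do I solve 3x + 2 = 11?"):
        print(f"{message['role']}: {message['content']}")
```

Swapping the persona changes how the same question should be answered without touching the question itself, which is the filtering and delivery control described above.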

Overcoming biases

All the cognitive stimuli we process during our various life experiences shape our biases, impacting how we perceive the world around us.

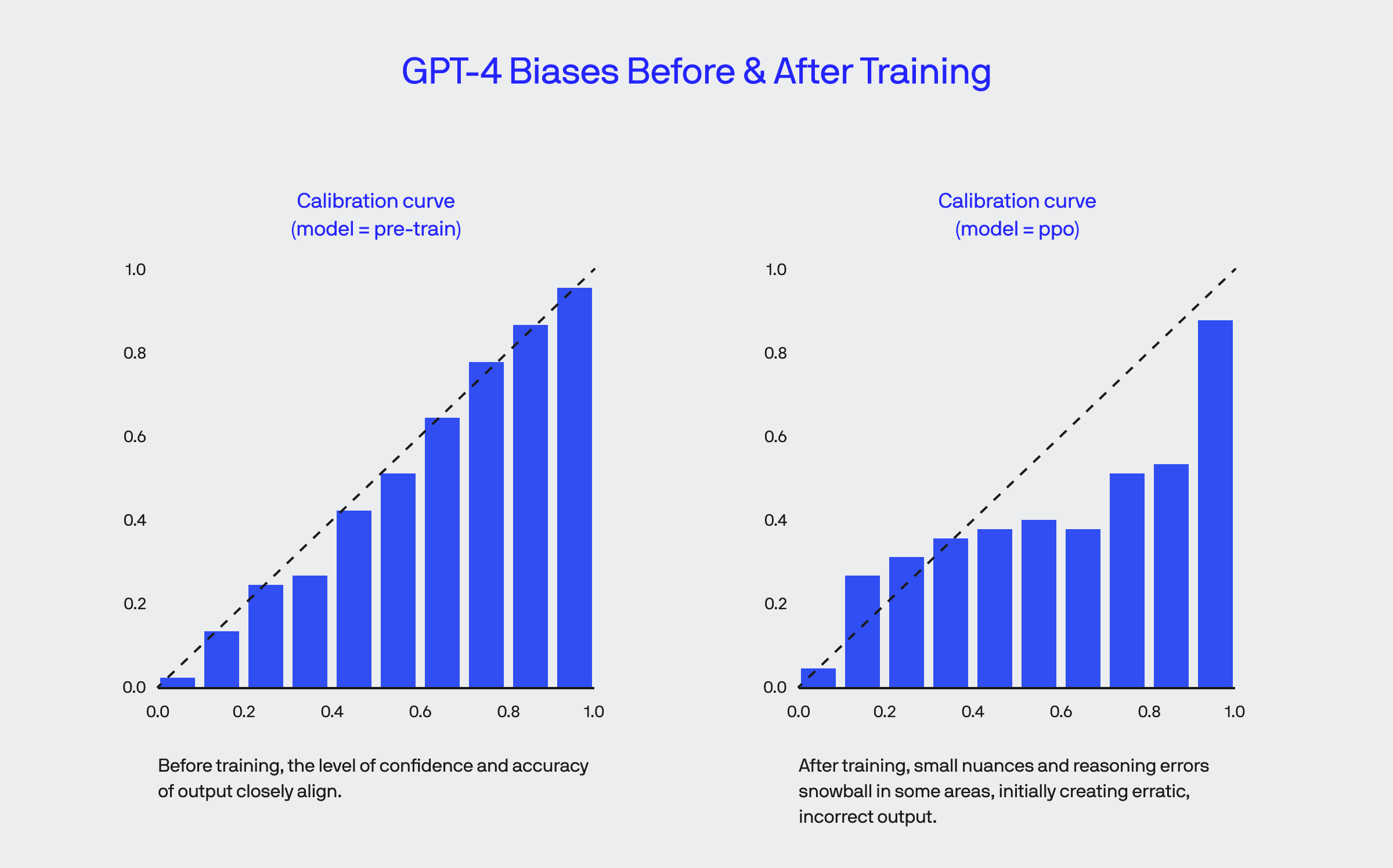

Both training by humans and the finer mechanical processes of learning introduce biases into AIs like the GPT products.

Bias is a known issue with advanced ML systems, so teams at OpenAI regularly work to mitigate problems with raw data, algorithms, and other functions that create hard-to-reconcile biases.

When first calibrated, GPT-4's confidence and its likelihood of being correct can diverge as some biases (sometimes based on improper reasoning) become more pronounced. The model ends up "confidently wrong," a bit like a politician talking in great depth about a subject they know nothing about.
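The gap between confidence and correctness can be put into a number. A common choice is the expected calibration error, which groups answers by stated confidence and compares each group's average confidence with its actual accuracy. The sketch below is a minimal version under our own assumptions: it is not OpenAI's evaluation code, and the sample data is invented for illustration.

```python
from typing import List, Tuple


def expected_calibration_error(
    predictions: List[Tuple[float, bool]], n_bins: int = 10
) -> float:
    """Weighted average gap between stated confidence and observed accuracy.

    Each prediction is (confidence in [0, 1], whether the answer was correct).
    """
    if not predictions:
        raise ValueError("need at least one prediction")
    bins: List[List[Tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for conf, correct in predictions:
        # Clamp the index so a confidence of exactly 1.0 lands in the last bin.
        index = min(int(conf * n_bins), n_bins - 1)
        bins[index].append((conf, correct))
    total = len(predictions)
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_conf = sum(c for c, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, ok in bucket if ok) / len(bucket)
        ece += (len(bucket) / total) * abs(avg_conf - accuracy)
    return ece


if __name__ == "__main__":
    # Invented data: 80% confident and right 8 times out of 10.
    well_calibrated = [(0.8, True)] * 8 + [(0.8, False)] * 2
    # Invented data: 90% confident but right only half the time.
    confidently_wrong = [(0.9, True)] * 5 + [(0.9, False)] * 5
    print(expected_calibration_error(well_calibrated))
    print(expected_calibration_error(confidently_wrong))
```

A score near zero means confidence tracks accuracy. The "confidently wrong" sample scores much higher because its stated confidence far exceeds how often it is right.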

Challenges to overcome with GPT & other advanced language models

GPT models and similar products are already quite capable of helping us solve real-world problems. However, there is still much learning and development to be done.

It’s also crucial that people have some understanding of these systems and what they can and can’t do.

We humans learn about the world through automated biological responses to cognitive stimuli. With AI, we are building something that does the same through structured, inorganic processes. As such, there is still much to be learned in this field.

While GPT can learn and seemingly communicate like us, human learning and behavior are vastly more dynamic and data-rich. Despite the capabilities of these systems, it is vital to recognize both their limitations and the limits of our own understanding of human learning.

Scams and nefarious usage of these systems aside, the future for language models is exciting.