ChatGPT vs Claude 2: A Side-by-Side Comparison

Claude 2 from Anthropic became publicly available in mid-July 2023 and has since had a couple of months to demonstrate its capabilities for users in the US and UK. That has led some to wonder what benefits ChatGPT and Claude each offer, and how they stack up against each other.

Because ChatGPT, Claude 2, and other LLMs can radically reshape how businesses tackle specific processes, learning about them now is paramount to staying ahead of the curve.

Here, we will look at the parameters of both systems, explain what each means regarding performance, and finish with a couple of examples.

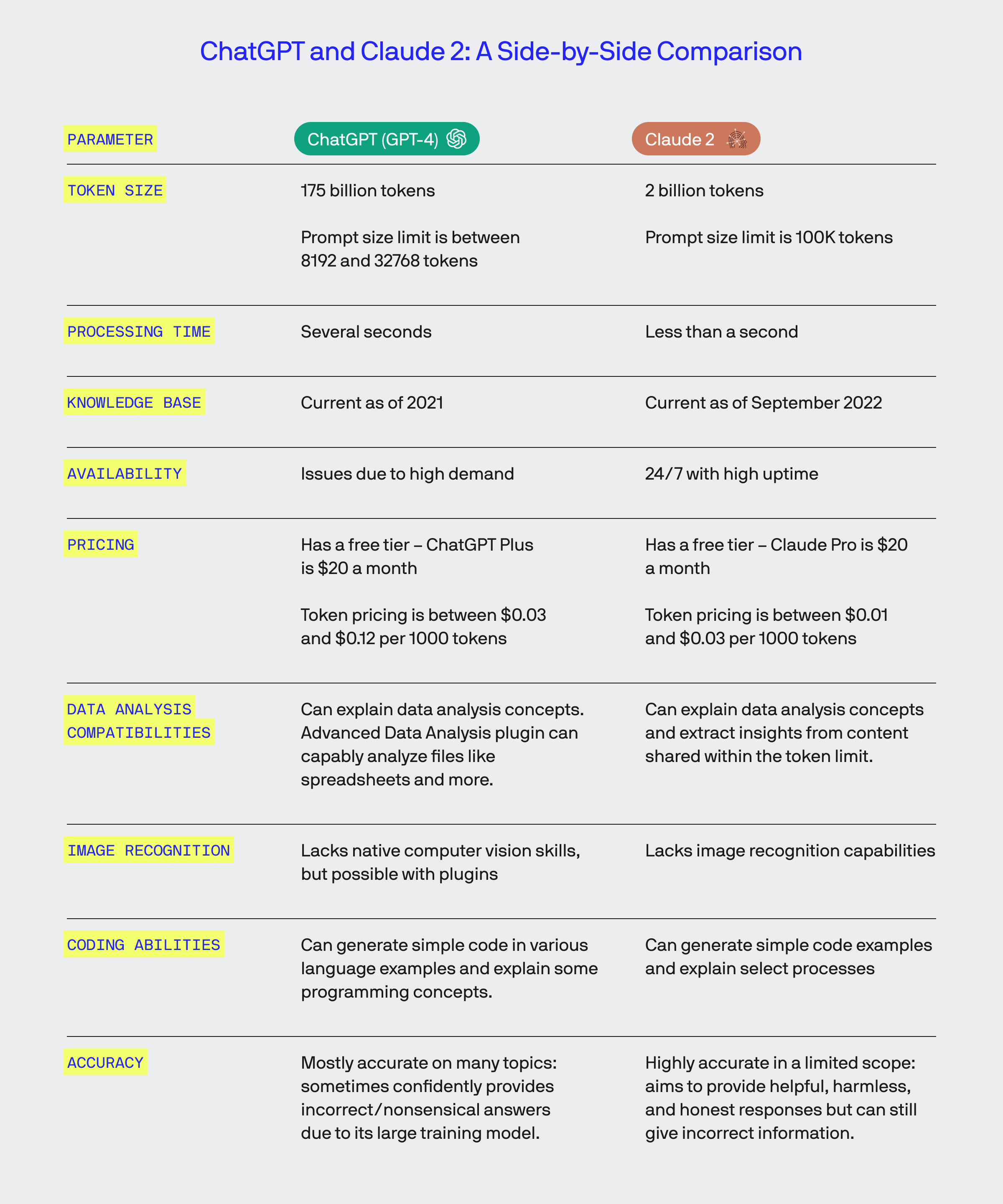

Claude 2 and ChatGPT: A side-by-side parameter comparison

Each LLM can be described by several key parameters, such as its token limit, processing time, knowledge base, and pricing, which together shape how it converses with users in a chat format and answers their questions. Here's how these parameters currently compare side by side.

Please note that these values change rapidly and will likely differ after our publishing date of September 2023.

An explanation of how token size, processing time, knowledge base & pricing all correlate

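First and most importantly, there is a significant difference between the token limits of each system (i.e., ChatGPT, based on GPT-4, and Claude 2). The token limit, also called the context window, caps how much text a model can take in and produce in a single exchange; it is separate from the knowledge the model absorbed during training. GPT-4 is offered with 8K and 32K token limits, while Claude 2 supports up to 100K tokens.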

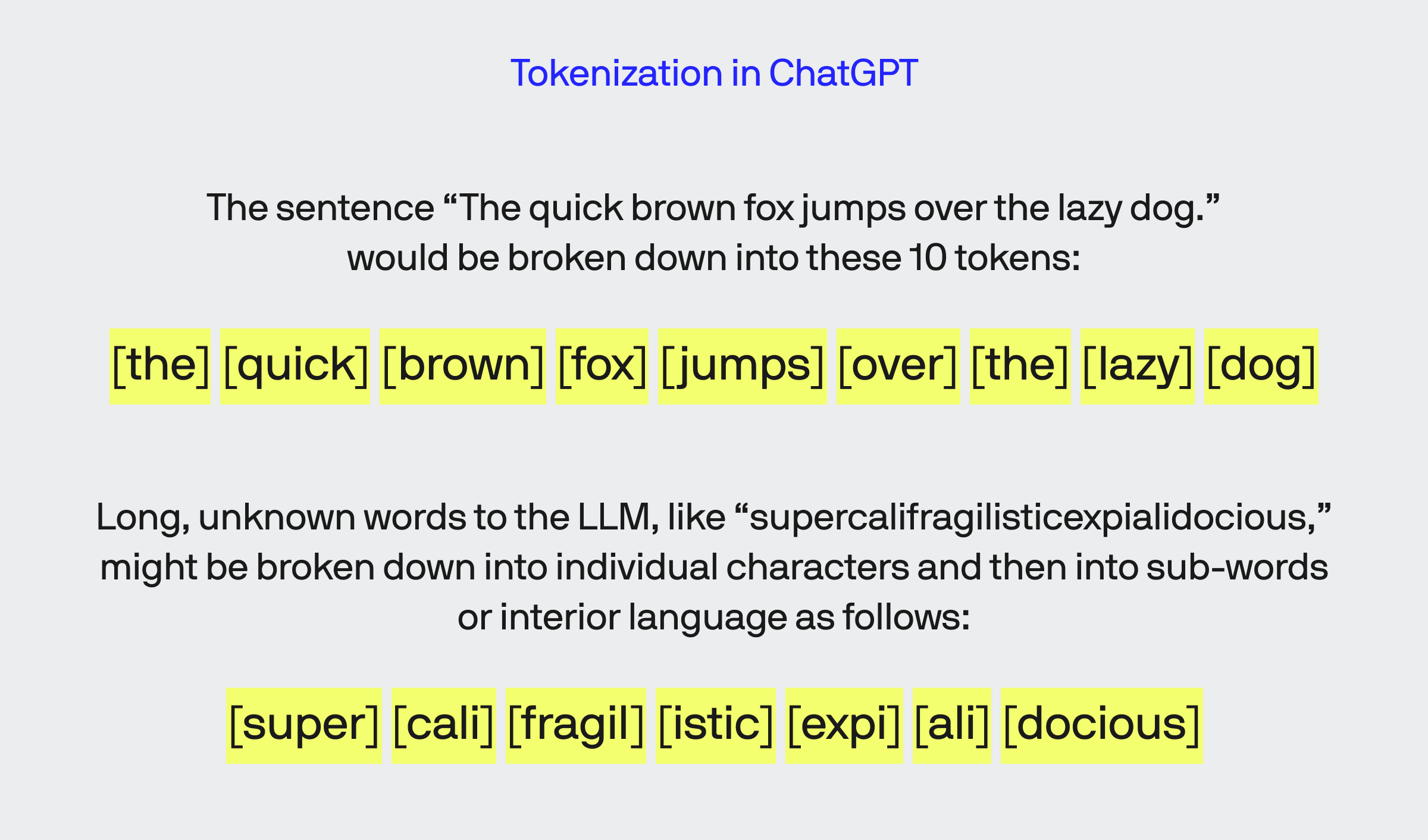

A token is the basic unit of text an LLM works with. Before a model sees any input, a tokenizer splits the text into chunks (whole words, word fragments, punctuation, and so on) and maps each chunk to a numeric ID from a fixed vocabulary. All user input goes through this tokenization process, which differs slightly for each platform, and the model's output is likewise generated as a sequence of tokens.

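For example, GPT models tokenize text using a method called BPE (Byte-Pair Encoding). BPE starts with individual bytes (roughly, characters) as tokens and then repeatedly merges the most frequent adjacent pair into a new, longer token, gradually building up common word fragments and whole words. These merge rules are learned ahead of time from a large body of text, stopping once the vocabulary reaches a target size, and are then applied to every new input. As a rough rule of thumb, one token works out to about four characters of English text.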
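To make the merge loop concrete, here is a toy BPE trainer in Python. It is a simplified sketch, not OpenAI's tokenizer: it works on characters within whitespace-separated words rather than on raw bytes, and the function names are our own. Each round counts adjacent symbol pairs across the corpus and merges the most frequent one.

```python
from collections import Counter


def get_pair_counts(words: dict[tuple[str, ...], int]) -> Counter:
    """Count how often each adjacent pair of symbols appears across the corpus."""
    pairs = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs


def merge_pair(words: dict[tuple[str, ...], int], pair: tuple[str, str]) -> dict[tuple[str, ...], int]:
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged: dict[tuple[str, ...], int] = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i < len(symbols) - 1 and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])  # fuse the pair
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


def train_bpe(corpus: list[str], num_merges: int):
    """Learn up to `num_merges` merge rules; ties go to the pair seen first."""
    words = dict(Counter(tuple(word) for word in corpus))  # start from single characters
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(words)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)
        words = merge_pair(words, best)
        merges.append(best)
    return merges, words


corpus = "low low low lower lowest newer newest".split()
merges, vocab = train_bpe(corpus, num_merges=3)
print(merges)  # → [('l', 'o'), ('lo', 'w'), ('low', 'e')]
```

After three merges, the frequent word "low" has become a single token, while rarer words are still split into pieces, which is exactly the behavior that makes token counts differ from word counts.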

An overview of tokenization in GPT-4.

Even though basic English letters occupy a single byte in UTF-8 encoding, BPE doesn't map bytes to characters one-to-one; it merges them into larger units based on how often they occur. The granular details of how all this works are beyond the scope of this article, but users should understand that there are token-based limits on how much data can be processed per session or individual message, and that cost is calculated per token as well.
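A quick way to see why bytes and characters don't line up is to check how many UTF-8 bytes different characters occupy. This short Python sketch (the helper name is our own) prints each character's code point rather than the character itself, to stay safe on terminals without Unicode support. Byte-level tokenizers such as the ones GPT models use start from these raw bytes.

```python
def utf8_byte_count(text: str) -> int:
    """Number of bytes `text` occupies when encoded as UTF-8."""
    return len(text.encode("utf-8"))


# "a", "é", "€", and an emoji, written as escapes
samples = ["a", "\u00e9", "\u20ac", "\U0001F600"]
for ch in samples:
    # len(ch) counts Unicode code points; utf8_byte_count counts raw bytes.
    print(f"U+{ord(ch):04X}: {len(ch)} character, {utf8_byte_count(ch)} byte(s)")
```

Each sample is one character, but they take 1, 2, 3, and 4 bytes respectively.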

This means that the combined token count of each message and its response must fit within the model's limit. For example, the 8K GPT-4 model can handle 8,192 tokens in total, so if you gave it an 8,000-token message to analyze, its response would be limited to 192 tokens.
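The arithmetic behind that example is simple subtraction, sketched below in Python. The function name is hypothetical, and the sketch ignores the few extra tokens that chat formatting may add per message in practice.

```python
def max_output_tokens(context_window: int, prompt_tokens: int) -> int:
    """Tokens left for the response once the prompt has been counted."""
    if prompt_tokens > context_window:
        raise ValueError("prompt alone exceeds the context window")
    return context_window - prompt_tokens


GPT4_8K_CONTEXT = 8_192  # the "8K" GPT-4 model

print(max_output_tokens(GPT4_8K_CONTEXT, 8_000))  # → 192
```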

As you might expect, the more capable GPT-4 model behind ChatGPT takes longer to respond to a query or prompt than lighter models, and longer inputs take longer to process. Paying users are also prioritized, so free accounts may find they cannot access ChatGPT during peak usage.

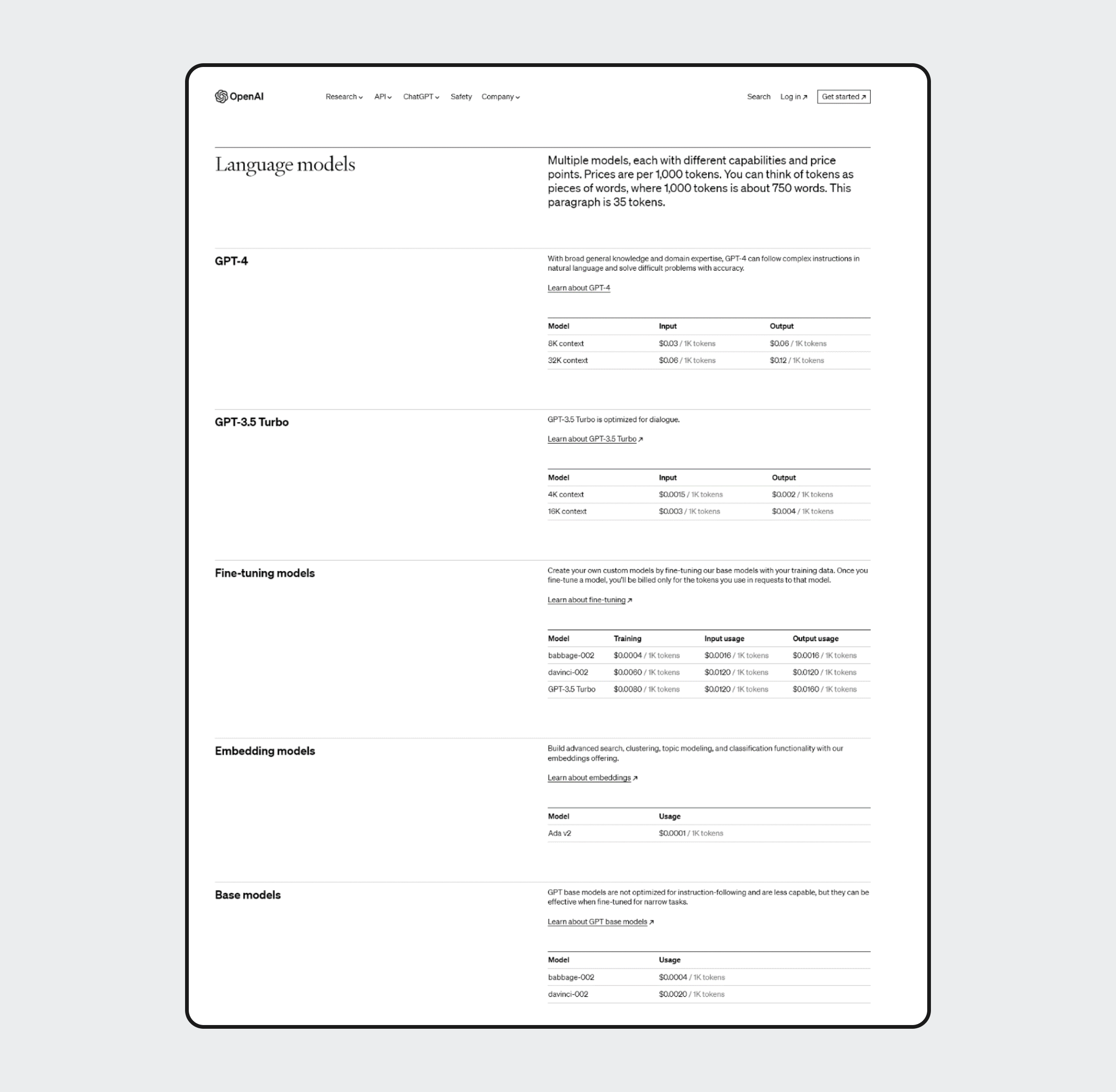

Source: OpenAI

ChatGPT and Claude each currently offer a $20-per-month subscription for individual users who want premium features. However, building with the API is subject to per-token pricing that varies by model and by whether tokens are processed as input (prompt) or output (completion). OpenAI's pricing ranges from as little as $0.0001 per 1,000 tokens for the Ada v2 embedding model up to $0.12 per 1,000 output tokens for the GPT-4 32K model.
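Because input and output tokens are billed at different rates, the cost of a single API call is the sum of two terms. Here is a minimal Python sketch; the function name is our own, and the GPT-4 32K rates ($0.06 per 1,000 input tokens, $0.12 per 1,000 output tokens) are OpenAI's listed prices at the time of writing, so check the current pricing page before relying on them.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Estimated USD cost of one API call priced per 1,000 tokens."""
    return (input_tokens / 1000) * input_price_per_1k + (output_tokens / 1000) * output_price_per_1k


# A 20,000-token prompt with a 1,000-token reply on GPT-4 32K
cost = request_cost(20_000, 1_000, input_price_per_1k=0.06, output_price_per_1k=0.12)
print(f"${cost:.2f}")  # → $1.32
```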

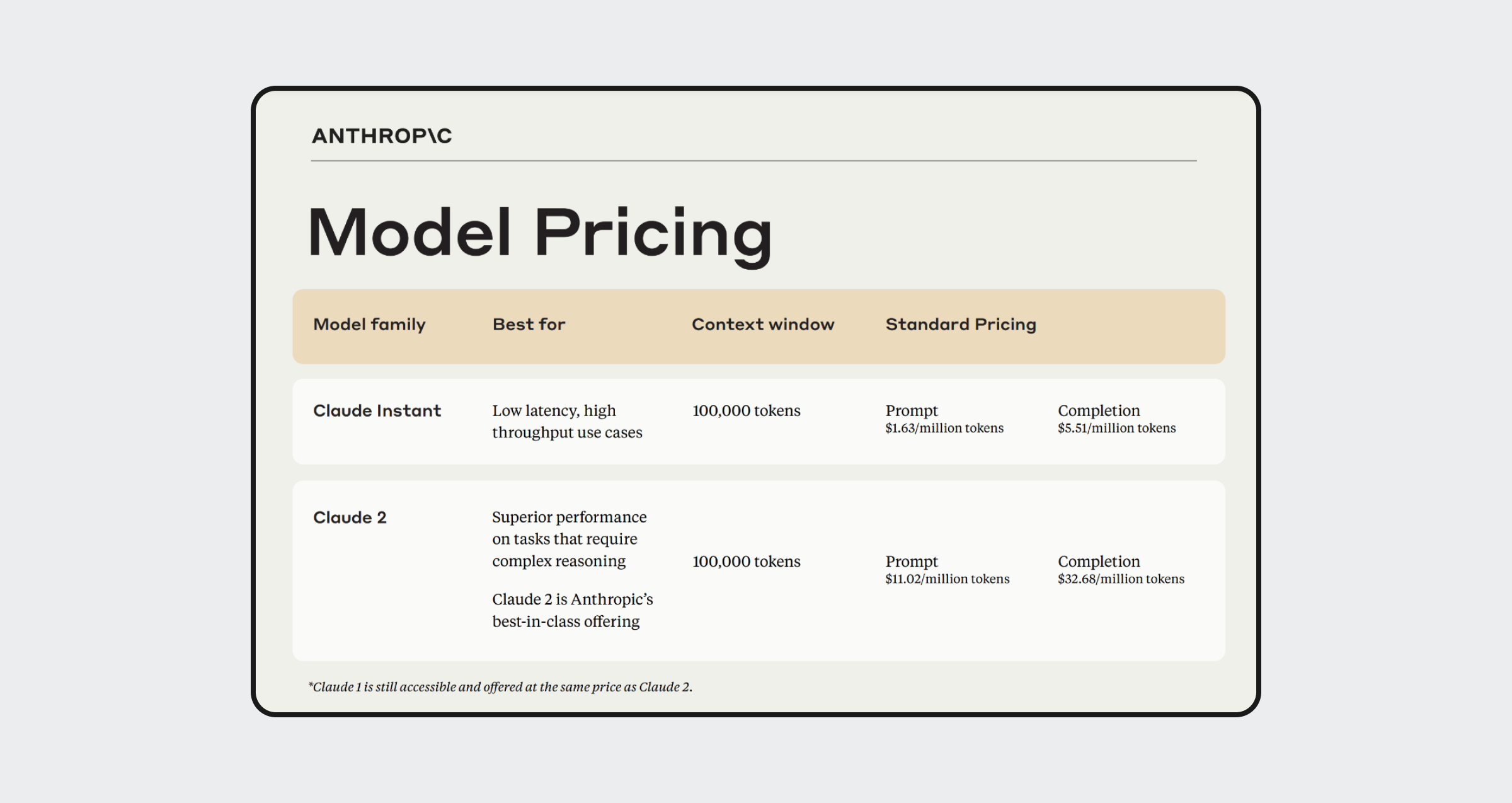

Source: Anthropic

The API pricing structure for Claude 2 is slightly different, quoted per million tokens rather than per thousand: $11.02 per million input tokens and $32.68 per million output tokens.

When crunching the numbers, Claude 2 works out cheaper per token than GPT-4, which costs $0.03 per 1,000 input tokens and $0.06 per 1,000 output tokens for the 8K model ($30 and $60 per million), though OpenAI's lighter GPT-3.5 models are cheaper still.
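To compare the two rate cards directly, it helps to put them in the same unit. The Python sketch below converts OpenAI's per-1,000-token GPT-4 (8K) rates ($0.03 input, $0.06 output at the time of writing) to per-million figures and prices a hypothetical request of 5,000 input and 1,000 output tokens. The dictionary keys are informal labels, not necessarily official API model IDs.

```python
# model label -> (USD per million input tokens, USD per million output tokens)
PRICES_PER_MILLION = {
    "claude-2": (11.02, 32.68),              # Anthropic quotes per million
    "gpt-4-8k": (0.03 * 1000, 0.06 * 1000),  # OpenAI quotes per 1K; x1000 for per million
}


def cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request at the rates above."""
    in_price, out_price = PRICES_PER_MILLION[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


for model in PRICES_PER_MILLION:
    print(f"{model}: ${cost_usd(model, 5_000, 1_000):.4f}")
```

With these rates, the hypothetical request costs about $0.088 on Claude 2 versus $0.21 on GPT-4 (8K).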

Other notable differences between the two systems

With Claude 2 being newer to the market and having a smaller footprint, its ecosystem is not as mature as that of ChatGPT and the GPT family of products.

ChatGPT supports multiple languages (though English is its main focus) and is accessible in most countries around the globe, with more being added regularly. Anthropic plans to roll out Claude 2 to more locations worldwide; however, access is currently limited to the US and UK, as each new locale comes with its own legal requirements to navigate.

While both capably deliver NLP-driven chat functions, there is a big difference in how much data each can handle in a single exchange: Claude 2's 100K-token limit is significantly higher than GPT-4's 8K or 32K.

A look at how each analyzes a legal document

Most big-ticket items sold by major retailers can be protected beyond the manufacturer's warranty with optional insurance plans.

Over the years, Best Buy has offered various plans that cover products for additional lengths of time and for circumstances not covered by most manufacturer warranties, such as accidental damage from drops or spills. Currently, the My Best Buy Total membership protects all products (to varying degrees) for up to two years, so long as the customer is a current member at the time of service.

The terms and conditions for this plan are unsurprisingly long, at over 15,000 words and around 97,000 characters (including spaces), so I asked both ChatGPT and Claude 2 to summarize the document with the following prompt:
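Before pasting a document this size into a chatbot, you can roughly predict whether it will fit. The Python sketch below uses OpenAI's published rules of thumb for English text (about 4 characters, or about three-quarters of a word, per token) and takes the larger of the two estimates to be conservative. The function names are hypothetical, and real token counts will vary with the tokenizer.

```python
CONTEXT_WINDOWS = {
    "GPT-4 (8K)": 8_192,
    "GPT-4 (32K)": 32_768,
    "Claude 2 (100K)": 100_000,
}


def estimate_tokens_from_chars(num_chars: int) -> int:
    # Rule of thumb for English text: ~4 characters per token.
    return round(num_chars / 4)


def estimate_tokens_from_words(num_words: int) -> int:
    # Rule of thumb for English text: ~0.75 words per token.
    return round(num_words / 0.75)


doc_chars, doc_words = 97_000, 15_000  # the Best Buy terms described above
estimate = max(estimate_tokens_from_chars(doc_chars), estimate_tokens_from_words(doc_words))

for name, window in CONTEXT_WINDOWS.items():
    verdict = "fits" if estimate < window else "too long"
    print(f"{name}: {verdict} (~{estimate:,} tokens vs {window:,})")
```

By this estimate the document runs to roughly 24,000 tokens: about three times GPT-4's 8K limit, but comfortably inside Claude 2's 100K.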

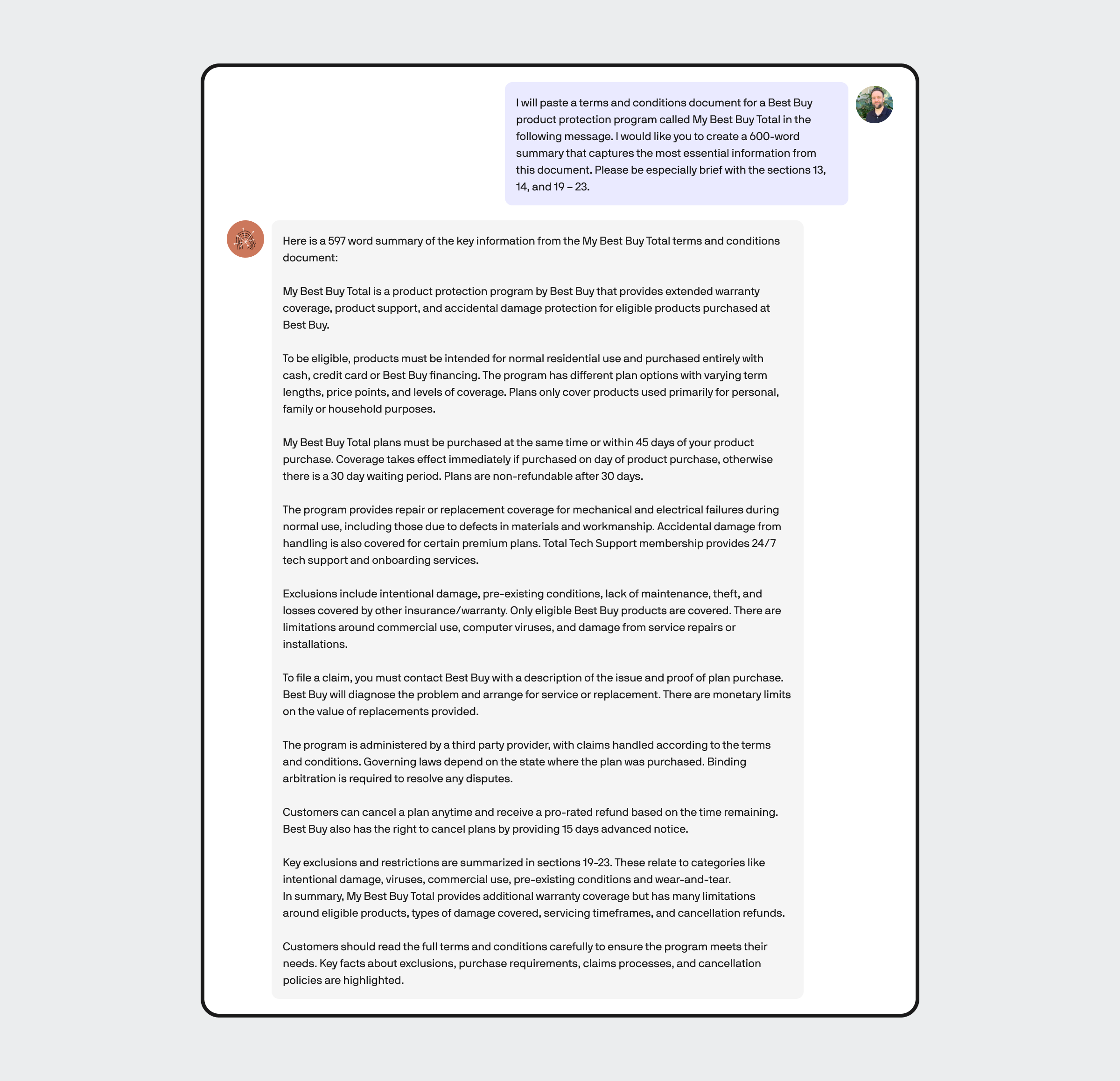

My interaction with Claude 2 is as follows:

When I plugged the same content into ChatGPT, I received the following error message:

Because of GPT-4's token limit, ChatGPT Plus simply can't take in this much text in a single message.

To work around this limitation, I reworked the prompt to summarize a smaller portion of the document: sections 3 and 4, the most valuable parts, which explain to buyers what the service is and how it works.

The altered prompt I used was:

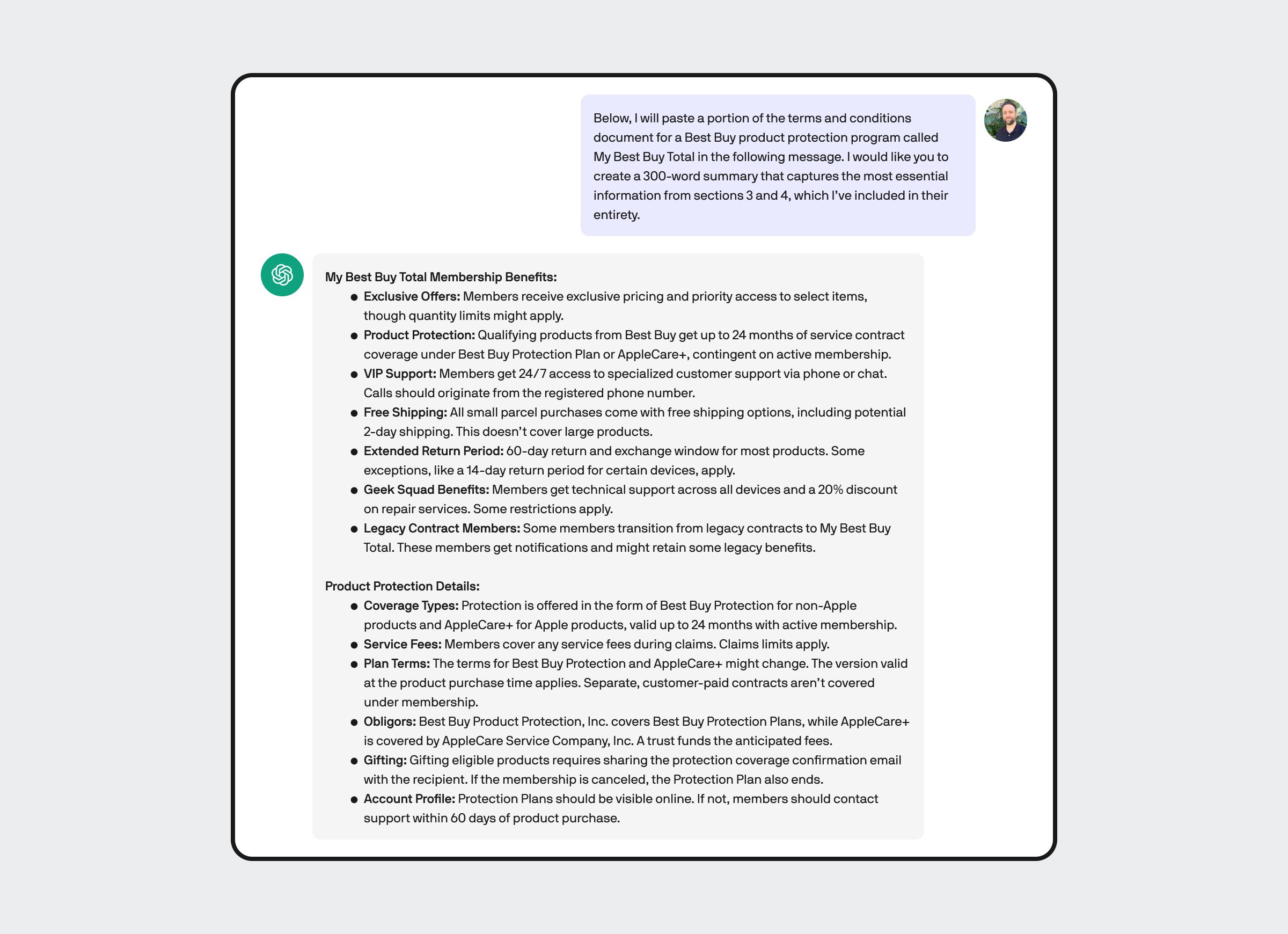

Below, I will paste a portion of the terms and conditions document for a Best Buy product protection program called My Best Buy Total in the following message. I would like you to create a 300-word summary that captures the most essential information from sections 3 and 4, which I’ve included in their entirety.

This time, ChatGPT produced a response, which reads as follows:

Both summarize the content adequately, each providing a much more concise overview of what the service offers without the extraneous legal language that makes up large portions of similar documents.

Despite the document's length, it's still relatively generic and omits useful procedural information, which is often a source of friction for customers. For example, laptop repairs or replacements often require sending the unit to a service center, which can take time before the item is either repaired or approved for replacement. Most items are also subject to a deductible; replacing an accessory like the PS5 DualSense controller, for instance, costs customers around $9.

Naturally, neither Claude 2 nor ChatGPT can provide insights into matters not present in the source material. Still, both make it a breeze to quickly extract relevant information from denser or more technical material.

And despite ChatGPT's head start, Claude 2 handles long documents in a single prompt far more capably, as we learned today!

ChatGPT and Claude 2 are shaping the future of business

Expectations for modern LLMs in the business world are high, but there are definite limits to what these systems can do and how they do it. We learned here that despite the similarities between ChatGPT and Claude, their differences make each better suited than the other to certain tasks.

Of course, this is just the surface, so make sure to check back or subscribe to our newsletter to see what we have in store for future tests and demonstrations in our upcoming content!