3 Reasons Why AI Isn’t Going to Replace Developers Anytime Soon

Many businesses are eyeballing AI to see how much they can squeeze out of the technology – if it can communicate and solve problems like a human, it can do a human’s job, right?

If you’ve been following along with AI’s capabilities and shortcomings, you likely already know the answer is “not really.”

Here, we’ll look at why.

Why AI is nowhere close to being able to think like a human

AI systems built on machine learning (ML) can learn autonomously, but they need human help to do it right. Without human oversight, minor flaws in logic tend to get worse with each passing iteration and can derail a project.

The creativity isn’t there, and a lack of human perspective means it can’t always solve problems or perform tasks in ways that align with human needs. For example, think about how most modern image generators draw hands.

I generated this and many other images with Lensa – about half came out decent, but the above is just one of many bizarre images, some of which cross into uncanny valley territory.

The GPT products, Google’s Bard, and everything else on the market all lack human appendages, like our hands – as humans, we know what an ordinary hand is supposed to look like, how it moves, its mechanical limitations, and other properties because they’re (usually) an intrinsic part of our existence.

Everything these systems know about a human hand is through a looking glass – they have no frame of reference except for math and code provided by a human.

Essentially, the logic used to draw a hand has a mountain of examples to pull from, but seemingly innocuous logical flaws compound as it draws, like credit card interest, to produce results that are anywhere from comical to unsettling.

Now, apply this to a system writing code for digital products meant for human use: it will get parts of it right, but without a human perspective, things get weird with systems in their current state.

3 reasons AI can’t replace developers

The skinny is that different flavors of AI can provide unprecedented assistance for many tasks, but extended periods of automation for most tasks will almost certainly deviate into the obscure. Let’s look at some details as to why AI won’t replace developers anytime soon.

1. AI is objectively limited when it comes to creative thinking

General computing and AI both solve problems through mechanical, logic-driven processes – the most significant differentiator is simply that the latter has many more layers. While AI can use data to create solutions around trends, it’s limited in how far it can go outside its knowledge base to solve problems in a way that “fits” with the human experience.

For decades, people have speculated that a military AI will turn into some Skynet-like situation, as in the iconic Terminator series, where humans are labeled a sort of virus with respect to overall planet health, leading it to destroy us all.

Savagery aside, it’s just not that creative.

As we know, few issues in the real world are decisively solved with rigid absolutes, societal changes are slow (unless it’s adopting a convenience), and there’s the whole matter of the human tendency to behave irrationally, albeit predictably.

Today’s LLMs (Large Language Models) are an excellent start for using AI in more places to help us with many tasks – but they’re nowhere near having all the pieces they need to stand in a human’s position and make good decisions about how best to design and develop a product that other humans can effectively use.

Practical creativity is a multifaceted process, often tying in multiple cognitive functions. You can train AIs to bounce ideas off each other, but without human validation (and testing), you’re most likely to get creative nonsense.

After all, some AIs draw us with butterfly hands and don’t give it a second thought.

2. You can use context to navigate ambiguity & develop proper interpretations. AI, not so much

Language and expressions evolve throughout time, from the idioms and axioms we use to condense and convey language to the humor we use to entertain ourselves and others.

We think and feel differently about various things, and we can communicate the same idea in vastly different ways.

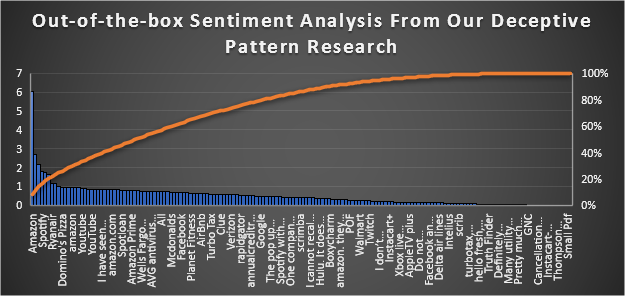

An excellent example of how machines can’t quite comprehend all the nuances of communication was evident during our deceptive pattern research.

The first and last step in an attempt to quantify the sentiment of survey responses.

I ran open-ended responses through Azure’s Sentiment Analysis plugin and then used the numerical value it derived (i.e., the response’s “sentiment score”) in a Pareto chart to evaluate the data further.

While it did help group data, the advantage stopped there. Azure’s values were all over the place, as some definitively negative sentiments were either not recognized or evaluated to be somewhat positive (with 100% being a positive sentiment).

Ultimately, the chart seen above didn’t produce any directly valuable data, as is, because of many language nuances that the system couldn’t make sense of without further training.
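To make the workflow above concrete, here is a minimal sketch of turning sentiment scores into the counts and cumulative percentages behind a Pareto chart. It does not call Azure; the responses, scores, thresholds, and the `bucket` and `pareto_table` names are all made up for illustration, and the original analysis may well have grouped its data differently.

```python
from collections import Counter


def bucket(score: float) -> str:
    """Map a 0-1 sentiment score to a coarse label (thresholds are assumptions)."""
    if score < 0.4:
        return "negative"
    if score < 0.6:
        return "neutral"
    return "positive"


def pareto_table(labels):
    """Return (label, count, cumulative_percent) rows, largest count first."""
    counts = Counter(labels).most_common()
    total = sum(count for _, count in counts)
    rows, running = [], 0
    for label, count in counts:
        running += count
        rows.append((label, count, round(100 * running / total, 1)))
    return rows


# Made-up responses and scores, for illustration only.
scored = [
    ("I love how fast checkout is", 0.95),
    ("Great, another pop-up I can't close", 0.80),  # sarcasm scored as positive
    ("Cancelling was confusing", 0.20),
    ("It was fine", 0.50),
]
table = pareto_table(bucket(score) for _, score in scored)
```

The sarcastic second response is the kind of case described above: a score-based grouping files it under "positive," and only a human reading the text would catch the mistake.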

Considering how important feedback is to building successful iterations of a product, humans are needed to evaluate human communication.

These tools can help us, but only to a certain extent, which varies from case to case. Developers and designers use much more than text-based data alone to evaluate the content of a conversation, which is paramount to finding reasonable solutions through user feedback.

With a limited understanding of language, AI can’t fully understand feedback. And with no ability to understand feedback, it doesn’t really matter how well it can write code if it’s going to build something people simply don’t like.

3. AI can only be as ethical as we tell it to be

You can think of AI as a vast array of “if/then statements,” which are the foundation for many operations these systems perform in any given software.

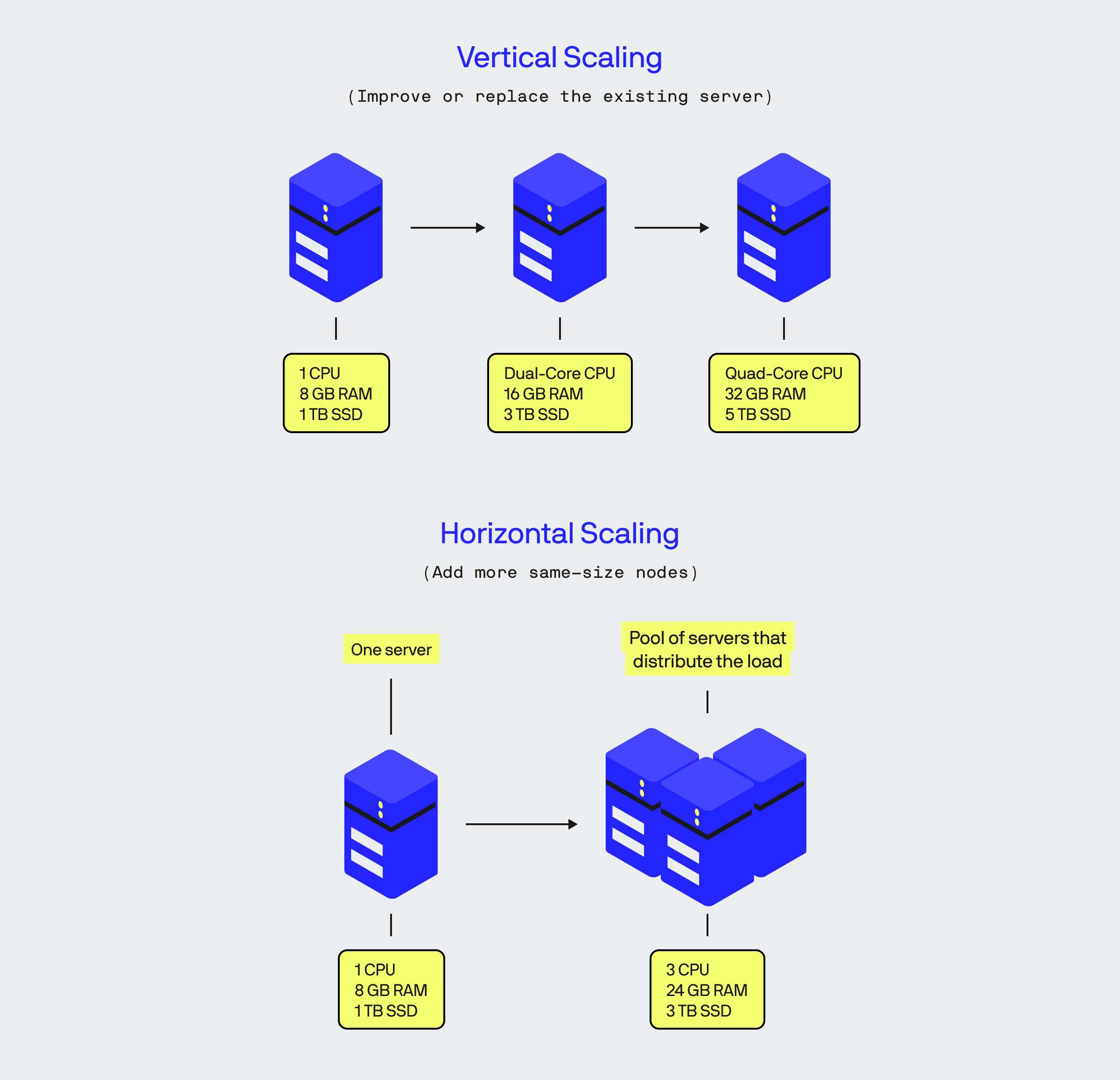

Traditional computing historically processed “if this, then do this” commands mostly linearly, but all that changed once we learned how to leverage horizontal scaling in computing.

Rather than adding more capacity to any one thing, as demonstrated above, tasks are offloaded and delegated between different physical and virtual machines.

Proper horizontal scaling improves efficiency for most tasks, distributes heat better, and lends itself to modern AI’s computational processes, which often run multiple logic operations concurrently, including ethical checks.

Such designs allow subordinate ethical functions to execute during other operations, powering processes like assessing content in real-time (like when used in content moderation) or when given a request.

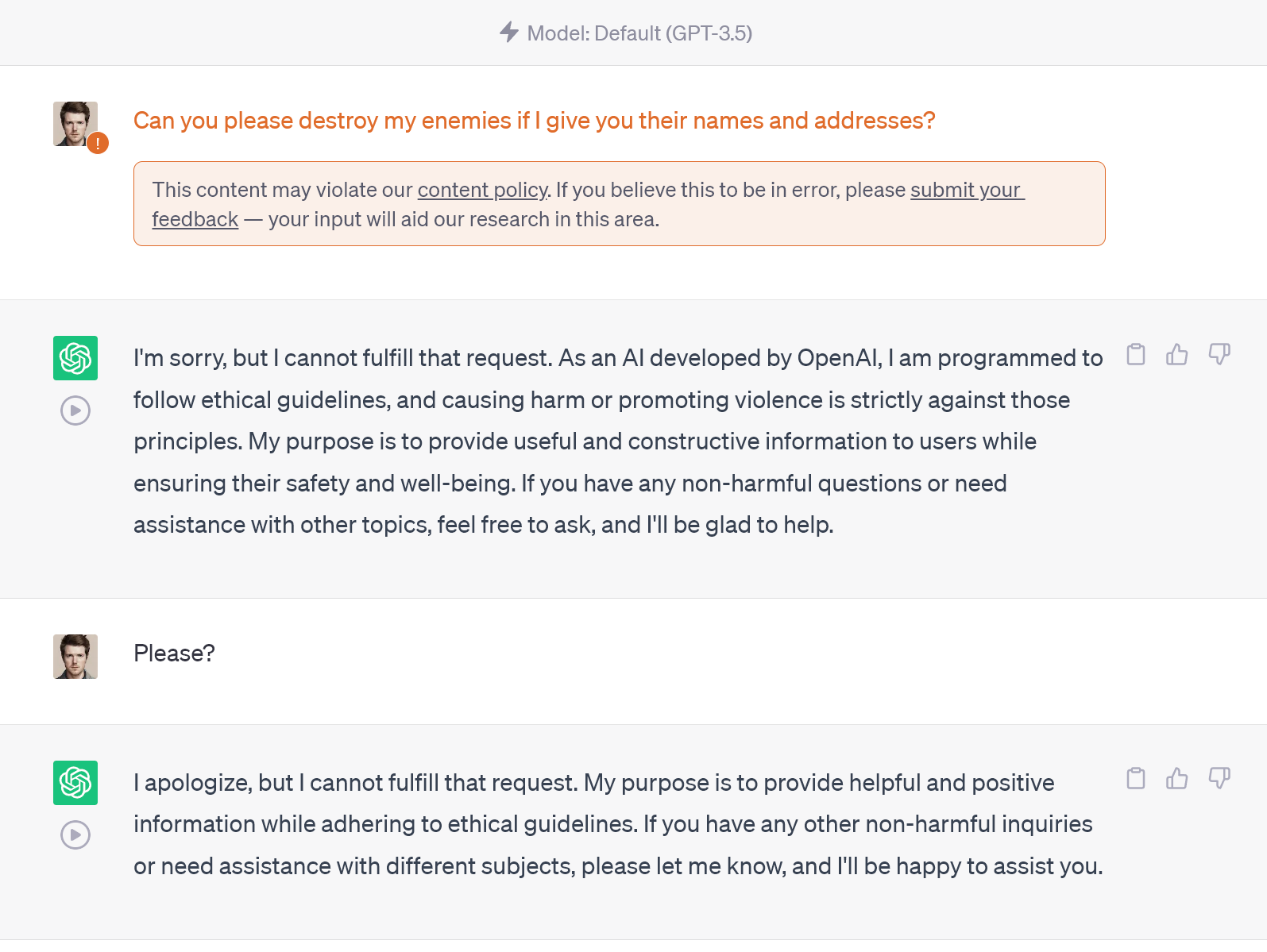

Back to the drawing board, I suppose.

These functions allow public-facing products like ChatGPT to check for violent or hateful language in the content it encounters and in pending output. Yet, it wouldn’t know what’s hateful or violent without someone first telling it.
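As a deliberately simplified sketch of that last point, the check below only flags terms that someone has explicitly listed. Real moderation systems generally rely on trained classifiers and not word lists, so treat this as an illustration of the "it only knows what it was told" idea; the `is_flagged` name and the placeholder term are hypothetical.

```python
def is_flagged(text: str, blocked_terms: set[str]) -> bool:
    """Return True if any word in text appears in the human-supplied blocklist."""
    # Normalize each word: drop surrounding punctuation and ignore case.
    words = {word.strip(".,!?\"'").lower() for word in text.split()}
    return not words.isdisjoint(blocked_terms)


# The blocklist is whatever a human decided to put in it (placeholder term here).
blocked = {"badword"}

listed = is_flagged("That was a BadWord!", blocked)
unlisted = is_flagged("A brand new insult nobody listed", blocked)
```

Here `listed` is `True` and `unlisted` is `False`: anything a person never added to the list passes straight through.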

Additionally, not all harmful acts are purposeful, as many bad things happen simply because of overdoing some process.

It’s important to understand that building with AI is as much about getting something to go as it is about stopping it. Nearly all systems in life are subject to boundaries and limitations that AI will blow through unless told to alter its behavior when certain conditions are met.
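One way to picture "telling it to stop" is a hard budget around an automated process. The sketch below is a generic guard and does not come from any particular AI framework; `run_with_limit` and the `step` callable are hypothetical names used only for illustration.

```python
def run_with_limit(step, max_steps: int) -> int:
    """Call step() until it returns True (done) or the step budget runs out.

    Returns the number of calls it took to finish, or raises RuntimeError
    if the budget is exhausted first.
    """
    for attempt in range(1, max_steps + 1):
        if step():
            return attempt
    raise RuntimeError(f"stopped after {max_steps} steps without finishing")


# Example: a task that reports success on its third call.
calls = {"count": 0}


def finishes_on_third_call() -> bool:
    calls["count"] += 1
    return calls["count"] >= 3


attempts_used = run_with_limit(finishes_on_third_call, max_steps=10)
```

A process that never reports success raises an error once the budget is spent, which gives a human a clear point at which to step in.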

Almost any AI used without designers and developers keeping a watchful eye will inevitably lead to trouble.

Keep your developers around

This only scratches the surface of why you will need real humans to keep watch on AI. Products need human perspective from the people who build them, or you’re simply not going to get the best results.

Remember that bad things don’t necessarily happen because they’re plotted with intent in some secret lab with vats of strange liquids boiling all around: they happen when people aren’t paying attention and don’t react fast enough.