All About Content Moderation: The Good, Bad, And Ugly

Content moderation has been topical ever since the web began accepting user-generated content.

Still, it has been especially relevant in recent discussions because of its role in everything from handling touchy subjects to simply keeping conversations on track.

Here, we’re going to dive in and discuss all things related to content moderation.

What is content moderation?

The idea of content moderation is just as the name implies: it’s a process of administering discussions for some purpose.

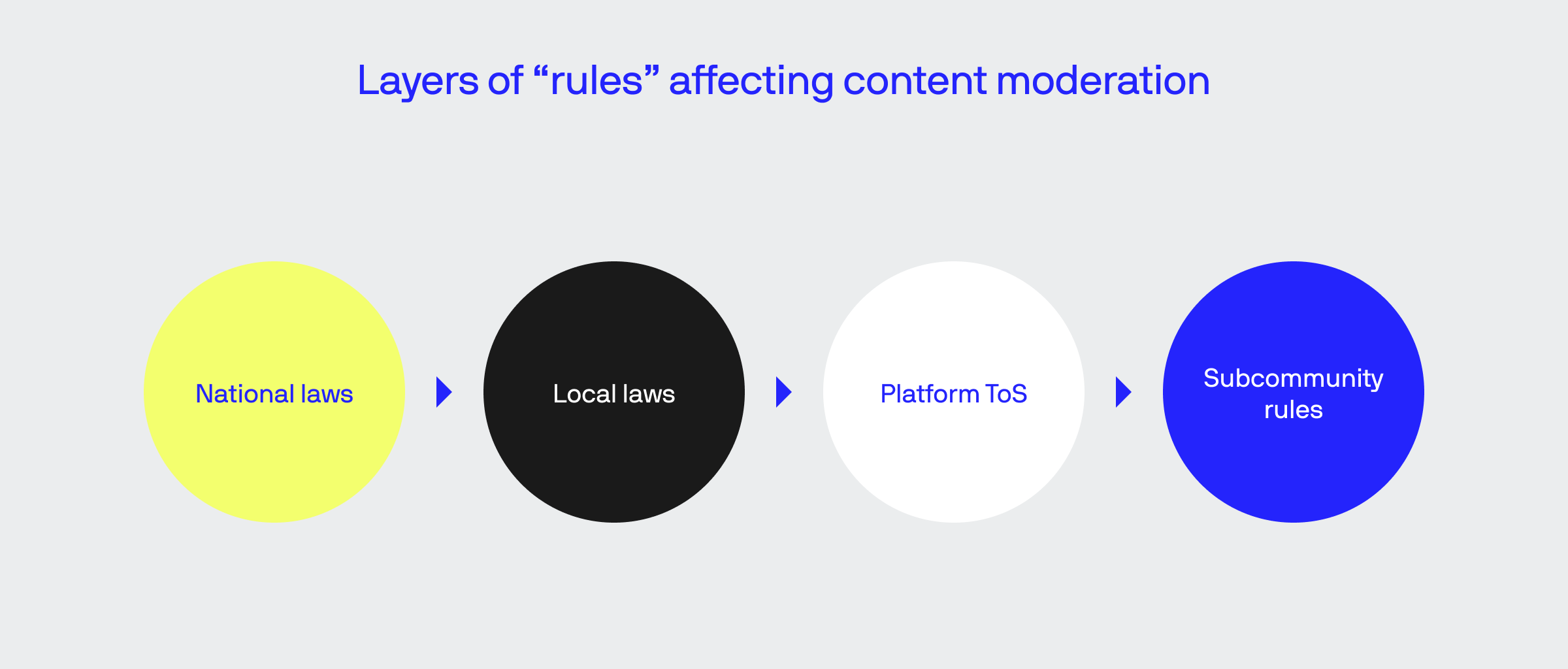

Essentially, most conversations and content published on the public web are subject to a layer of rules, much as the files, programs, and hardware components on a modern PC or smart device are subject to a layer of permissions and policies.

The laws in any given region should provide a baseline for what people are allowed to openly say or share, though these aren’t always spelled out in a platform’s terms of service (ToS). More specific expectations are typically embedded in the ToS and, further, in the rules of subcommunities (where applicable).

Of course, the execution depends on a few factors, from the tools and resources available to the time and cost of any human involvement.

Why is content moderation needed?

Content moderation exists for a few different reasons.

All public-facing content on the web is essentially subject to conversational norms, as if the conversations were happening in the real world. Unlike real-world conversations, those on the web are often much more aggressive because of the reality of the human condition and circumstances that come together in the worst possible way.

The enforcement of laws regarding speech, both national and local, is different around the world. In some cases, platforms require heavy modifications for use in some countries, if they’re allowed at all.

Here, clashes arise in the US and many other nations for a few different, intersecting reasons. | Source: Mathias Reding on Pexels

We know that in small groups (fewer than Dunbar’s number, or about 150 people), people can usually manage themselves with minimal formal governance. Eventually, when a group becomes large enough, its complexity and sheer size will almost always expose it to undesirable behavior.

Maintaining a group’s integrity requires rules. Among the users of a digital platform, there will always be some people who seek to disrupt or gain attention when there are rewards of the tribe to be had, as with social platforms.

Content moderation aims to maintain group harmony by deflecting ideas or content that don’t align with the group’s values or that are intentionally attention-seeking or disruptive, as well as anything off-topic.

Real-world examples of content moderation

You likely have an app or browser tab open at this very moment where content moderation is in effect. And if it’s working correctly, you should see minimal objectionable material.

Each platform below has its own ways of dealing with content moderation.

Facebook has group-specific rules

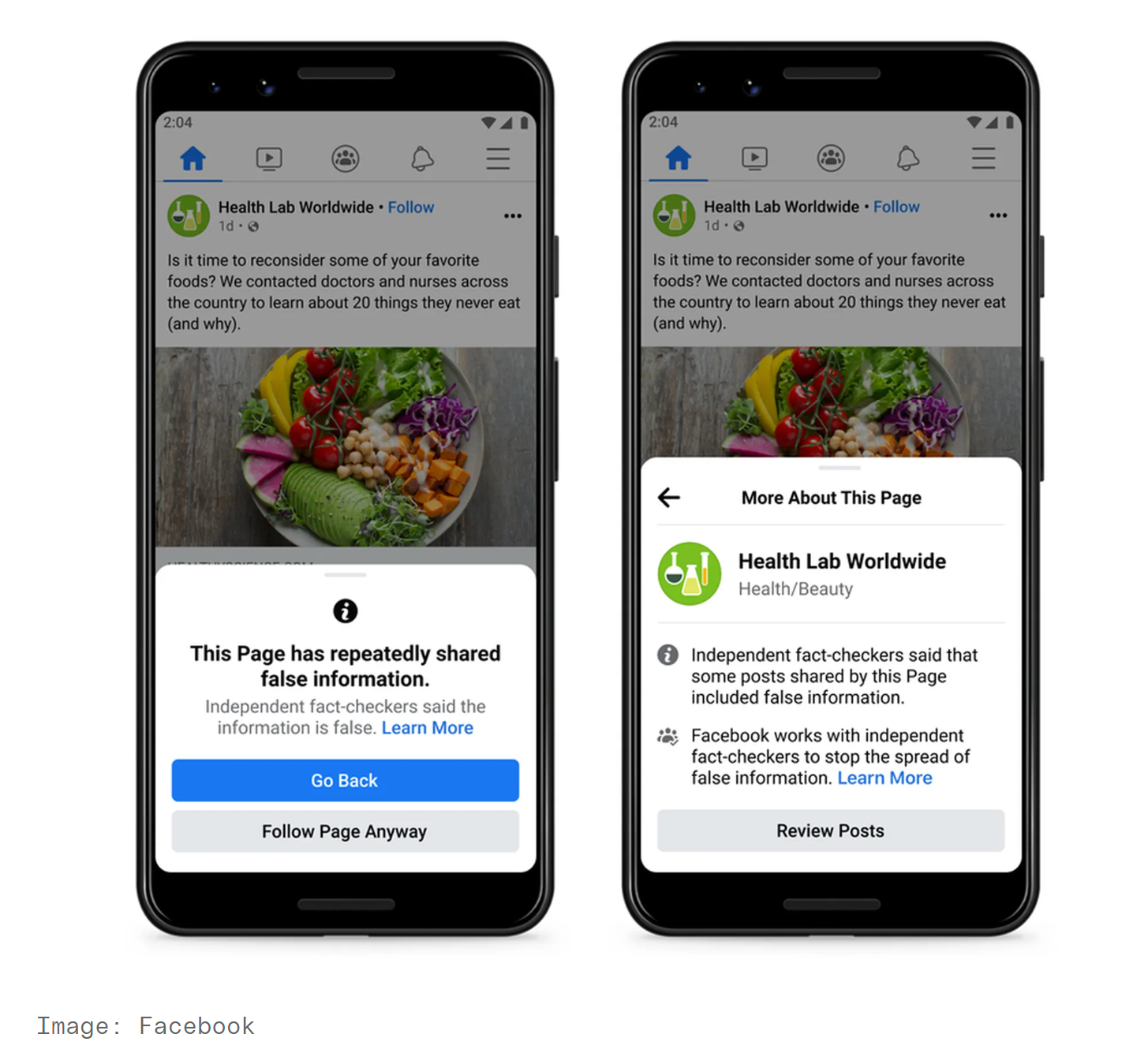

Users of Facebook are likely familiar with the UI elements that tend to pop up on posts from unreliable sources and people.

On Facebook, you can be anything you want, including wrong – some stuff gets a pass, but often gets tagged to indicate a source is sketchy. | Source: The Verge

Facebook is now well-known for policing the sharing of specific ideas. In an attempt to appease everyone, it allows some groups and accounts to share information that is (usually) verifiably wrong or misleading, but with warnings attached.

Facebook has its own community standards in place for the entirety of the site, but every group will have its own set of rules. In most cases, users have complete control of the content they create, from customizable privacy settings to the ability to delete other users’ comments on their own posts.

Why people tend to behave well on Reddit

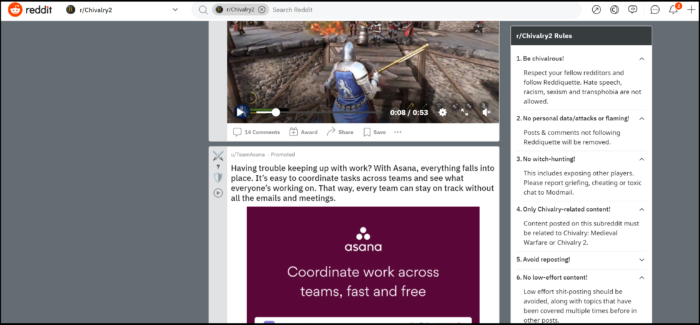

There are a few reasons that Reddit offers a less hostile experience for its users, but we’re going to quickly look at one of the key reasons today.

People are better behaved on Reddit because of its structure, though we can’t dive all the way into why that is here.

Reddit is natively divided into small groups called subreddits, each of which has its own rules defined by the creator. There are usually multiple subreddits for almost every topic you can think of (and many more you had no idea existed) where people tend to have discussions with purpose.

Like anywhere else, Reddit still hosts many trolls, but thanks to mechanics similar to YouTube’s “like or dislike” feature, undesirable content can be “downvoted,” which pushes it out of sight under most users’ filter settings.
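To make the downvote mechanic concrete, here is a minimal Python sketch of hiding content that falls below a viewer’s score threshold. The `Comment` class, the `visible_comments` function, and the `min_score` default of -4 are assumptions made for illustration; this is not Reddit’s actual ranking or filtering logic.

```python
from dataclasses import dataclass


@dataclass
class Comment:
    text: str
    upvotes: int = 0
    downvotes: int = 0

    @property
    def score(self) -> int:
        # Net score: each upvote adds one, each downvote subtracts one.
        return self.upvotes - self.downvotes


def visible_comments(comments, min_score=-4):
    """Return the comments whose net score meets the viewer's threshold.

    min_score is a hypothetical per-user setting, not a real platform default.
    """
    return [c for c in comments if c.score >= min_score]


comments = [
    Comment("Helpful answer with sources", upvotes=12, downvotes=1),
    Comment("Off-topic rant", upvotes=1, downvotes=9),
]

# Only comments at or above the threshold are shown to this viewer.
for c in visible_comments(comments):
    print(c.score, c.text)
```

Nothing is deleted in this sketch. The heavily downvoted comment simply stops appearing for viewers whose threshold is higher than its score, which is the “out of sight” effect described above.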

Aside from ads run by the platform, Reddit tends to be a place where people have real conversations, so solicitation, where it’s not permitted, tends to be dealt with aggressively through tactics like shadowbanning.

LinkedIn works because it has minimal disruptive users

Fortunately, most users here got the memo that this platform is for professional communications.

The best part about LinkedIn is that disruptive users are few and far between. You can see what we are up to on LinkedIn here.

LinkedIn has policies governing its site and subgroups, just like the platforms mentioned above.

The platform has minimal disruptive users, but it is thick with people all trying to be heard among the others shouting into a mostly deaf digital void. Other platforms also allow admins to review posts before they go live in their groups, but this feature is used especially often on LinkedIn.

Since the platform does cater to building B2B connections as well as connecting talent to businesses, most places are accepting of marketing efforts unless stated otherwise. Like Facebook or Twitter, people are mostly free to post safe-for-work material to their own timelines as they see fit.

How content moderation works

Several methods are available to moderate content, so it’s important for digital product owners to understand the capabilities and limitations of the different techniques.

Hands-on moderation

Though there are many excellent software-driven solutions on the web, human administrators are usually still needed.

Perhaps you’ve been improperly flagged when trying to post content to social media, even though it didn’t violate the terms in any way?

Things can go wrong, even with the best systems, and many systems need manual intervention to “learn” what to look for. The point is that every system will require humans on some level to oversee some content moderation functions, depending on the product’s design and goals.
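One way to combine automation with human oversight is to let software act only on the clear-cut cases and send everything else to a person. The Python sketch below assumes a hypothetical automated score between 0 and 1 (higher meaning more likely to break the rules) and made-up thresholds. The names `route`, `submit`, `remove_above`, and `approve_below` are illustrative and not taken from any particular product.

```python
def route(violation_score, remove_above=0.9, approve_below=0.2):
    """Decide what happens to a post given an automated score in [0, 1].

    Both thresholds are illustrative assumptions; a real system would tune them.
    """
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if violation_score >= remove_above:
        return "remove"        # confident violation: act automatically
    if violation_score < approve_below:
        return "approve"       # confident non-violation: publish
    return "human_review"      # uncertain: leave the call to a moderator


# Posts the software is unsure about wait here for a human moderator.
review_queue = []


def submit(post, violation_score):
    """Route a post and queue it for a human if the score is inconclusive."""
    decision = route(violation_score)
    if decision == "human_review":
        review_queue.append(post)
    return decision


print(submit("Buy cheap followers now!!!", 0.97))
print(submit("Great write-up, thanks.", 0.03))
print(submit("This take is garbage.", 0.55))
print(review_queue)
```

Widening the gap between the two thresholds sends more posts to people and fewer to automatic handling, which is the trade-off between cost and accuracy that this section describes.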

If “this phrase” is found, then delete

Many platforms trigger an automated action when specific phrases appear in the content text.

Luxury accommodation at the first and last annual Fyre Festival. | Source: Daily Beast

This technique was famously abused in recent times by a team helping manage the official Instagram account during the Fyre Festival debacle. If you watch the documentary on Hulu from the link, you can learn all about the scandalous behavior that defined this whole affair.

To keep matters under wraps, the promoters set Instagram to automatically prevent comments of all kinds from making it onto any of their threads, even going so far as to block the word “festival” and several related key phrases.
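The “if this phrase is found, then delete” rule from this section can be sketched in a few lines of Python. This is an illustrative sketch, not how Instagram implements its comment filters. The function names are made up, and the second blocked phrase (“refund”) is a hypothetical example, since the actual list of related phrases isn’t given here.

```python
import re


def build_filter(blocked_phrases):
    """Compile one case-insensitive pattern matching any blocked phrase.

    Assumes each phrase starts and ends with a letter or digit, so the
    \\b word boundaries behave as expected.
    """
    if not blocked_phrases:
        raise ValueError("at least one blocked phrase is required")
    escaped = (re.escape(phrase) for phrase in blocked_phrases)
    return re.compile(r"\b(?:" + "|".join(escaped) + r")\b", re.IGNORECASE)


def should_hide(comment, pattern):
    """Return True if the comment contains any blocked phrase."""
    return pattern.search(comment) is not None


# "festival" comes from the story above; "refund" is a hypothetical addition.
pattern = build_filter(["festival", "refund"])

for comment in ["Where is the FESTIVAL?", "I want a refund", "Nice photo!"]:
    print(should_hide(comment, pattern), comment)
```

A filter like this is blunt. It hides every comment that mentions a blocked word, whether critical or harmless, while a small variation such as the plural “festivals” slips past the whole-word match.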

AI & ML are leading the way for digital solutions to moderate content

As with many other areas where AI and ML are helping processes move faster and more efficiently, most leading platforms are using sophisticated systems to help monitor and manage user-generated content.

Here, we have a couple of different kinds of technologies to analyze user-generated content.

Natural Language Processing (NLP).

While these tools are often associated with speech-to-text (STT) and text-to-speech (TTS) systems, they also help recognize and learn new language patterns. As language evolves through emerging slang and other changes that occur with time, these systems can learn patterns to both replicate speech and, in some cases, recognize abusive patterns. For example, some nefarious groups use codes to identify themselves, which the systems in place on most platforms often learn and likely log.

Computer vision.

These systems use layers of logic to recognize imagery, often in real time, to moderate content. They learn to recognize objects in imagery, such as adult or violent material, to prevent it from making it onto a public platform. Computer vision can also be trained to recognize symbols, thus building on the capabilities of NLP described above to help with everything from observing social patterns to identifying hate groups.

These systems work together to bring functionality to tools like those that look or listen for copyrighted material in user-generated content. In time, these systems will also learn to look for other forms of copyright violation beyond infringements of major motion pictures and music.

As you’re undoubtedly well aware, censorship often intersects with content moderation. | Source: Gianfranco Marotta on Pexels

There’s no denying that censorship becomes topical in many content moderation scenarios.

You may have read the section about the Fyre Festival and said, “That was censorship,” because, well, it was.

In other cases, censorship can be required by law in some parts of the world, or it can be instituted when it’s decided that keeping certain information from the public eye is in everyone’s best interest. Other times, users can loudly claim they’re being censored, even though they’re simply being subjected to standard content moderation rules.

And of course, we see situations like the recent ones in which users were upset after they shared “anti-vax” material to a platform and were subsequently censored by a content moderation system for being objectively incorrect. Here, we saw a lot of accusations of censorship when it was a poorly executed attempt at thwarting a common psychosocial reaction to a new rule, i.e., automatic opposition because of the perceived threat to one’s agency.

For businesses, there are all kinds of tools on the market to build a custom content moderation system. With that said, it’s a topic that often becomes entangled in controversy. As such, it’s unreasonable to expect that you’re going to make everyone happy all the time.

Content moderation is a tricky but necessary job

Never assume that just because “people shouldn’t,” “they won’t.” It is human nature to disrupt, seek attention, and “make the thing do something it’s not supposed to.” As such, businesses need to prepare for all possible scenarios.