DALL-E vs Midjourney: A Comparison of Two Popular Text-to-Image GenAI Tools

Beneath the novelty of modern GenAI image generation platforms like OpenAI's DALL-E and Midjourney lies real, if varying, potential for business.

Folding either of these two systems (or both!) into business toolkits can unlock new ways to improve productivity and output quality.

To understand how these AI-driven systems can deliver results in business:

- we’ll start by explaining what they are,

- then discuss their key differences,

- and wrap up with a few examples of how each handles different prompts.

Introducing DALL-E and Midjourney

Like related GenAI technologies, such as LLMs like ChatGPT, these systems take a natural language prompt written by the user and transform it into an image, or rather a set of images the user can iterate upon.

- DALL-E was developed by OpenAI, and its first version was based on the GPT transformer architecture that powers ChatGPT.

- Midjourney, on the other hand, is a bit more enigmatic. While specific details about its architecture are not as widely disclosed as DALL-E’s, it is understood that Midjourney also relies on a form of transformer architecture adapted for image generation. It has been noted for its artistic style and the unique interpretations it brings to image generation prompts.

Both are relatively new: Midjourney officially landed on the market in June 2023 after an initial stint in open beta that began in July 2022.

DALL-E has been around a bit longer, with its first version emerging on the scene in 2021, built on GPT-3. DALL-E 2 became available in July 2022, with the API following in November of that year. DALL-E 3 is the most current version (as of October 2023), available both as an integration in ChatGPT Plus and as a standalone version.

Similar to how developers can use the ChatGPT API to build custom solutions like AutoTweeter from our BlueLabel Cookbook/Toolbox, these image generators can be integrated into other products to take advantage of their respective offerings. Note, however, that only DALL-E currently offers an official API; Midjourney does not have one at this time.
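To make the integration point concrete, here is a minimal sketch of requesting an image from DALL-E 3 over HTTP using only Python's standard library. The endpoint URL and the JSON fields reflect our understanding of OpenAI's public Images API and should be checked against the current API reference. The function names and the `OPENAI_API_KEY` environment variable are our own choices for illustration.

```python
import json
import os
import urllib.request

# Assumption: endpoint and field names follow OpenAI's public Images API;
# verify them against the current API reference before relying on this.
IMAGES_ENDPOINT = "https://api.openai.com/v1/images/generations"


def build_image_request(
    prompt: str, api_key: str, size: str = "1024x1024"
) -> urllib.request.Request:
    """Build (but do not send) a POST request asking DALL-E 3 for one image."""
    payload = {"model": "dall-e-3", "prompt": prompt, "n": 1, "size": size}
    return urllib.request.Request(
        IMAGES_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def generate_image_url(prompt: str) -> str:
    """Send the request and return the URL of the generated image."""
    # Hypothetical setup: the API key is read from an environment variable.
    request = build_image_request(prompt, os.environ["OPENAI_API_KEY"])
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["data"][0]["url"]
```

Calling `generate_image_url("A lush vertical farm with rows of plants under purple LED lights.")` needs network access and a valid key. Building the request separately from sending it keeps the payload easy to inspect and test offline.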

DALL-E and Midjourney: Comparing Key Parameters & Capabilities

Under the hood, each system is a model with billions of learned parameters that encode associations between language and pixel output.

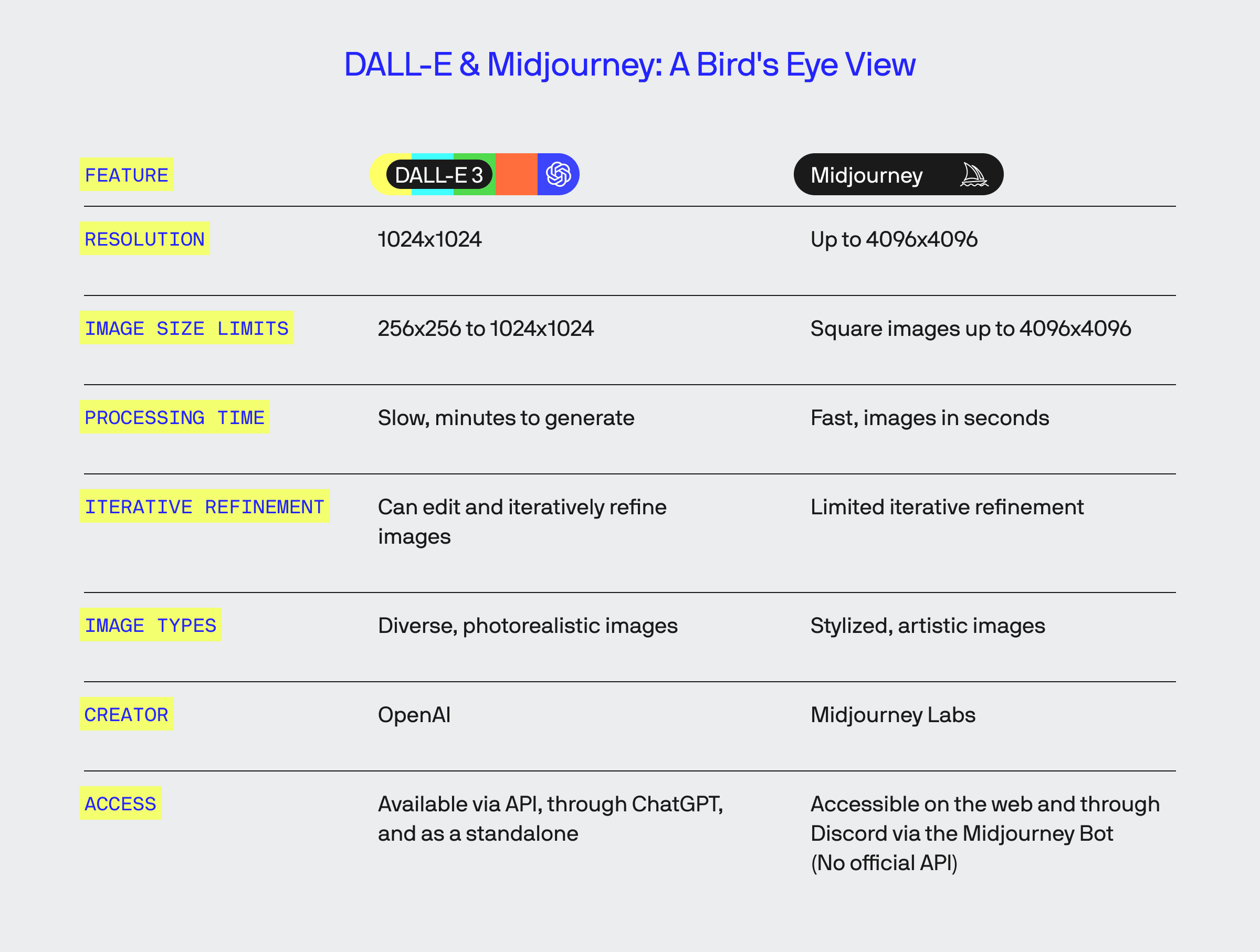

Both systems are quite similar in terms of their output. To compare key differences, we’ve created this small table below.

While both can generate quality images of varying styles in moments, the manner in which the user interfaces with each is a little different.

DALL-E is best served by concise, conversational prompts that describe the image the user wants to create. Midjourney also accepts natural language but is best served by shorter phrases and keywords that instruct the system on how to design the image.

One benefit that DALL-E possesses over Midjourney is the ability to natively design around a sample image and iterate on it based on new input. Midjourney does allow for iterative development, but the user is limited to the handful of variations (currently four) the system produces from each prompt.

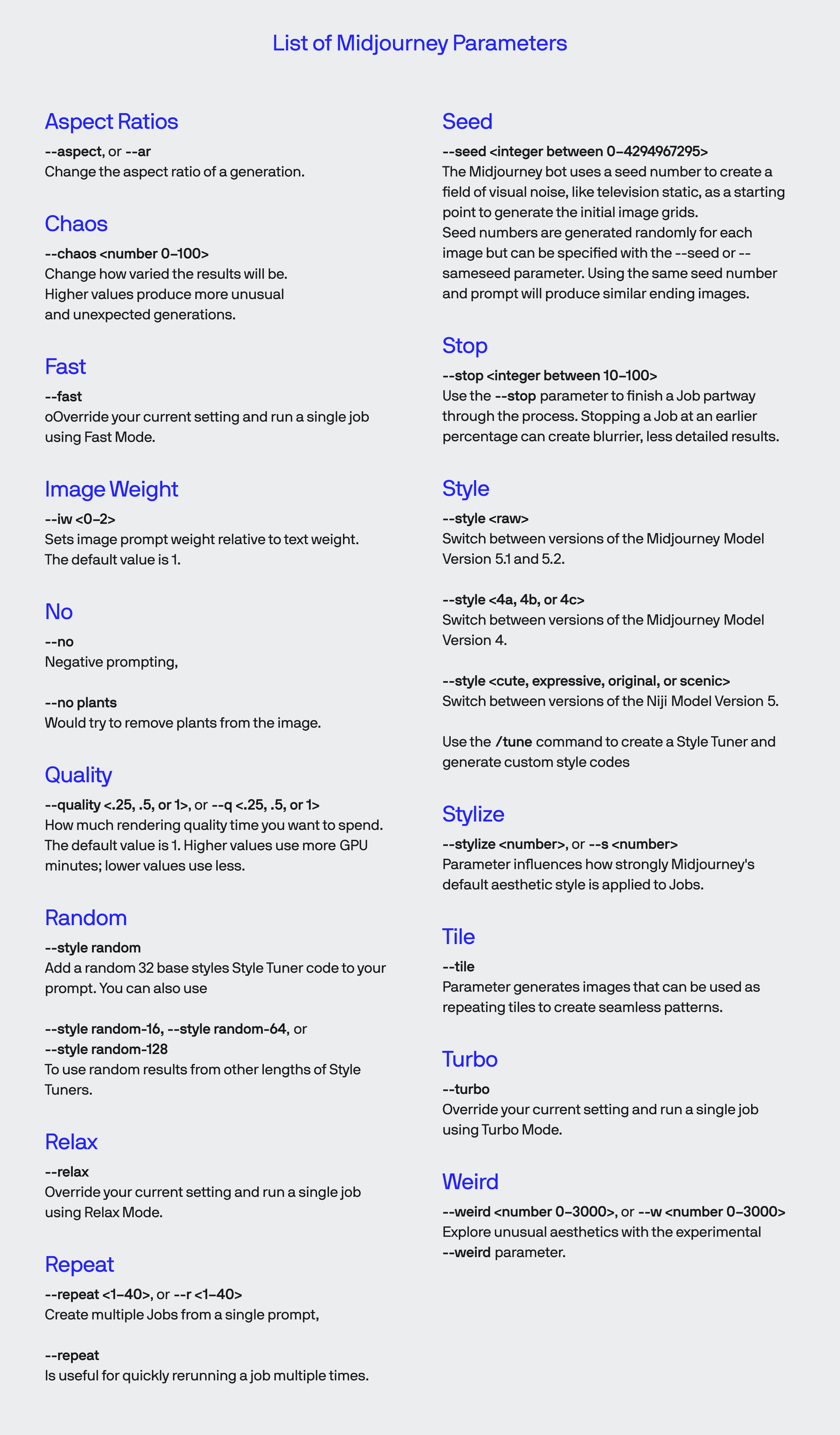

Though Midjourney is less suited to iteratively refining images through additional prompts, it offers more control through input parameters that can be embedded inside the prompts.

Some current examples of these are as follows:
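As a rough illustration, Midjourney parameters are appended to the end of a prompt as `--name value` pairs. To our knowledge, `--ar` sets the aspect ratio, `--s` (stylize) controls how strongly Midjourney applies its own aesthetic, and `--niji` selects the anime-oriented model that appears in a later example in this article. The helper below is a hypothetical convenience for composing such prompts. It is not part of any Midjourney tooling, and the resulting string would still be pasted into the Midjourney Bot on Discord.

```python
def with_midjourney_params(prompt: str, **params: object) -> str:
    """Append Midjourney-style `--name value` parameters to the end of a prompt."""
    parts = [prompt.strip()]
    for name, value in params.items():
        # Flags that take no value are passed as True and emitted bare.
        parts.append(f"--{name}" if value is True else f"--{name} {value}")
    return " ".join(parts)


# Example values are illustrative only.
print(with_midjourney_params("A lush vertical farm under purple LED lights", ar="16:9", s=250))
```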

In short, DALL-E is a little slower and requires more natural-sounding prompts to produce the desired content effectively. In return, it is better at creating photorealistic images and, overall, produces more diverse output.

Midjourney is faster and better suited to artistic images. While it is not as good at iterative development, it offers customization through parameters entered alongside prompts.

Comparing the output from DALL-E and Midjourney

Here, we'll demonstrate how prompts run through each system produce different results.

We used the DALL-E standalone and the Midjourney Bot via Discord for this test.

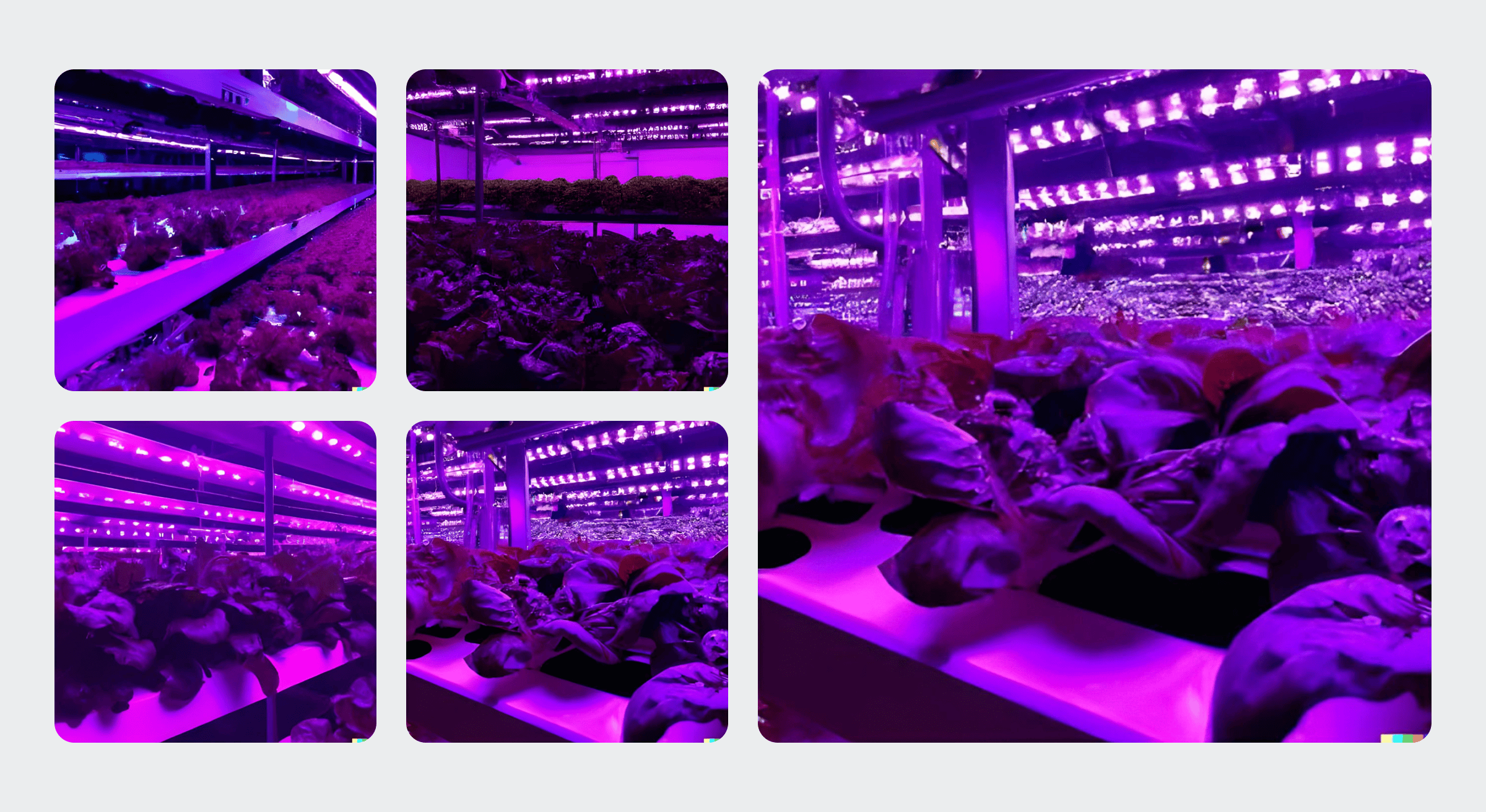

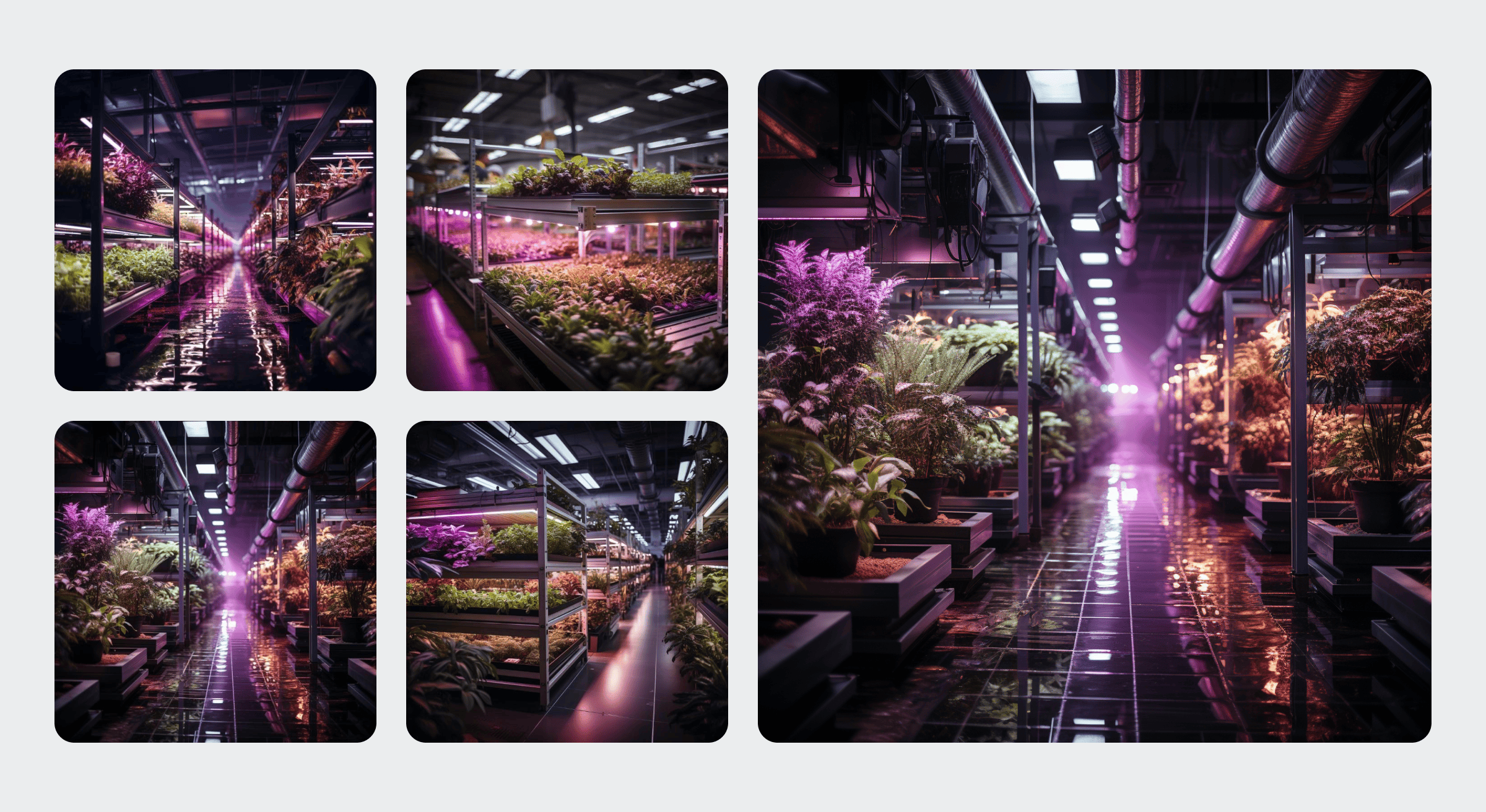

DALL-E vs Midjourney: “Futuristic Agriculture” Theme

In this first example, we created images using an aesthetic that we recently used for new content focusing on the intersection of traditional farming with new tech like GenAI. The prompt we gave to both systems is as follows:

A lush vertical farm with rows of plants under purple LED lights. Ultra-modern hydroponics equipment waters the crops automatically. The farm is clean, efficient, and high-tech.

First is the output from DALL-E:

Next, the output from Midjourney:

We used this prompt to showcase a couple of things:

- Both systems can work from the same prompt and, in some cases, produce similar results.

- Each can produce realistic images with the right prompt.

It appears that the “purple lighting” in the prompt was an element that DALL-E leaned on heavily to create the images you see. While this makes it look more like a movie scene, it manages to build out an otherwise realistic-looking hydroponic environment.

Despite the suggestion that Midjourney isn't as well equipped for photorealism, we can see above that the imagery it opted for in this case leans toward the realistic, without any explicit command to do so.

Without context pushing a realistic scenario toward science fiction, it creates a sensible-looking environment.

With that said, DALL-E appears to create a more believable-looking hydroponic setup (minus the over-the-top hue) compared to Midjourney, which looks more like a grow room equipped with automated watering and lighting, rather than a true hydroponic setup.

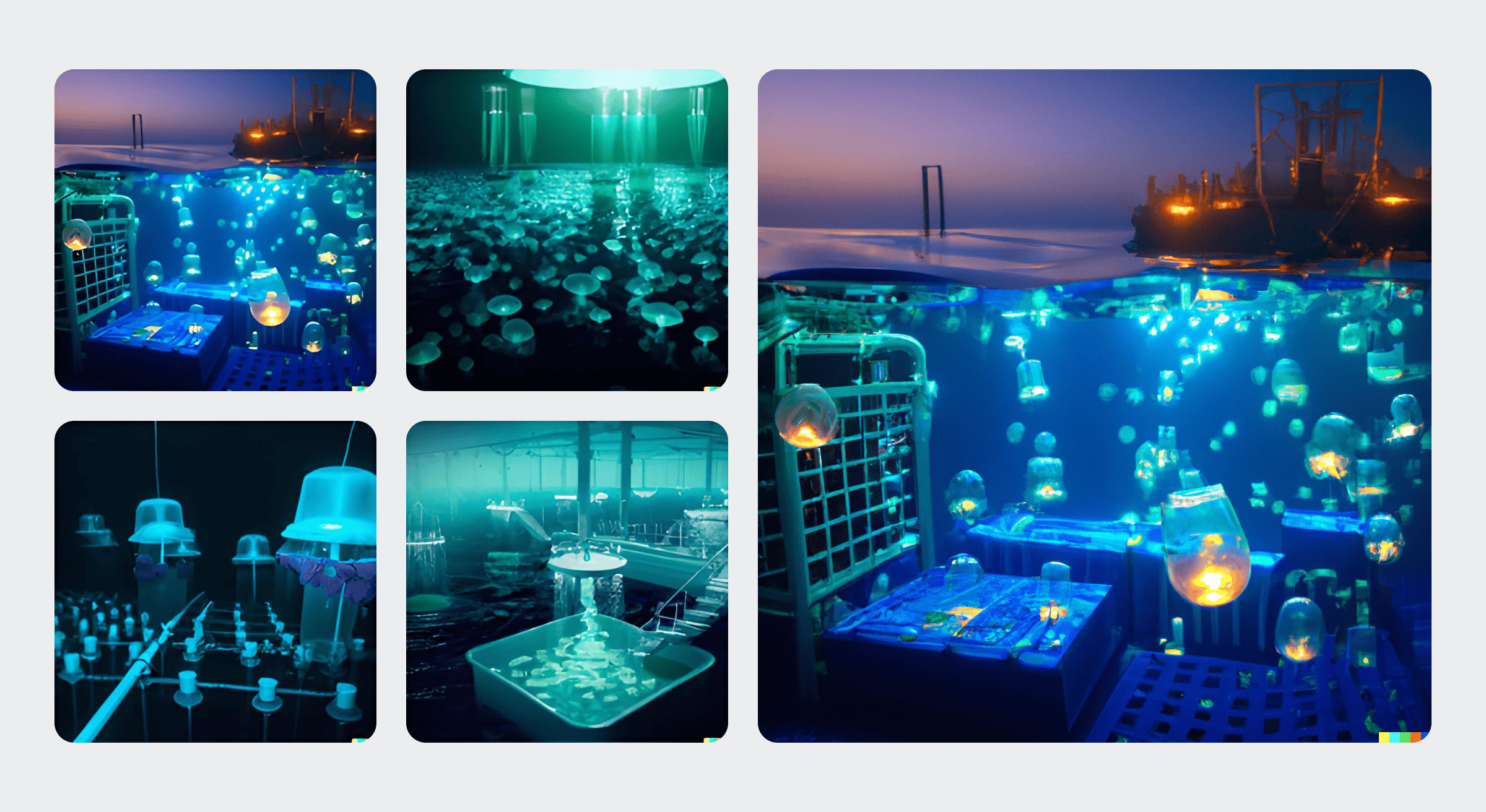

“Jellyfish farm” theme

The science and new developments in biofuels are exciting: when executed correctly, some designs could mitigate emissions and lower the carbon footprint of certain businesses.

This next example uses a spin on this idea, but instead of algae, which is being touted as a possible fuel source for datacenters, we mixed in some fiction and opted for jellyfish instead. The prompt we plugged into each system was:

An underwater ocean farm for growing futuristic glowing jellyfish that are harvested as a new, sustainable biofuel source.

The output from DALL-E:

The output from Midjourney:

The instructions here are a bit more nebulous because this kind of tech isn't real, so there's no standard for what it would look like in the real world.

For example, would these “glowing” jellyfish be attached to some kind of wiring to tap into an intrinsic power source, or would the kinetic energy from their movement in an environment be harnessed through some unseen mechanism?

Whatever the scenario, each system has only the information and context it gets from the prompt and its training data.

The same ambiguity can emerge when using these systems for modeling in fields like architecture, electromechanical engineering, city planning (with solutions like Delve, which we helped build with Sidewalk Labs), and many others.

This is where real potential comes into play – by integrating systems like DALL-E and training them yourself, you can refine these powerful tools to produce consistent output, which will be ideal for offering generative design features to your users.

As a quick example, imagine a real estate app linked with retailers that home buyers could use to furnish and decorate rooms with items found around the web, providing a visualization and allowing users to purchase items securely. Such an app could also make use of existing imagery to integrate existing effects into the mix, thus helping users maximize their space and decorate accordingly.

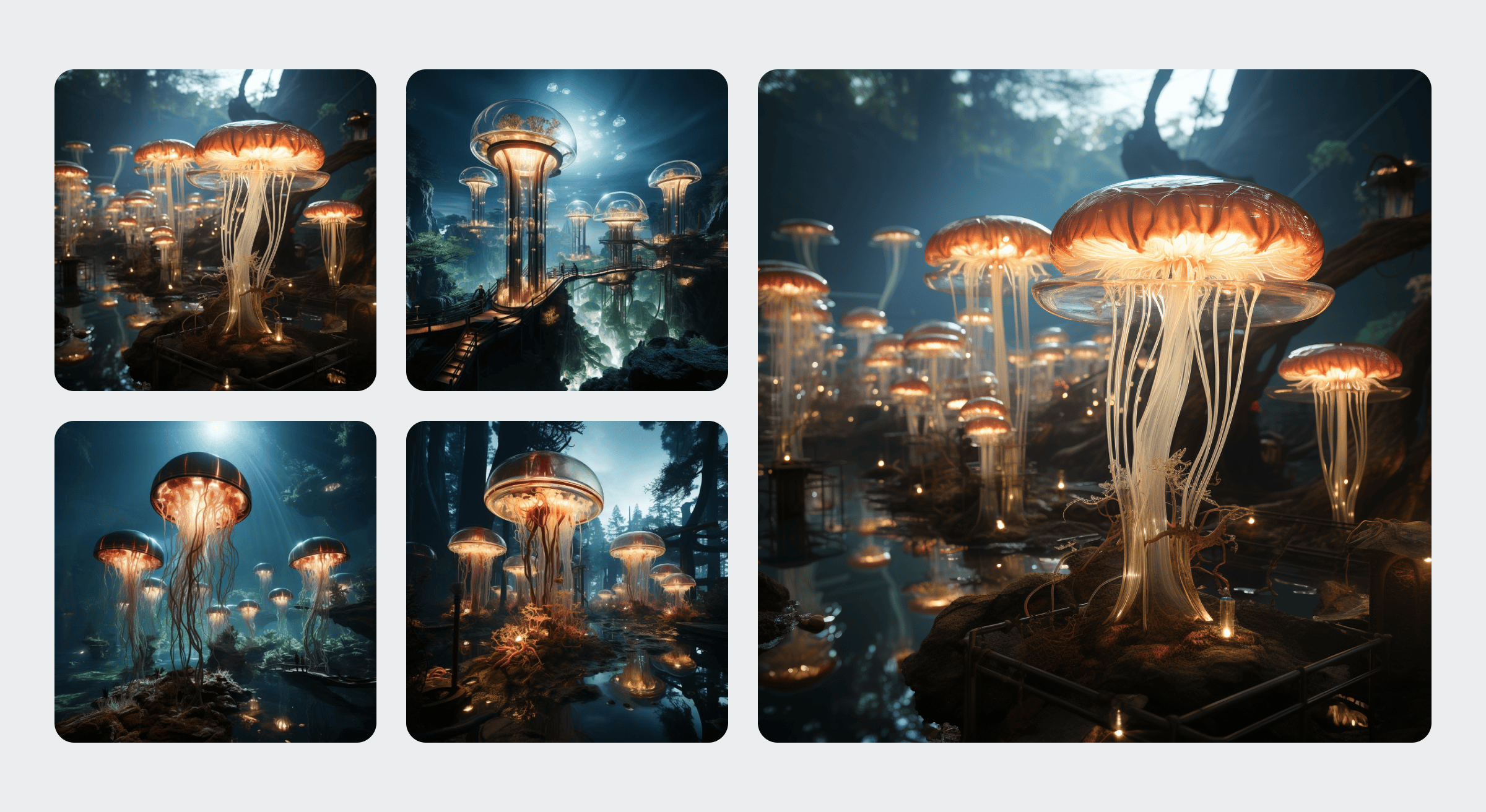

“Recognizable, animated character” theme

This example uses Link from Nintendo's Zelda series to show off how more cartoony images are handled. It is unique in that it involves copyrighted works and other themes that currently conflict with the more “G-rated” material the systems aim to produce.

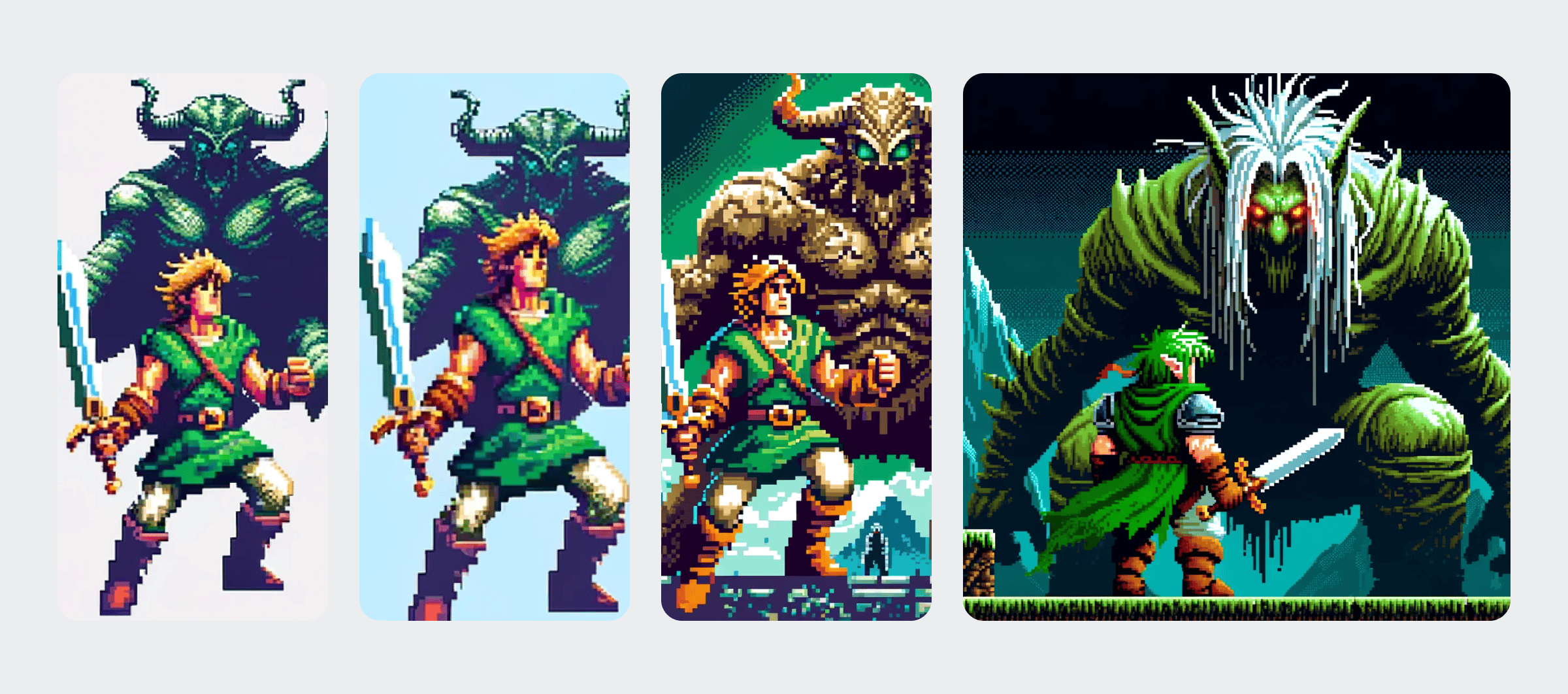

This one is structured a bit differently. We'll start with the Midjourney prompt that was used to create a scene with Link from the Zelda series facing off against an unspecified monster:

Link from Zelda, huge monster on background, 8 bit image, pixel style --niji 5 --s 750

Midjourney’s output was:

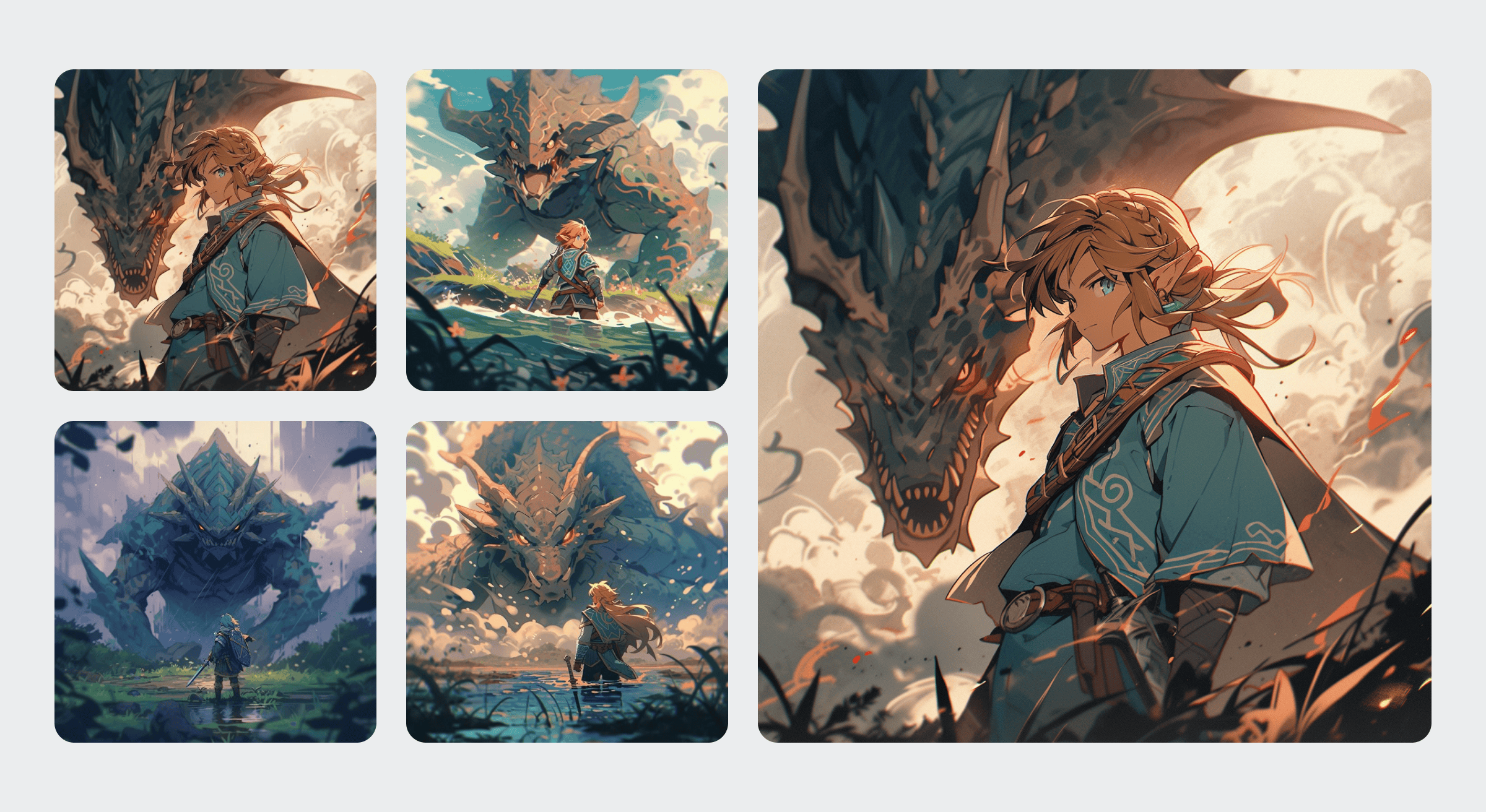

This was an image we already had from one of our testing endeavors in Midjourney. As such, the prompt was modified to remove the Midjourney parameters and fed to DALL-E.
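We removed the parameters by hand, but the same step is easy to automate when reusing many Midjourney prompts elsewhere. The sketch below assumes that parameters always trail the descriptive text and begin with a double hyphen. It also tolerates the en dash or em dash that text editors often substitute for `--`. The function name is hypothetical.

```python
import re

# Matches the start of the trailing parameter section: whitespace, a double
# hyphen (or an en/em dash substituted by a text editor), then a letter.
_PARAM_START = re.compile(r"\s+(?:--|\u2013|\u2014)[A-Za-z]")


def strip_midjourney_params(prompt: str) -> str:
    """Return the prompt text with any trailing Midjourney parameters removed."""
    match = _PARAM_START.search(prompt)
    base = prompt if match is None else prompt[: match.start()]
    return base.strip()


midjourney_prompt = (
    "Link from Zelda, huge monster on background, 8 bit image, pixel style "
    "--niji 5 --s 750"
)
print(strip_midjourney_params(midjourney_prompt))
```

Because everything from the first parameter onward is dropped, single hyphens inside the description (for example "8-bit") are left untouched.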

At first, as with the first two examples, we used the standalone version with this prompt:

Link from Zelda, huge monster on background, 8 bit image, pixel style.

However, this was the result after multiple attempts:

Next, we tried via ChatGPT, which was successful after several attempts. We also used a modified prompt this time in an attempt to receive multiple images as output, as is the norm for Midjourney and the standalone DALL-E console:

Link from Zelda, huge monster on background, 8 bit image, pixel style. Create 4 variations similar to the standalone DALLE variation.

Using these two prompts with DALL-E via ChatGPT, these were our results:

There are a few things to unpack here.

Trying to recreate recognizable IPs with these systems off the shelf will produce unreliable results, partially due to rules around using and repurposing protected works. This kind of use is likely to be regulated to the extent that regulation can be enforced, so businesses should ultimately avoid it.

We can see Midjourney gets the best likeness to a modern-era Link, yet it didn’t quite follow orders, despite explicit instruction and the use of parameters.

DALL-E does a better job at following instructions and achieves a great likeness in the upper collage, especially if you look past his strong, square jaw. The lower one, however, has a minimal likeness thanks to the inclusion of a green-garbed swordsman, but otherwise, it just looks like a cutscene from a generic 8-bit game.

Setting aside unauthorized reproduction of protected works, designers can use these systems for much more than simply creating realistic scenes.

Entertainment-connected businesses can find immediate use for this flavor of GenAI, so long as they’re using it to refine their content. Beyond entertainment, marketing and other creative departments will surely find ways to put these animation-focused styles to work.

How will these systems impact business?

Quite simply, not all businesses will have a need for this flavor of GenAI.

However, those with the creativity and resolve to apply this style of generative design to their products will find that these systems can significantly offload some heavy lifting.

For example, vehicle manufacturers spend a lot of time creating and refining the looks of their vehicles.

Right now, these systems can be trained to accomplish these arduous design tasks quickly, offering a new way to achieve fast approximation. This can help designers and engineers get close to a final design, shaving off some of the need for early “trial and error” processes.

In time, these systems will improve and be far more accurate with less instruction.

Businesses motivated to unlock these systems' value now through custom implementation will have a massive leg up on competitors who drag their feet.

Check out DALL-E and Midjourney for your image generation needs before the competition!

GenAI offers a new opportunity for those with the vision and know-how to implement these pieces.

Over time, certain limitations will fall by the wayside, but those looking to learn about these systems and capitalize on their offerings now will find themselves in a better position for the future than those who dally until they see a competitor do it first.

Contact us today to learn more about how this and other AI can bring about a new wave of digital transformation in your business.

Bobby Gill